As enterprises worldwide rush to embed artificial intelligence into their core operations, a fundamental and often underestimated reality is coming into sharp focus: the most brilliant algorithm is only as effective as the infrastructure supporting it. The race to AI supremacy is not just about sophisticated models but about building a resilient, secure, and scalable foundation capable of handling unprecedented computational demands. This is the new frontier where digital infrastructure becomes the critical enabler of intelligent transformation, and companies are discovering that their legacy systems are ill-equipped for this revolution. For organizations aiming to move beyond isolated experiments and deploy AI at production scale, the underlying network and data center architecture has emerged as a decisive factor in success or failure.

Beyond the Algorithm: Is Your Infrastructure Ready for the AI Revolution?

The excitement surrounding generative AI has largely centered on the capabilities of the models themselves, from creating content to automating complex analyses. However, this focus often obscures the immense strain these workloads place on enterprise infrastructure. Training a large language model involves moving petabytes of data across thousands of GPUs, which requires a network with exceptionally high bandwidth, low latency, and lossless (zero packet loss) transport. Any shortfall leaves expensive GPUs idle while they wait on the network, dramatically extending training times and potentially rendering AI initiatives economically unviable.
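To make the bandwidth argument concrete, here is a back-of-envelope sketch using the standard bandwidth term of a ring all-reduce, the collective commonly used to synchronize gradients across GPUs. The job size (10 GB of gradients, 1,024 GPUs) and the 100 and 400 Gbps link speeds are hypothetical, and the model deliberately ignores latency, congestion, retransmissions, and overlap with compute; it is an illustration of scaling, not a sizing method used by Cisco or NVIDIA.

```python
def ring_allreduce_seconds(gradient_bytes: float, num_gpus: int, link_gbps: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce.

    In a ring all-reduce each GPU sends (and receives) 2 * (N - 1) / N times
    the buffer size. Latency, congestion, retransmissions and overlap with
    compute are all ignored, so real timings will be worse.
    """
    if num_gpus < 2:
        return 0.0  # nothing to synchronize with a single GPU
    bytes_sent_per_gpu = 2 * (num_gpus - 1) / num_gpus * gradient_bytes
    link_bytes_per_second = link_gbps * 1e9 / 8  # Gbit/s -> bytes/s
    return bytes_sent_per_gpu / link_bytes_per_second


if __name__ == "__main__":
    # Hypothetical job: 10 GB of gradients synchronized across 1,024 GPUs.
    for gbps in (100, 400):
        t = ring_allreduce_seconds(10e9, 1024, gbps)
        print(f"{gbps} Gbps per GPU: {t:.2f} s per all-reduce")
    # 100 Gbps per GPU: 1.60 s per all-reduce
    # 400 Gbps per GPU: 0.40 s per all-reduce
```

Because this term is purely bandwidth-bound, quadrupling link speed cuts the estimate by a factor of four. Repeated on every training step, each of those seconds is time the GPUs may sit idle unless communication is overlapped with computation, and packet loss or congestion would only lengthen it.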

This challenge intensifies when organizations deploy AI for real-time inference, where near-instantaneous responses are critical for applications like fraud detection or customer service bots. The sheer complexity of managing, securing, and orchestrating these distributed systems at scale presents a formidable barrier. Traditional networking and data center models, designed for more predictable and siloed application traffic, simply fall short: they lack the integrated visibility and automated management needed to support the dynamic, high-performance demands of AI, forcing a fundamental rethink of infrastructure strategy.

The New Enterprise Imperative: Moving from AI Experimentation to Production

The market has decisively shifted from treating AI as an isolated research project to integrating it as a mission-critical component of daily operations. Early successes in pilot programs have created an enterprise-wide imperative to scale these capabilities to drive tangible business outcomes, such as hyper-personalized customer experiences, optimized supply chains, and enhanced cybersecurity. This transition from experimentation to production is where many organizations encounter significant friction, as the ad-hoc systems used for testing prove inadequate for enterprise-grade reliability and security.

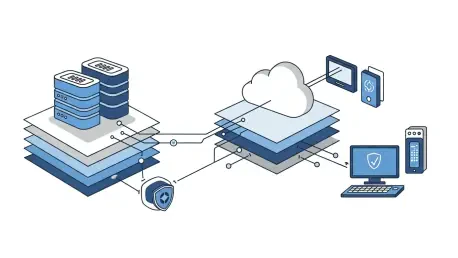

A holistic, full-stack strategy is no longer a luxury but a necessity for achieving these real-world results. Success hinges on a tightly integrated ecosystem where compute, networking, storage, and security work in concert. Without this unified approach, companies face a fragmented and brittle environment that is difficult to manage and even harder to secure effectively. The imperative is clear: to unlock the full potential of AI, organizations must build a cohesive and intelligent foundation that extends from the data center to the cloud and out to the edge.

Deconstructing Cisco’s AI Blueprint: A Multi-Layered Strategy

Cisco’s approach to this challenge is built upon a multi-layered blueprint, beginning with the company acting as its own “first and most demanding customer.” By developing and deploying a shared AI fabric internally to enhance its own service delivery, the company creates “battle-hardened” solutions before they reach the public market. This internal adoption serves as a rigorous proving ground, validating its commercial offerings against the complexities of a massive, global operation and giving Cisco deep practical expertise in what it takes to run AI at scale.

A second pillar of this strategy is the development of specialized infrastructure forged through key alliances, most notably with NVIDIA. Recognizing that GPUs are only part of the equation, the partnership has yielded integrated solutions like the Nexus Hyperfabric, a system designed to simplify the deployment of high-performance AI clusters. This “Secure AI Factory” framework centralizes management and orchestration through the Intersight platform, giving organizations a single pane of glass to oversee everything from GPU utilization to Kubernetes microservices. This integration is designed to remove much of the guesswork from building and managing AI-ready data centers.

This blueprint extends beyond the data center to address the growing need for low-latency processing at the edge. In environments like Industrial IoT (IIoT), where decisions must be made in milliseconds, transmitting data to a central cloud is not viable. Cisco’s “Unified Edge” solution brings a consistent operational model to these remote deployments, allowing engineers to apply the same data center-grade configurations and security policies to both core and edge environments. Finally, security is embedded throughout the AI lifecycle, moving beyond traditional firewalls to address AI-specific threats such as adversarial attacks, supply chain vulnerabilities, and the complex risks posed by interacting multi-agent systems.
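The edge argument above rests on simple physics: a round trip to a distant cloud region consumes part of a millisecond-scale decision window before any inference runs. The sketch below checks a latency budget using the common rule of thumb that light in optical fiber covers roughly 200 km per millisecond. The 10 ms budget, 5 ms inference time, and 1,500 km cloud distance are hypothetical, and real networks add routing, queuing, and serialization delay on top of propagation.

```python
# Rule of thumb: light in optical fiber covers roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber only (no routing, queuing or serialization)."""
    return 2 * distance_km / FIBER_KM_PER_MS


def fits_latency_budget(distance_km: float, inference_ms: float, budget_ms: float) -> bool:
    """True if network round trip plus inference time stays within the decision budget."""
    return propagation_rtt_ms(distance_km) + inference_ms <= budget_ms


if __name__ == "__main__":
    # Hypothetical IIoT control loop: 10 ms decision budget, 5 ms of model inference.
    sites = {"on-site edge node": 1, "regional cloud": 1500}
    for name, km in sites.items():
        rtt = propagation_rtt_ms(km)
        ok = fits_latency_budget(km, inference_ms=5, budget_ms=10)
        print(f"{name}: {rtt:.2f} ms RTT, within budget: {ok}")
    # on-site edge node: 0.01 ms RTT, within budget: True
    # regional cloud: 15.00 ms RTT, within budget: False
```

Even before any real-world overhead, propagation alone pushes the distant cloud past this hypothetical budget, which is why running inference close to the machines matters.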

The Voice of Experience: Insights from a Battle-Hardened Approach

The value of Cisco’s internal AI deployment extends beyond product development; it has become a source of validated patterns and practical expertise that informs its customer-facing solutions. By grappling with the challenges of integrating massive compute and network stacks for both model training and inference within its own operations, the company has developed a deep understanding of the nuanced requirements for each phase. This hands-on experience has been instrumental in shaping its architectural recommendations and product roadmaps.

This “battle-hardened” approach has also spurred significant research and development, culminating in frameworks designed to manage modern threats proactively. The Integrated AI Security and Safety Framework, for instance, was developed from real-world observations of emerging vulnerabilities in AI systems. It provides a structured methodology for applying rigorous security standards from the initial design phase through ongoing maintenance. This experience-driven approach is intended to keep Cisco’s strategies grounded in the realities of enterprise-scale AI deployment rather than in theory.

The Cisco Playbook: A Framework for Building an AI-Ready Enterprise

For organizations embarking on their AI journey, Cisco’s strategy provides a clear playbook. The first principle is to unify infrastructure, extending a consistent operational model from the data center to the cloud and the edge. This approach reduces operational friction, eliminates silos, and allows IT teams to manage a distributed environment with a common set of tools and skills, accelerating deployment and simplifying maintenance.

The second core strategy is to treat security as a foundational element, not an afterthought. This involves applying rigorous security standards proactively across the entire AI system lifecycle, from data ingestion and model training to deployment and monitoring. By designing for security from the outset, organizations can mitigate risks before they become critical threats. This proactive stance is essential in an era where AI models themselves are becoming targets for sophisticated attacks.

Finally, the playbook emphasizes preparing for the next wave of AI evolution. This includes developing the tooling and protocols necessary to transition from today’s generative AI to the more autonomous, agentic systems of tomorrow. To support this future, organizations must invest in next-generation wireless and unified management platforms to create a truly “AI-ready” network. By embracing these principles, enterprises can build a resilient and adaptable foundation capable of supporting not only current AI initiatives but also the intelligent systems of the future.