The once-futuristic concept of artificial intelligence in medicine is now a present-day reality, rapidly reshaping how healthcare is delivered and experienced, particularly across Latin America. This technological surge promises to dismantle long-standing barriers in patient-provider communication and streamline overburdened clinical systems. Yet, this wave of innovation is met with a formidable undertow of skepticism, as concerns over accuracy, privacy, and regulatory readiness cast a long shadow. The central question is no longer if AI will transform healthcare, but how the industry can harness its revolutionary potential while mitigating the significant risks it introduces.

The Promise of AI: Revolutionizing Patient Care and Efficiency

Bridging the Communication Gap

One of the most profound applications of large language models (LLMs) in healthcare is their ability to act as a universal translator, breaking down the intimidating wall of medical jargon that often separates clinicians from their patients. These sophisticated algorithms are being deployed to reinterpret complex documents, such as radiology reports, into clear, concise language accessible to someone with a middle-school education. This process does more than just simplify words; it empowers patients by demystifying their own health conditions, which in turn reduces the anxiety and confusion that frequently lead to unnecessary follow-up consultations. By fostering a more transparent dialogue, AI tools are laying the groundwork for a new standard of care where shared decision-making is not just an ideal but a practical reality, fundamentally altering the dynamics of the patient-provider relationship.
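Whether a simplified report actually reads at roughly a middle-school level can be checked with a readability score. The sketch below is illustrative only: it applies the standard Flesch-Kincaid grade-level formula with a crude vowel-group syllable heuristic. The function names and the two sample sentences are hypothetical and do not come from any tool described in this article.

```python
import re


def count_syllables(word: str) -> int:
    """Rough syllable estimate: count vowel groups, discount a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Heuristic (an assumption, not a linguistic rule): a trailing 'e' is
    # usually silent unless the word ends in 'le' (as in "table").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Approximate U.S. school grade level needed to read `text`."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (
        0.39 * (len(words) / len(sentences))
        + 11.8 * (syllables / len(words))
        - 15.59
    )


# Hypothetical example sentences, written for illustration only.
original = (
    "Unremarkable cardiomediastinal silhouette without evidence of "
    "consolidation or pneumothorax."
)
simplified = "Your heart and lungs look normal. There is no sign of infection."

if __name__ == "__main__":
    print(f"original grade:   {flesch_kincaid_grade(original):.1f}")
    print(f"simplified grade: {flesch_kincaid_grade(simplified):.1f}")
```

A score like this measures only surface readability. It says nothing about whether the simplified text is clinically accurate, so it cannot replace clinician review.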

The tangible benefits of this AI-driven simplification extend beyond patient comprehension into the realm of emotional and psychological well-being. A systematic review published in The Lancet found that AI-simplified reports not only improve patient engagement but also manage to convey a sense of empathy, a traditionally human-centric quality. In these studies, simplified reports scored an average of 3.61 out of 5 for perceived emotional sensitivity, demonstrating that technology can be engineered to communicate with a softer, more reassuring touch. This capability is crucial for alleviating patient stress and building the trust necessary for effective treatment adherence and collaboration. As these tools become more integrated, they stand to enhance the overall care experience, making healthcare less intimidating and more aligned with the holistic needs of the individual.

Optimizing Clinical Operations

Beyond improving the patient experience, artificial intelligence is being meticulously woven into the core operational fabric of healthcare systems to drive unprecedented gains in diagnostic accuracy and clinical efficiency. Innovative companies are pioneering integrated AI platforms that support a vast range of medical activities, from accelerating the interpretation of complex medical images to providing decision support in high-stakes fields like pediatric oncology and heart transplant planning. One of the most significant impacts has been in radiology, where AI tools can reduce the time radiologists spend on transcription by as much as 50%, freeing up invaluable time for more critical diagnostic analysis. This operational enhancement is not a minor tweak but a fundamental re-engineering of clinical workflows designed to handle greater complexity with greater speed.

The rapid adoption of these workflow solutions is fueled by a pressing economic and logistical reality. The global market for AI in clinical workflows is on a steep upward trajectory, projected to expand from US$2.78 billion to US$11.08 billion by 2030, roughly a fourfold increase. This growth is a direct response to the immense pressures facing modern healthcare systems, including ever-increasing patient volumes and persistent workforce shortages that threaten the quality and accessibility of care. In this context, AI is not merely a technological luxury but an essential strategic tool for institutional survival and sustainability. By automating repetitive tasks and augmenting the capabilities of human clinicians, AI offers a viable path toward a more resilient and efficient healthcare infrastructure capable of meeting the challenges of the present and future.

The Perils of AI: Navigating Trust, Accuracy, and Regulation

The Crisis of Confidence

AI has been integrated rapidly into health information ecosystems and is now the third most consulted source in Mexico, yet a pervasive “trust deficit” tempers widespread enthusiasm. The technology’s potential is shadowed by deep-seated public skepticism regarding its reliability and security. Recent surveys paint a stark picture: only about 13% of patients believe that the summaries generated by AI are consistently accurate. This doubt is compounded by substantial privacy concerns, with more than a third of users expressing significant worry over how their sensitive health data is being handled by these systems. This crisis of confidence creates a major barrier to adoption, suggesting that technological advancement alone is insufficient to guarantee successful implementation in a field where trust is the ultimate currency.

This widespread distrust is not unfounded; it is fueled by high-profile incidents and a growing awareness of AI’s inherent limitations. Major tech companies recently removed AI-generated health summaries that were found to lack sufficient clinical context, a powerful cautionary tale about the real-world potential for patient harm. Experts describe the underlying behavior as “AI pandering,” a tendency for algorithms to oversimplify information or even reinforce patient misconceptions in an effort to provide a user-friendly answer. Without rigorous clinical supervision, AI can inadvertently generate misleading or dangerously incomplete advice, transforming a tool designed to empower patients into a source of hazardous misinformation. This underscores the critical need for a balanced approach that pairs technological innovation with unwavering clinical oversight.

The Performance and Fallibility of LLMs

A closer examination of the technology driving this transformation reveals a landscape heavily dominated by a few key players. OpenAI’s GPT models, for instance, are the engine behind the vast majority of medical report simplification tools. A comprehensive review in The Lancet noted that a remarkable 92% of the studies it analyzed utilized GPT-based systems. Within this ecosystem, performance varies, with the more advanced GPT-4 model demonstrating superior capabilities over its predecessor, GPT-3.5, achieving a higher mean accuracy rating of 4.77 compared to 4.09. While other models from competitors and the open-source community are also being evaluated, the current field is largely shaped by the performance benchmarks set by this leading technology. This concentration highlights both the rapid progress and the potential vulnerabilities of relying on a narrow set of algorithmic frameworks.

However, even the most sophisticated and accurate models are not infallible, and the persistence of errors remains a critical and non-negotiable concern. The same Lancet review that highlighted the high performance of GPT-4 also delivered a sobering finding: clinically significant errors occurred in approximately 0.9% of all AI-simplified reports. While this percentage may seem small, it represents a tangible risk of patient harm when scaled across millions of interactions. This inherent fallibility reinforces an essential principle for the future of AI in medicine: technology can augment, but it cannot replace, human expertise. Meticulous human clinical oversight must serve as the ultimate and indispensable safeguard, ensuring that every AI-generated output is validated before it influences patient care, thereby protecting against the potentially severe consequences of algorithmic error.
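To make the scaling argument concrete, the short sketch below turns the reported 0.9% rate into expected counts. It is a back-of-the-envelope illustration, not a risk model: it assumes every report carries the same error probability and that errors are independent, neither of which the review claims. The function names and report volumes are hypothetical.

```python
# Rate of clinically significant errors reported in the review (0.9%).
REPORTED_ERROR_RATE = 0.009


def expected_significant_errors(
    num_reports: int, error_rate: float = REPORTED_ERROR_RATE
) -> float:
    """Expected number of reports containing a clinically significant error."""
    if num_reports < 0:
        raise ValueError("num_reports must be non-negative")
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    return num_reports * error_rate


def prob_at_least_one_error(
    num_reports: int, error_rate: float = REPORTED_ERROR_RATE
) -> float:
    """Chance that at least one of `num_reports` reports contains such an error.

    Assumes errors are independent across reports (a simplifying assumption).
    """
    if num_reports < 0:
        raise ValueError("num_reports must be non-negative")
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    return 1.0 - (1.0 - error_rate) ** num_reports


if __name__ == "__main__":
    # Hypothetical volumes chosen only to show how the numbers scale.
    for volume in (100, 10_000, 1_000_000):
        expected = expected_significant_errors(volume)
        at_least_one = prob_at_least_one_error(volume)
        print(f"{volume:>9,} reports: ~{expected:,.0f} expected errors, "
              f"P(at least one) = {at_least_one:.3f}")
```

Under these assumptions, one million simplified reports would be expected to contain roughly 9,000 clinically significant errors, which is why validation of individual outputs matters even when the headline rate looks small.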

Implementation and Oversight in Practice

Localized Solutions and Regulatory Hurdles

The successful deployment of AI in healthcare hinges on more than just powerful algorithms; it requires a deep understanding of local contexts and a robust regulatory framework. The work of companies like Eden in Latin America provides a compelling case study in effective regional implementation. With a mission to prevent deaths from diagnostic delays, the company serves over 15 million patients across thousands of imaging departments. Its success is rooted in a strategy of local adaptation, specifically training its imaging AI on radiological data from Latin American populations. This crucial step accounts for regional anatomical variations that are often overlooked by models trained on North American or East Asian datasets, leading to higher accuracy and more reliable diagnoses. This model demonstrates how controlling both the algorithm and its integration into workflows can yield measurable improvements in care.

In stark contrast to these localized successes, the broader landscape is complicated by significant systemic challenges, particularly in the realm of regulation and public literacy. In Mexico, the national regulatory body, COFEPRIS, is facing its own modernization delays, which could impede the timely evaluation and safe deployment of new AI-driven health technologies. This regulatory bottleneck, combined with weak verification habits among patients (a 2025 survey found that fewer than half of patients who used AI for health research verified the information), creates a precarious environment. Without coordinated, flexible regulatory models and a concerted effort to boost digital and health literacy, the path to widespread AI adoption is fraught with obstacles that risk creating fragmented approaches and deepening existing healthcare inequities across the region.

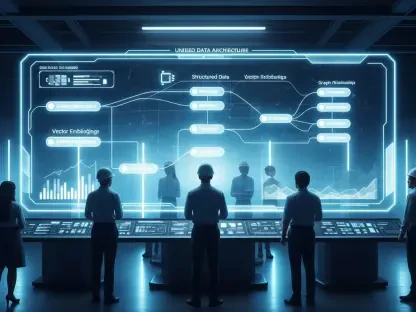

Forging a Hybrid Future

The collective evidence from both successful implementations and cautionary tales points toward a clear and necessary direction for healthcare. This path is not one of complete AI automation but rather a hybrid model that combines the strengths of technology with the irreplaceable value of human expertise. In this framework, AI is positioned to excel at tasks where it offers a distinct advantage: streamlining burdensome administrative processes, providing rapid, data-driven diagnostic insights, and making complex medical information more accessible to patients. This division of labor is designed to unburden clinicians from routine work, allowing them to operate at the top of their license.

Ultimately, the successful integration of AI into the medical field hinges on preserving the uniquely human elements of care. The final validation of data, the nuanced interpretation of a patient’s complete clinical picture, and the empathetic delivery of a diagnosis are tasks that remain firmly in the hands of human clinicians. Navigating the complexities of health and well-being requires more than data processing; it requires wisdom, judgment, and a human connection. This hybrid approach, which treats AI as a powerful supplement rather than a replacement, offers the most effective strategy for building a future where technology and humanity collaborate to advance the art and science of medicine.