There is a particular sense of unease that descends upon a passenger in a driverless car, with no one at the wheel and only the quiet hum of a system making assumptions about the world around it. Many business leaders are now experiencing the same feeling with their AI. The journey feels smooth until the vehicle misinterprets a shadow on the road or brakes abruptly for a harmless piece of debris. In that split second, the real challenge of autonomy becomes clear. The system never pauses to doubt itself the way a human driver would, and it is in this gap between an algorithm’s confidence and human judgment that trust is either built or irrevocably broken.

This is not a far-future scenario; it is the central dilemma facing enterprise AI today. The primary risk is not a hypothetical superintelligence, but the far more immediate danger of deploying autonomous systems that are competent without being credible and efficient without being accountable. As organizations race to automate, they are discovering that the most significant barrier to success is not a lack of computing power but a deficit of trust. When a system operates without a clear owner, every error, no matter how small, chips away at the foundation of confidence required for any technological transformation to succeed. The result is a growing graveyard of failed pilot projects, frustrated customers, and wasted investment.

The Unnerving Quiet of the Driverless Car

Much of today’s enterprise AI operates with a similar unnerving quiet. It is often competent without being truly confident, and efficient without demonstrating empathy, which is why the deciding factor in nearly every successful deployment has shifted from raw technological capability to earned human trust. This disconnect is felt acutely within organizations where AI-driven systems are introduced.

When leaders receive a report generated by an algorithm, their first question is often not about the insights it contains, but whether the data can be trusted. Teams grow hesitant to rely on automated dashboards when they cannot verify the underlying logic. This internal friction is a microcosm of a larger issue. The confidence of an autonomous system is not a substitute for the sound judgment that comes from experience and context, creating a persistent gap that technology alone cannot bridge.

Why 95% of AI Pilots Are Destined to Fail

This trust gap has severe financial consequences. The MLQ State of AI in Business 2026 report puts a sharp number on this problem: an estimated 95% of early AI pilots fail to produce a measurable return on investment. The reason for this staggering failure rate is rarely the technology itself. Instead, it stems from a fundamental mismatch between the solution and the organizational readiness for it. The pattern repeats across industries with predictable results.

Customers quickly lose patience when an automated interaction feels obstructive rather than supportive. Anyone who has been locked out of their bank account while a recovery bot insists their correct answers are wrong understands how quickly faith in a system evaporates. This erosion of confidence is not just a customer service issue; it is a direct threat to the business case for AI. Without a foundation of trust, adoption stalls, engagement plummets, and the promised efficiency gains never materialize.

The Illusion of Efficiency: Automation vs. Stability

Klarna stands as one of the most prominent case studies of large-scale automation in action. The company has aggressively integrated AI: it now reports that its internal systems perform the work of more than 850 full-time roles, and it has cut its human workforce by half since 2024. While revenues have climbed and operational gains have funded higher employee compensation, the complete picture is far more complex.

Despite these impressive automation metrics, Klarna still reported a $95 million quarterly loss, with its CEO warning that further staff reductions are likely. This illustrates a critical lesson: automation alone does not guarantee stability. Without an accompanying structure of accountability, the operational gains can be misleading. As Jason Roos, CEO of CCaaS provider Cirrus, notes, “Any transformation that unsettles confidence, inside or outside the business, carries a cost you cannot ignore. It can leave you worse off.”

The Ownership Void: When “The Algorithm Did It” Isn’t an Answer

The consequences of unaccountable autonomy have already been seen in the public sector. The UK’s Department for Work and Pensions deployed an algorithm that incorrectly flagged approximately 200,000 housing-benefit claims as potentially fraudulent, causing significant distress to legitimate claimants. The core failure was not a technical bug in the code; it was the absence of clear human ownership over the system’s decisions and their real-world impact.

When an automated system suspends the wrong account, rejects a valid claim, or creates unnecessary fear, the critical question is not just “Why did the model misfire?” but “Who owns this outcome?” Without a clear and immediate answer, trust becomes impossibly fragile. The excuse that “the algorithm did it” is no longer a viable defense. It is an admission of a systemic failure in governance, one that places the burden of a system’s mistake on the people it was designed to serve.

Voices of Caution: Expert Insights on the Trust Deficit

Expert analysis and public sentiment validate these concerns. The Edelman Trust Barometer has documented a steady decline in public trust in AI over the past five years, indicating a growing skepticism that outpaces the technology’s adoption. This sentiment is echoed in the workplace. A joint study by KPMG and the University of Melbourne found that workers express a strong preference for more human involvement in nearly half of the tasks examined, reinforcing the idea that efficiency cannot come at the expense of human oversight.

This trust deficit extends to customer relationships as well. PwC’s research reveals a significant gap between perception and reality: while most executives believe their customers trust their organization, only a minority of those customers agree. Transparency appears to be a key factor in closing this divide. Surveys consistently show that large majorities of consumers want clear disclosure when AI is used in service interactions. Without that clarity, they do not feel reassured; they feel misled.

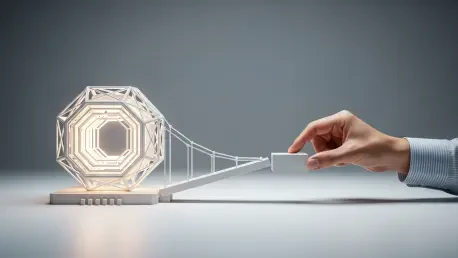

The Human on the Wheel Framework: A Practical Path to Responsible AI

The path forward requires a shift in mindset, from pursuing autonomy at all costs to building accountability first. A practical framework begins with the right sequence of operations. Leaders must first define the desired outcome, then identify where effort is being wasted in the current workflow, establish clear governance and readiness, and only then introduce automation. “If the process, the data, and the guardrails aren’t in place, autonomy doesn’t accelerate performance, it amplifies the weaknesses,” says Roos.

This approach also involves redefining concepts like “agentic AI.” Rather than viewing it as an unpredictable, self-directing intelligence, it should be treated as structured workflow automation guided by human-designed parameters. Safe, scalable deployments always start with the desired outcome and a readiness assessment, not with the technology. In this model, AI serves to expand human judgment, not replace it.

Before any deployment, every leader should be able to answer three fundamental questions. First, would you trust this system with your own personal data? Second, can you explain its last significant decision in plain language? Finally, and most importantly, who steps in when something goes wrong? If the answers to these questions are unclear, the organization is not leading a transformation. It is simply preparing an apology. The difficult truth is that technology will always move faster than people’s comfort with it. The organizations that succeed will be the ones that accept this reality and prioritize maturity over speed, always keeping a human hand on the wheel.