The intricate process of transforming a simple idea into a physical object has long been guarded by the complex language of computer-aided design, but a new system now allows creation to begin with just a few words. A collaborative effort by researchers from MIT, Google DeepMind, and Autodesk Research has produced an AI-driven robotic assembly system that marks a significant step toward democratizing the creation of physical objects. This review explores the evolution of this technology, its key features, its performance, and its impact on design and fabrication, with the aim of giving a thorough understanding of the system, its current capabilities, and its potential for future development.

An Introduction to Language-Driven Fabrication

The innovative framework for AI-driven robotic assembly is built on the core principle of using natural language to overcome the high barrier to entry associated with traditional CAD software. For decades, bringing a digital design to life has required specialized skills and a steep learning curve, often stifling the creative brainstorming process for non-experts. This system dismantles that barrier by allowing users to issue simple text prompts, such as “build a chair,” to initiate the entire design-to-fabrication pipeline.

By prioritizing intuitive interaction, the system marks a pivotal development in the broader landscape of human-computer interaction and digital manufacturing. It offers a more accessible and sustainable alternative to CAD-driven workflows for turning concepts into physical reality. Instead of focusing on the minutiae of vertices and vectors, a user can concentrate on function and form, collaborating with the AI to achieve their vision. This approach not only streamlines prototyping but also supports a more circular use of materials, because the components it builds with are reusable.

Core Technology and System Workflow

Dual Generative AI Models for Design Interpretation

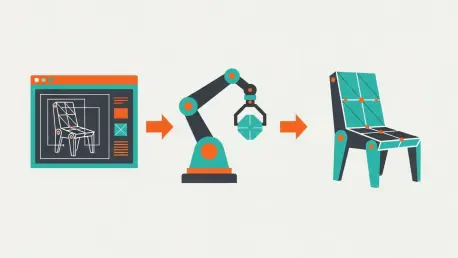

At the heart of the system lies a two-stage AI process that translates a user’s abstract request into a concrete, buildable blueprint. The workflow begins when the first generative model interprets a text prompt and produces an initial 3D geometric mesh of the object. This initial output, however, is a monolithic digital sculpture: it lacks the component-level detail a robot needs to assemble it. The central challenge this research addresses is segmenting that form into functional, manufacturable parts.

This step is handled by the second model, a vision-language model (VLM). The VLM acts as the “eyes and brain” of the operation, analyzing the 3D mesh and reasoning about the object’s intended function. Drawing on what it learned in training, it identifies which surfaces serve critical purposes (such as the seat or backrest of a chair) and maps parts from a predefined component library onto those areas. It outputs its reasoning in plain text before applying those decisions to the digital model, so that the final design is meant to be functionally practical and not merely geometrically sound.
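To make the hand-off from the VLM to the digital model more concrete, here is a minimal Python sketch under stated assumptions. The review does not specify the VLM’s output format, so the JSON shape (a `reasoning` string plus a `panels` map from surface IDs to functional labels), the `Surface` class, and the `apply_vlm_decision` function are all hypothetical. The sketch only illustrates the described pattern: the model’s plain-text rationale is kept for the user, and its decisions are then applied to named regions of the mesh.

```python
import json
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Surface:
    # Hypothetical representation of one region of the generated mesh.
    surface_id: str
    label: Optional[str] = None  # functional role assigned by the VLM, if any
    has_panel: bool = False      # whether a library panel covers this region


def apply_vlm_decision(surfaces: List[Surface], vlm_reply: str) -> Tuple[str, List[Surface]]:
    """Apply an assumed JSON reply of the form
    {"reasoning": "...", "panels": {"<surface_id>": "<functional label>", ...}}.
    Listed surfaces receive a panel and a label; all others receive neither."""
    reply = json.loads(vlm_reply)
    panels = reply["panels"]
    unknown = set(panels) - {s.surface_id for s in surfaces}
    if unknown:
        # Reject decisions that refer to geometry the mesh does not contain.
        raise ValueError(f"unknown surfaces in reply: {sorted(unknown)}")
    for s in surfaces:
        s.label = panels.get(s.surface_id)
        s.has_panel = s.surface_id in panels
    # Return the plain-text reasoning so it can be shown to the user with the result.
    return reply["reasoning"], surfaces


# Usage with a hand-written reply standing in for real VLM output.
mesh = [Surface("s1"), Surface("s2"), Surface("s3")]
reply = json.dumps({
    "reasoning": "A chair needs a surface to sit on and one to lean against.",
    "panels": {"s1": "seat", "s2": "backrest"},
})
why, mesh = apply_vlm_decision(mesh, reply)
print(why)
print([(s.surface_id, s.label) for s in mesh if s.has_panel])
# [('s1', 'seat'), ('s2', 'backrest')]
```

Validating surface IDs before applying anything is a deliberate choice in this sketch: a generative model can name geometry that does not exist, and rejecting such a reply is safer than silently ignoring part of it.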

Human-in-the-Loop Collaborative Design

A defining feature of this system is its emphasis on an interactive co-design process, positioning the AI not as an autonomous creator but as a collaborative partner. After the initial design is generated, users can provide iterative feedback through follow-up commands to refine the output. For example, a user might request to “only use panels on the backrest, not the seat,” allowing them to steer the design according to their specific aesthetic or functional preferences.
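One way to picture a follow-up command such as “only use panels on the backrest, not the seat” is as a filter over the current design. The sketch below is a deliberate simplification in Python: it assumes the command has already been reduced to a set of allowed functional labels, whereas the system described here accepts the feedback as free text. `Placement`, `refine`, and the revision history are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Placement:
    # Hypothetical record: one panel covering a surface with a functional label.
    surface_id: str
    label: str


def refine(placements: List[Placement], allowed_labels: Iterable[str]) -> List[Placement]:
    """Return a new design keeping only panels on surfaces the user still allows.
    Assumes the free-text command was already reduced to a set of labels."""
    allowed = set(allowed_labels)
    return [p for p in placements if p.label in allowed]


# Each follow-up command appends a new revision instead of overwriting the last one.
history = [[Placement("s1", "seat"), Placement("s2", "backrest")]]
# "only use panels on the backrest, not the seat"
history.append(refine(history[-1], {"backrest"}))
print(history[-1])  # [Placement(surface_id='s2', label='backrest')]
```

Keeping every revision rather than overwriting the design lets a user step back if a refinement goes too far, which suits the iterative dialogue this section describes.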

This human-in-the-loop approach lets non-experts explore the design space with confidence and creativity. Because users steer the outcome themselves, they also gain a sense of ownership over the final product. By facilitating this dialogue between human and machine, the system turns design from a rigid, technical task into a fluid and engaging creative endeavor.

Robotic Assembly and Sustainable Prototyping

Once the user finalizes the digital blueprint, the system moves from the virtual to the physical realm. A robotic arm constructs the object by picking and placing prefabricated, reusable components according to the finalized plan, linking the digital design phase directly to automated physical construction.
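The review does not describe how the robot sequences its moves. One simple heuristic for pick-and-place assembly is to build bottom-up, so every part lands on parts already in place. The following Python sketch assumes that heuristic; `Part`, its `kind` values (“frame” and “panel”), and `assembly_order` are hypothetical, and the heights are made-up example values rather than measurements from the system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Part:
    # Hypothetical entry in the finalized assembly plan.
    name: str
    kind: str   # illustrative component types: "frame" or "panel"
    z: float    # assumed height of the part's lowest point in the final pose (m)


def assembly_order(parts: List[Part]) -> List[Part]:
    # Bottom-up heuristic (an assumption, not the published method): lower parts first,
    # and at equal height a frame member before the panel it is assumed to carry.
    return sorted(parts, key=lambda p: (p.z, p.kind == "panel"))


plan = [
    Part("seat panel", "panel", 0.45),
    Part("back panel", "panel", 0.50),
    Part("front leg", "frame", 0.0),
    Part("seat frame", "frame", 0.45),
]
for step, part in enumerate(assembly_order(plan), start=1):
    print(f"{step}. pick {part.kind} from stock, place as {part.name}")
```

The secondary sort key (`False` sorts before `True`) places a frame member ahead of any panel at the same height, since in this sketch a panel is assumed to rest on its frame.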

Reusability is a cornerstone of the system’s design and makes it a more sustainable option for prototyping and manufacturing. Traditional prototyping often generates significant material waste, since each physical iteration tends to consume new material. In contrast, this framework allows components to be disassembled and reassembled into entirely new creations. This modular, circular approach can substantially reduce the waste of iterative design, making rapid iteration both economically and ecologically viable.
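The reuse cycle can be modeled as a shared stock of components that each build draws from and each disassembly returns to. The Python sketch below uses `collections.Counter` as that stock; the component names, the quantities in each bill of materials, and the `build`, `disassemble`, and `can_build` helpers are illustrative assumptions, not details of the published system.

```python
from collections import Counter
from typing import Dict


def can_build(bom: Dict[str, int], stock: Counter) -> bool:
    # Buildable only if the stock covers every line of the bill of materials (BOM).
    return all(stock[part] >= qty for part, qty in bom.items())


def build(bom: Dict[str, int], stock: Counter) -> Counter:
    # Building locks the design's components into the finished object.
    if not can_build(bom, stock):
        raise ValueError("not enough components in stock")
    return stock - Counter(bom)


def disassemble(bom: Dict[str, int], stock: Counter) -> Counter:
    # Disassembly returns every component to the shared stock for reuse.
    return stock + Counter(bom)


stock = Counter({"frame": 12, "panel": 3})  # hypothetical starting inventory
chair = {"frame": 8, "panel": 2}            # hypothetical bills of materials
table = {"frame": 12, "panel": 1}

stock = build(chair, stock)
print(can_build(table, stock))  # False: most frames are in the chair
stock = disassemble(chair, stock)
print(can_build(table, stock))  # True: the same parts can now become a table
```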

Innovations in Functional Reasoning and Interaction

This system illustrates a broader shift in robotics away from explicit programming and toward intuitive, natural-language interaction. Its most significant innovation is the VLM’s capacity for functional reasoning: it can account for why a particular design choice is needed. It infers, for example, that a chair needs a surface for sitting and a support for leaning, which goes beyond placing parts by geometry alone. Connecting form to function in this way reflects an emerging trend toward more capable and collaborative AI systems.

Applications in Prototyping and On-Demand Manufacturing

The real-world applications of this technology are both immediate and far-reaching. In the near term, it could serve as an invaluable tool for rapid prototyping in complex fields like aerospace and architecture, where iterating on physical models is both costly and time-consuming. Engineers and designers could quickly test and refine structural concepts, accelerating the innovation cycle significantly.

Looking further ahead, the long-term vision is to integrate such systems into homes and workshops for on-demand fabrication. Imagine designing and building customized furniture tailored to a specific space without ever leaving the house. This capability could reshape supply chains by shifting some manufacturing from centralized factories to localized, user-driven creation, reducing the need to ship bulky, fully assembled products around the globe.

Performance Evaluation and System Challenges

The system’s effectiveness was validated in user studies, where its functionally aware designs were overwhelmingly preferred. Compared against designs from two alternative algorithms, one placing panels on all horizontal surfaces and another placing them randomly, the VLM-generated object was chosen by over 90 percent of participants. The model also explained its design choices in plain text, indicating that its decisions were grounded in the object’s function rather than made arbitrarily.
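For readers curious what the two comparison algorithms might look like, here is a rough Python sketch of both. The review names only their rules (panels on all horizontal surfaces; panels placed randomly); the `Face` class, the normal-based test for “horizontal,” the 0.9 threshold, and the fixed panel count `k` for the random baseline are assumptions made for illustration.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Face:
    # Hypothetical mesh face with a unit normal; the z axis points up.
    face_id: str
    normal: Tuple[float, float, float]


def horizontal_baseline(faces: List[Face], tol: float = 0.9) -> List[str]:
    # Panel on every face whose normal is close to vertical, i.e. a horizontal face,
    # regardless of what the face is for. The 0.9 threshold is an assumption.
    return [f.face_id for f in faces if abs(f.normal[2]) >= tol]


def random_baseline(faces: List[Face], k: int, seed: Optional[int] = None) -> List[str]:
    # Panel on k distinct faces drawn uniformly at random.
    rng = random.Random(seed)
    return [f.face_id for f in rng.sample(faces, k)]


chair = [
    Face("seat_top", (0.0, 0.0, 1.0)),
    Face("seat_bottom", (0.0, 0.0, -1.0)),
    Face("backrest_front", (0.0, -1.0, 0.0)),
    Face("leg_side", (1.0, 0.0, 0.0)),
]
print(horizontal_baseline(chair))  # ['seat_top', 'seat_bottom']
print(random_baseline(chair, k=2, seed=0))
```

In this toy chair, the horizontal rule covers both sides of the seat but never the backrest, which illustrates how a placement rule that ignores function can miss a surface a person actually uses.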

Despite these successes, the technology faces challenges on its path to widespread adoption. The primary hurdles include scaling the system to handle more complex designs and a vastly expanded component library. Transitioning the platform from a controlled research prototype into a robust and reliable tool for general use will require further development to ensure it can manage a wider range of user requests and material constraints.

The Future of Collaborative Fabrication

The trajectory of this technology points toward a future defined by more seamless and natural human-robot collaboration. Future advancements will likely focus on enhancing the AI’s reasoning capabilities and expanding its understanding of physical principles, enabling it to assist in more complex and creative tasks. This research lays the groundwork for systems that are not just tools, but true creative partners.

The ultimate goal is to develop AI that people can communicate and “make things together with” as fluidly as they would with another person. As these systems evolve, they could unlock new levels of creativity and accessibility in manufacturing. This work represents a foundational step in that direction, pointing toward an era in which far fewer technical barriers stand between an idea and a physical object.

Concluding Assessment

The successful integration of dual generative AI models, human-in-the-loop feedback, and robotic assembly marks a pivotal achievement in digital fabrication. The system demonstrates a viable path toward making design and manufacturing more intuitive, sustainable, and accessible to a broader audience. Its ability to interpret natural language and reason about an object’s function represents a significant step beyond previous automated systems. The research provides a compelling proof of concept for a future in which collaborative creation with intelligent machines becomes standard practice, fundamentally altering how we design and produce the objects that shape our world.