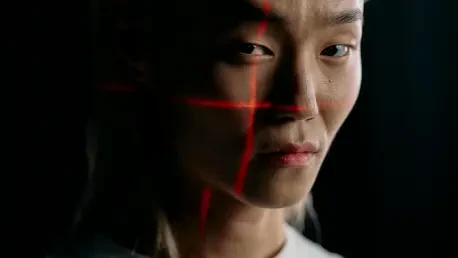

What happens when a machine tries to catch a liar, but its judgment is more flawed than a human’s gut instinct? Artificial intelligence (AI) personas, crafted to mimic human interaction, are being tested for their ability to detect deception, and they often stumble in ways that raise serious doubts. A large-scale academic study has exposed these shortcomings, showing that AI struggles to weigh truth against lies with the nuance humans take for granted. This article examines why the machines falter and what that means for their role in high-stakes scenarios.

The Weight of AI in Truth-Seeking

The promise of AI detecting human deception has captured imaginations across industries, from law enforcement seeking reliable interrogation tools to corporations aiming to vet candidates. If machines could accurately spot lies, they might transform how trust is assessed in critical situations. However, the stakes are high: misjudgments could lead to wrongful accusations or undetected threats, so the reliability of such technology is a societal concern, not just a technical one. That reliability deserves scrutiny as AI becomes part of everyday decision-making.

The urgency to understand AI’s limits stems from its growing use in sensitive areas. Imagine a security system flagging an innocent person as deceptive because of a flawed algorithm, or a hiring manager rejecting a truthful candidate because of an AI’s skewed analysis. These scenarios show why the accuracy of deception detection tools is not merely an academic question; it is a matter of justice and fairness in real-world applications.

Diving into AI’s Deception Detection Flaws

Recent research involving more than 19,000 AI participants (personas) across 12 experiments paints a stark picture of the technology’s struggles. The findings reveal a pronounced lie bias: the AI personas correctly identified lies 85.8% of the time but recognized truthful statements only 19.5% of the time. In other words, they labeled most statements as lies, whether or not they were. This tendency to assume deception contrasts sharply with human behavior: people tend to default to believing others are honest, a pattern described by Truth-Default Theory.
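To see why a high hit rate on lies is misleading, it helps to combine the two reported figures into a single overall accuracy. The sketch below is illustrative only: it assumes a hypothetical mix of truthful and deceptive statements, and the helper name `overall_accuracy` and its `lie_share` parameter are hypothetical, not taken from the study. With an even 50/50 mix, overall accuracy is 0.5 × 85.8% + 0.5 × 19.5%, or about 53%, barely above the 50% a judge would score by calling every single statement a lie.

```python
def overall_accuracy(lie_acc: float, truth_acc: float, lie_share: float) -> float:
    """Expected fraction of correct verdicts (hypothetical helper).

    lie_acc   -- fraction of lies correctly judged to be lies
    truth_acc -- fraction of truths correctly judged to be truths
    lie_share -- assumed fraction of all statements that are lies
    """
    # Weighted average of the two per-class accuracies.
    return lie_share * lie_acc + (1.0 - lie_share) * truth_acc


# Per-class accuracies reported for the AI personas.
LIE_ACC = 0.858
TRUTH_ACC = 0.195

if __name__ == "__main__":
    for share in (0.5, 0.2):  # hypothetical mixes of lies vs. truths
        acc = overall_accuracy(LIE_ACC, TRUTH_ACC, share)
        print(f"lies = {share:.0%} of statements -> overall accuracy {acc:.1%}")
    # Baseline: a judge that calls every statement a lie.
    print(f"always 'lie' at 50/50 -> {overall_accuracy(1.0, 0.0, 0.5):.1%}")
```

When truthful statements outnumber lies, the same bias drags overall accuracy down further: at an assumed 20% lie share, the figure falls to about 33%.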

Context plays a significant role in these inconsistencies. In structured settings such as brief interrogations, AI’s performance comes closer to human levels, showing some promise. In casual scenarios, such as conversations about friends, it mirrors the human truth bias yet still falls short in overall accuracy. Whether the AI analyzes audiovisual clips or audio-only recordings, the setting strongly sways its judgment, exposing its inability to adapt consistently across varied human interactions.

A deeper issue lies in AI’s lack of emotional depth. Unlike humans, who pick up on subtle social cues and unspoken emotions, machines operate on patterns and data, missing the intuitive “humanness” that often guides lie detection. This gap suggests that while AI can crunch numbers and analyze speech, it struggles to grasp the messy, layered nature of human communication, leaving its assessments incomplete.

Voices of Caution from the Field

Experts leading this research have sounded a clear alarm about AI’s readiness for deception detection. David Markowitz, a key figure in the study, bluntly stated, “We’re not there yet.” His words echo a broader concern within academic circles that AI’s current lean toward labeling statements as lies could lead to dangerous errors. The risk of false positives, in which truthful individuals are flagged as deceptive, looms large, especially in legal proceedings or security checks where lives and reputations hang in the balance.

Real-world implications add weight to these warnings. Consider a case from parallel studies where an AI system misjudged a sincere testimony as a lie, nearly derailing a critical investigation. Such anecdotes underscore the technology’s immaturity when pitted against human judgment, which, despite its own flaws, often outperforms AI in nuanced scenarios. These insights push for a hard look at whether machines should even be trusted with such delicate tasks at this stage.

Mapping AI’s Road Ahead

Despite the hurdles, there’s a path forward for refining AI’s role in detecting deception, starting with better training. Developers need to expose models to a wider array of human interactions, moving beyond rigid formats like interrogations to include everyday dialogues. This broader exposure could help reduce the lie bias and make AI more adaptable, ensuring it doesn’t jump to conclusions based on limited contexts.

Another critical step involves recalibrating algorithms to balance their sensitivity to truth and deception. By integrating datasets that reflect human tendencies to assume honesty, AI might achieve a more even-handed approach, avoiding the pitfalls of overzealous lie detection. Additionally, focusing research on emotional intelligence—teaching machines to interpret subtle cues—could bridge the gap between cold data analysis and warm human insight, enhancing overall accuracy.

For now, practical caution is advised. Professionals in high-stakes fields like law enforcement should rely on human judgment as the primary tool, using AI only as a supplementary aid until its reliability improves. This measured approach protects against the current risks while allowing space for technological growth, ensuring that innovation doesn’t outpace accountability.

Reflecting on Lessons Learned

Taken together, efforts to understand AI’s capacity for detecting human deception reveal a technology full of potential yet riddled with flaws. The stark lie bias, inconsistent performance across contexts, and inability to match human intuition paint a picture of a tool unprepared for the complexities of truth-seeking. Each experiment and expert warning reinforces the conclusion that machines, as they stand, cannot shoulder the burden of such nuanced judgments.

The path ahead calls for deliberate action: refining algorithms, prioritizing emotional understanding, and limiting AI’s role in critical decisions until its reliability is established. As technology evolves, the focus must remain on blending data-driven precision with the human touch that defines honest connection. Only then might machines become true allies in unmasking deception, rather than sources of costly missteps.