A sharper way to ask the hard question

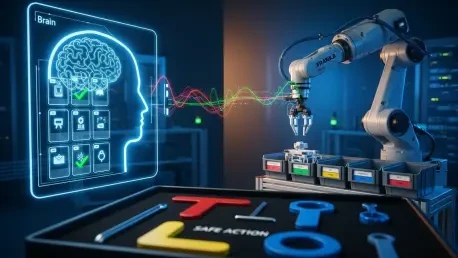

What if the leap in robot reliability came not from ever-larger models but from a smarter split between thinking and doing, one that keeps language plans on a short leash and feeds real-world results back into every choice the machine makes? The premise is blunt: let one system plan in human terms, let another act only within safe bounds, and connect them with signals that report what actually happened. A recent set of trials put concrete numbers on the idea: task completion rose about 17% over baselines, and on a Franka arm the system reached roughly 84% success across most tasks.

That outcome reframed the debate. Instead of chasing completeness in a single omnipotent model, the BrainBody-LLM approach argued that reliability emerges when high-level intent is constrained by executable actions and verified by outcomes. In effect, plan quality and safety improved because the planner was held accountable by the executor and the environment, not by a preference for longer prompts or bigger parameter counts.

Why this matters now

Home, lab, and factory instructions rarely arrive as clean formal scripts; they arrive messy, partial, and sometimes contradictory. Translating them into safe, executable actions remains the bottleneck, especially when perception is noisy and the world does not match a training set. Traditional policies answer with heavy engineering and rigid languages, but they struggle when tasks vary. LLMs can capture intent, yet in a physical setting, free-form outputs risk inventing actions or overlooking constraints.

The industry is already moving in a different direction: agentic language models that plan, call tools, and self-correct with feedback. Safety-first constraints have become table stakes, while closed-loop refinement is turning into a default. BrainBody-LLM operationalized those trends into a single pattern—the planner decomposes goals, the executor maps them onto a vetted action set, and feedback reconciles belief with reality. For builders, that trims brittle handoffs across modules. For researchers, it offers grounded generalization tests across simulation and hardware. For operators, it points to dependable autonomy under uncertainty, where a robot can adapt mid-task without becoming unpredictable.

The BrainBody-LLM design, tested

Modeled on the division of labor between the human brain and body, the architecture splits roles. The Brain LLM handles planning by breaking a goal such as “Eat chips on the sofa” into ordered, commonsense subgoals, keeping them abstract enough to avoid low-level control. The Body LLM executes by binding each subgoal to a fixed, safe action library. If a required action or object is not available, the Body flags “no available action/object” rather than bluffing with a guess. Closed-loop signals (success or failure, object detections, state checks) flow back to the Brain so it can refine or re-sequence steps.
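The Body's grounding step can be illustrated with a minimal sketch. Everything in it is hypothetical: the `ACTION_LIBRARY` contents, the `Subgoal` and `Grounded` types, and the `ground` function are illustrative names rather than the BrainBody-LLM implementation, and a plain dictionary lookup stands in for the Body LLM.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical vetted action library: action name -> objects it may act on.
ACTION_LIBRARY = {
    "walk_to": {"sofa", "kitchen", "table"},
    "grab": {"chips", "bowl"},
    "sit_on": {"sofa"},
}

# Explicit unavailability signal, mirroring the wording used in the article.
NO_ACTION = "no available action/object"


@dataclass(frozen=True)
class Subgoal:
    verb: str
    target: str


@dataclass(frozen=True)
class Grounded:
    command: Optional[str]  # None when grounding fails
    status: str             # "ok" or NO_ACTION


def ground(subgoal: Subgoal) -> Grounded:
    """Bind a subgoal to the library, or report unavailability explicitly."""
    allowed_targets = ACTION_LIBRARY.get(subgoal.verb)
    if allowed_targets is None or subgoal.target not in allowed_targets:
        # Never guess: surface the gap so the planner can revise.
        return Grounded(command=None, status=NO_ACTION)
    return Grounded(command=f"{subgoal.verb}({subgoal.target})", status="ok")


# A plan whose last step is outside the library (assumed example).
plan = [
    Subgoal("walk_to", "kitchen"),
    Subgoal("grab", "chips"),
    Subgoal("open", "fridge"),
]
results = [ground(s) for s in plan]
for subgoal, result in zip(plan, results):
    print(subgoal, "->", result.command, result.status)
```

The point of the design is that the third subgoal yields an explicit status instead of an invented command, which gives the planner something concrete to react to.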

Three mechanisms do most of the work. First, task decomposition produces human-like subgoals that structure the job without dictating motor commands. Second, action grounding forces strict alignment with feasible robot commands, making every move interpretable and auditable. Third, feedback corrects drift: when the expected state does not materialize, the system revises the step or order, recovering from mismatches instead of plowing ahead. Safety is baked in by design because the action library limits the surface area for unpredictable behavior.
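The feedback mechanism can be sketched as a small loop, under stated assumptions. The function `run_closed_loop`, the toy `execute` and `replan` callbacks, and the `max_revisions` cap are all hypothetical; in a real system the callbacks would wrap the executor and the planner LLM, and the revision policy would be richer than prepending one recovery step.

```python
from typing import Callable, Dict, List, Tuple


def run_closed_loop(
    plan: List[str],
    execute: Callable[[str], bool],
    replan: Callable[[str, List[str]], List[str]],
    max_revisions: int = 3,
) -> List[Tuple[str, bool]]:
    """Run steps in order; on failure, ask the planner to revise what remains."""
    queue = list(plan)
    log: List[Tuple[str, bool]] = []
    revisions = 0
    while queue:
        step = queue.pop(0)
        ok = execute(step)
        log.append((step, ok))
        if ok:
            continue
        if revisions >= max_revisions:
            # Stop rather than plow ahead after repeated mismatches.
            log.append(("halt", False))
            break
        revisions += 1
        queue = replan(step, queue)
    return log


# Toy world (assumed): the chips cannot be grabbed until they are located.
world: Dict[str, bool] = {"chips_located": False}


def execute(step: str) -> bool:
    if step == "find chips":
        world["chips_located"] = True
        return True
    if step == "grab chips":
        return world["chips_located"]
    return True


def replan(failed: str, remaining: List[str]) -> List[str]:
    # Hypothetical revision: locate the object, then retry the failed step.
    return ["find chips", failed] + remaining


log = run_closed_loop(
    ["walk to kitchen", "grab chips", "walk to sofa"], execute, replan
)
for entry in log:
    print(entry)
```

In this toy run the failed grab triggers one revision, the recovery step succeeds, and the original sequence resumes; the cap on revisions is one simple way to keep recovery from looping indefinitely.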

Evaluation spanned simulation and hardware. In VirtualHome, the team varied domestic chores to probe planning fidelity and recovery behavior. On a Franka Research 3 arm, the system operated amid perception noise, actuation latency, and execution errors. The results were consistent across both settings: task completion rose about 17% over strong baselines, and success reached about 84% on most real-world tasks. Notably, explicit “no available action/object” signaling prevented silent failures and triggered fast replanning, a small design choice with an outsized impact on predictability.

Signals from the field and lab

Across embodied AI studies, feedback-centric designs keep beating one-shot planners on long-horizon tasks. Systems that bind language to constrained action interfaces also show lower rates of unsafe or irrelevant outputs while preserving reasoning benefits. In this context, BrainBody-LLM did not emerge in a vacuum; it aligned with evidence that coupling planning to verifiable control improves robustness in the face of sensor noise and unexpected states.

Experts described the shift in blunt terms. “Closed-loop control is non-negotiable in robotics; it is what keeps systems honest,” one researcher said during a workshop. Another emphasized the match to agentic LLM patterns: “Planning, reflection, tool APIs, and episodic memory make sense in software; give them a constrained motor interface, and they make sense in robots, too.” The consensus extended to sensing: “Multimodality is the next frontier—3D vision, depth, and joint-level signals will push dexterity and context awareness.”

A small field vignette captured the approach in practice. During a table-clearing task, the Body reported that the target bowl was missing from the expected shelf. The Brain paused pickup steps, added a search-and-verify sequence, and only then resumed the original plan. That simple re-sequencing prevented a cascade of errors—no collisions, no wasted motions—showing how human-like adjustments emerge when planning is constrained and feedback is respected.

How to apply this pattern in your robot stack

Adopting the split begins with clean boundaries. Keep planning and execution separate: the planner outputs structured subgoals, the executor accepts only verified action tokens. Constrain and verify actions with a vetted library annotated with safety rules and preconditions. Require explicit unavailability signals and define fallback policies that re-plan rather than improvise. Close the loop by instrumenting success and failure outcomes, state deltas, and object detections, then feed those signals back at each step.
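One way to picture these boundaries is an executor that accepts only known action tokens, checks a precondition before acting, and reports a structured outcome with the state delta. This is a sketch under assumptions: `ActionSpec`, `LIBRARY`, `step`, the two gripper actions, and the status strings are hypothetical names chosen for illustration, and a dictionary of booleans stands in for real perception.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple

State = Dict[str, bool]


@dataclass(frozen=True)
class ActionSpec:
    name: str
    precondition: Callable[[State], bool]  # safety rule checked before acting
    effect: Callable[[State], State]       # simulated outcome of the action


# Hypothetical vetted library with preconditions attached to each action.
LIBRARY: Dict[str, ActionSpec] = {
    "open_gripper": ActionSpec(
        "open_gripper",
        precondition=lambda s: True,
        effect=lambda s: {**s, "gripper_open": True},
    ),
    "grasp": ActionSpec(
        "grasp",
        precondition=lambda s: s.get("gripper_open", False)
        and s.get("object_visible", False),
        effect=lambda s: {**s, "holding": True, "gripper_open": False},
    ),
}


def step(token: str, state: State) -> Tuple[State, Dict[str, Any]]:
    """Execute one verified token and return the new state plus a feedback record."""
    spec = LIBRARY.get(token)
    if spec is None:
        return state, {"token": token, "status": "unavailable", "delta": {}}
    if not spec.precondition(state):
        return state, {"token": token, "status": "precondition_failed", "delta": {}}
    new_state = spec.effect(state)
    # State delta: only the keys whose values changed.
    delta = {k: v for k, v in new_state.items() if state.get(k) != v}
    return new_state, {"token": token, "status": "success", "delta": delta}


state: State = {"object_visible": True}
reports = []
for token in ["grasp", "open_gripper", "grasp", "throw"]:
    state, report = step(token, state)
    reports.append(report)
    print(report)
```

Each record is what would be fed back to the planner: the first grasp is refused because the gripper is closed, and the unknown `throw` token is rejected outright instead of being improvised.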

Deployment benefits from staged evaluation. Start in simulation—VirtualHome or equivalents—to cover diverse chores and reproduce edge cases. Transfer to hardware incrementally with task families that reveal perception and timing cracks. Manage performance with caches for frequent plan motifs and distillation for latency-sensitive skills. Monitor feedback logs to spot escalating retries, and trigger a safe halt if contradictions repeat. Meanwhile, plan for richer grounding by adding 3D vision and depth cues to stabilize state estimation, and by exposing guarded joint-level primitives that improve dexterity without compromising safety.
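The retry monitoring described above could look roughly like the following. This is a minimal sketch, assuming that a "repeated contradiction" means the same step failing several times without an intervening success; the `RetryMonitor` class, its threshold, and the step names are hypothetical.

```python
from collections import Counter


class RetryMonitor:
    """Tracks failures per step and signals a safe halt when they pile up."""

    def __init__(self, max_failures_per_step: int = 3) -> None:
        self.max_failures = max_failures_per_step
        self.failures: Counter = Counter()

    def record(self, step: str, success: bool) -> bool:
        """Log one outcome; return True if the robot should halt safely."""
        if success:
            # A success clears the failure count for that step.
            self.failures.pop(step, None)
            return False
        self.failures[step] += 1
        return self.failures[step] >= self.max_failures


monitor = RetryMonitor(max_failures_per_step=3)
# Assumed feedback log: (step, success) pairs emitted by the executor.
outcomes = [
    ("grasp bowl", False),
    ("grasp bowl", False),
    ("open drawer", True),
    ("grasp bowl", False),
]
decisions = [monitor.record(step, ok) for step, ok in outcomes]
print(decisions)
```

The threshold is a tuning choice: a low value halts early and costs availability, while a high value tolerates more retries before an operator is involved.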

The broader takeaway was practical and forward-looking. BrainBody-LLM showed that splitting planning from execution and wiring in closed-loop feedback could make LLM-driven robots more reliable without making them less expressive. The gains (about 17% higher task completion and roughly 84% success on a real Franka arm) supported the claim that reliability arises when language plans are bounded by what machines can safely do and checked against what actually happened. The next steps point toward deeper multimodality, sharper action libraries, and tighter latency budgets, each an actionable lever for moving embodied AI from clever demos to dependable work.