When a wildfire jumps a ridge and smoke blinds cameras, a drone that learns in place can turn chaos into usable signal before networks choke on raw video and teams lose the minutes that save homes and lives. That urgency framed a new effort at The University of Alabama in Huntsville, where assistant professor Dr. Dinh Nguyen secured a $599,830 NSF grant to build secure multi‑modal federated learning for networks of unmanned aerial vehicles. The project runs through September 2028 and targets civilian operations rather than military missions: disaster response, environmental monitoring, infrastructure inspection, and cybersecurity support. Instead of shuttling gigabytes to a distant server, the approach trains models on board and shares only updates, conserving bandwidth while guarding privacy. If successful, it could shift drone AI from after‑action analysis to real‑time, resilient decision‑making in the air.

From Centralized Bottlenecks To Collaborative Airframes

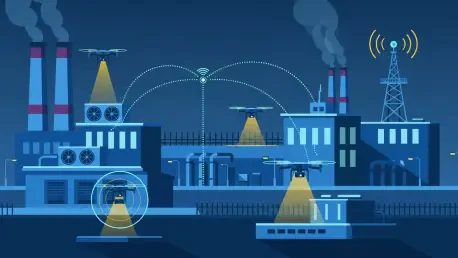

Traditional drone pipelines pull raw feeds from cameras, thermal sensors, and radios into a central cloud, where power-hungry models digest everything. That fits lab settings but buckles in the field: patchy links, limited spectrum, and battery budgets punish constant uplinks, while privacy rules restrict what can be sent at all. Nguyen’s framework rebalances the equation by moving training to the edge. Each drone tunes a local model on its own data (video, temperature, or network signals) and transmits only compressed weight updates. A coordinator aggregates the contributions into a global model, then broadcasts the improved model back to the fleet. The cycle is meant to shorten feedback loops, trim bandwidth, and reduce exposure of sensitive scenes, so mission teams can adapt faster as conditions evolve.
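The train-locally, share-updates, aggregate cycle described above can be sketched in a few lines of Python. This is a minimal illustration in the style of federated averaging, not the project's actual code: the toy linear model, the function names (`local_train`, `aggregate`, `run_round`), and the choice to weight each drone by its sample count are all assumptions made for the example.

```python
from typing import List, Tuple

Vector = List[float]
Sample = Tuple[Vector, float]  # (features, target)


def local_train(global_w: Vector, samples: List[Sample],
                lr: float = 0.05, epochs: int = 5) -> Vector:
    """Run a few SGD epochs of linear regression on one drone's data.

    Returns only the weight delta, which is what the drone would transmit.
    """
    w = list(global_w)
    for _ in range(epochs):
        for x, y in samples:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return [wi - gi for wi, gi in zip(w, global_w)]


def aggregate(global_w: Vector, deltas: List[Vector],
              counts: List[int]) -> Vector:
    """Coordinator step: average deltas, weighted by each drone's sample count."""
    total = sum(counts)
    avg = [sum(d[i] * n for d, n in zip(deltas, counts)) / total
           for i in range(len(global_w))]
    return [g + a for g, a in zip(global_w, avg)]


def run_round(global_w: Vector, fleet: List[List[Sample]]) -> Vector:
    """One round: every drone trains locally, then the coordinator aggregates."""
    deltas = [local_train(global_w, samples) for samples in fleet]
    counts = [len(samples) for samples in fleet]
    return aggregate(global_w, deltas, counts)


if __name__ == "__main__":
    # Two hypothetical drones observing the same relation y = 2x.
    fleet = [[([1.0], 2.0), ([2.0], 4.0)], [([3.0], 6.0)]]
    w = [0.0]
    for _ in range(20):
        w = run_round(w, fleet)
    print(w)  # approaches [2.0]
```

Raw samples never leave `local_train`; only the delta crosses the link, which is where the bandwidth and privacy benefits come from.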

Designing for multi‑modality matters because no two sorties look alike. A river survey relies on video and GPS drift signals; a factory inspection leans on vibration and thermal cues; a cyber patrol watches RF patterns. The framework introduces modality‑aware scheduling so that drones prioritize learning on whichever sensors prove predictive at that moment, not just the heaviest camera stream. It also addresses field realities: intermittent connectivity, stragglers, and non‑identical data distributions. Asynchronous rounds let faster drones contribute without waiting for slower ones; on‑device compression and quantization save energy; adaptive aggregation balances fleets in which some craft see smoke and flames while others stare at empty sky. The goal is practical readiness, not just benchmark scores: models that keep improving despite noise and scarce data.
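Two of the ideas above, quantized updates and asynchronous contribution, lend themselves to a short Python sketch. Everything here is illustrative: uniform 8‑bit quantization is one common compression choice, and discounting a late update by `1 / (1 + staleness)` is an assumed rule for the example, not something the project has specified.

```python
from typing import List, Tuple


def quantize(update: List[float], bits: int = 8) -> Tuple[List[int], float, float]:
    """Map each float to an integer code in [0, 2**bits - 1].

    Returns the codes plus the offset and step size needed to decode them.
    """
    lo, hi = min(update), max(update)
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in update]
    return codes, lo, scale


def dequantize(codes: List[int], lo: float, scale: float) -> List[float]:
    """Recover approximate floats; the error is at most about half a step."""
    return [lo + c * scale for c in codes]


def apply_async(global_w: List[float], delta: List[float],
                staleness: int, base_lr: float = 1.0) -> List[float]:
    """Merge one drone's update as soon as it arrives.

    `staleness` counts how many global versions have passed since the drone
    last synced; older updates get a smaller mixing weight (assumed rule).
    """
    alpha = base_lr / (1 + staleness)
    return [g + alpha * d for g, d in zip(global_w, delta)]


if __name__ == "__main__":
    delta = [-0.31, 0.02, 0.47, 0.11]
    codes, lo, scale = quantize(delta)
    restored = dequantize(codes, lo, scale)
    # A fresh update (staleness 0) is applied in full; a stale one is damped.
    print(apply_async([0.0] * 4, restored, staleness=0))
    print(apply_async([0.0] * 4, restored, staleness=3))
```

With 8‑bit codes each weight costs one byte on the wire instead of four or eight, at the price of a small, bounded rounding error.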

Security, Privacy, And Resilience By Design

Edge learning falls flat without robust safeguards, so the team is layering defenses suited to adversarial settings. Modality‑aware differential privacy adds calibrated noise to updates, masking sensitive features that might be traced to a household, license plate, or wireless signature. Secure aggregation ensures the server sees only an encrypted sum, preserving confidentiality even if the coordinator is probed. Equally critical, attack detection scans for model poisoning by compromised drones (outliers in gradient space, inconsistent loss patterns, or anomalous update directions) and quarantines suspect clients before damage spreads. The measures complement one another: privacy preserves dignity, cryptography protects the process, and detection restores trust when links fail or devices fall into hostile hands.
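The three defenses can each be illustrated with a toy Python function. This is a sketch under loud assumptions, not the project's design: `privatize` uses the standard clip-then-add-Gaussian-noise recipe, with `noise_multiplier` standing in for a per-modality setting; `mask_updates` imitates secure aggregation with pairwise masks that cancel in the sum, using a seeded generator as a stand-in for a real shared secret; and `flag_outliers` checks only update norms against the median, one simple signal among those listed above.

```python
import math
import random
from typing import List


def clip(update: List[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * factor for v in update]


def privatize(update: List[float], max_norm: float,
              noise_multiplier: float, rng: random.Random) -> List[float]:
    """Clip, then add Gaussian noise scaled to the clipping bound."""
    sigma = noise_multiplier * max_norm
    return [v + rng.gauss(0.0, sigma) for v in clip(update, max_norm)]


def mask_updates(updates: List[List[float]], seed: int = 0) -> List[List[float]]:
    """Pairwise masking: for each pair i < j, drone i adds a mask and j subtracts it.

    Individual masked updates look random, but the masks cancel in the sum.
    The seeded generator is a placeholder for a key both drones would share.
    """
    n, dim = len(updates), len(updates[0])
    masked = [list(u) for u in updates]
    for i in range(n):
        for j in range(i + 1, n):
            pair_rng = random.Random(f"{seed}:{i}:{j}")
            for k in range(dim):
                m = pair_rng.uniform(-100.0, 100.0)
                masked[i][k] += m
                masked[j][k] -= m
    return masked


def flag_outliers(updates: List[List[float]], factor: float = 3.0) -> List[int]:
    """Return indices of updates whose norm exceeds factor times the median norm."""
    norms = [math.sqrt(sum(v * v for v in u)) for u in updates]
    median = sorted(norms)[len(norms) // 2]
    return [i for i, n in enumerate(norms) if n > factor * median]


if __name__ == "__main__":
    fleet = [[0.2, -0.1], [0.1, 0.3], [0.0, 0.2], [40.0, -35.0]]
    print("suspect drones:", flag_outliers(fleet))
    honest = [privatize(u, 1.0, 0.1, random.Random(i)) for i, u in enumerate(fleet[:3])]
    masked = mask_updates(honest)
    # The coordinator sums masked updates and never sees an individual one.
    print([sum(col) for col in zip(*masked)])
```

A production system would derive the pairwise masks from a key exchange and handle drones that drop out mid-round; the sketch skips both.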

Broader industry momentum reinforces the effort. Across edge AI, public agencies are demanding systems that work within bandwidth ceilings and honor privacy law, while operators press for faster cycles that do not collapse when storms or crowds congest networks. By aligning with these constraints, the approach promises tangible gains: quicker learning in fast‑changing scenes, lower uplink costs, and stronger guarantees for citizens caught in a drone’s field of view. Pathways to deployment include reference implementations for emergency managers, open benchmarks for multi‑modal federated tasks, and playbooks for testing under degraded comms. Standardization opportunities span telemetry schemas, privacy budgets, and red‑team protocols, laying the groundwork for a shared, certifiable stack.

What It Could Change Next

If adopted, municipal fleets might fly with models that adapt street by street: flagging heat plumes before flare‑ups, spotting debris that threatens bridges, or detecting rogue signals that hint at spoofing. Procurement conversations would then shift from data hoarding to capability sharing: which agencies host coordinators, how privacy budgets are allocated, and which missions demand extra defenses. Vendors that ship batteries and radios would start factoring learning loads into endurance math. Universities could contribute evaluation suites that mix weather, crowds, and adversaries, making field tests repeatable. The program also hints at economic upside: less backhaul means lower operating costs, and cross‑agency collaboration reduces duplicated training.

The immediate next steps are concrete rather than grandiose. Pilot programs with fire departments and utility inspectors would validate update schedules against airtime and safety limits. Stakeholders would document privacy budgets by sensor type and set red lines for sensitive scenes. Incident‑response playbooks would incorporate model‑poisoning drills alongside radio‑jamming scenarios. Open artifacts (datasets scrubbed for privacy, reference code, and attack traces) could be released under permissive terms to accelerate review. Success then depends on disciplined iteration: pushing updates only after flight tests, auditing aggregation logs, and retiring models that drift. In that cadence, secure multi‑modal federated learning could move from a grant proposal to a working spine for civilian drone AI.