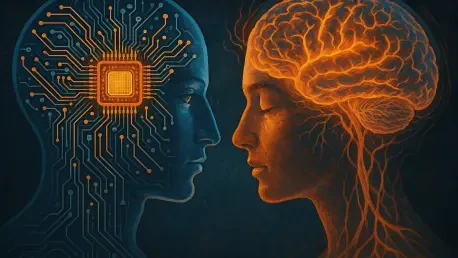

Laurent Giraid is a technologist who explores the intersection of artificial intelligence and human cognition. His work focuses on parallels between AI models and the human brain, particularly in language processing, as well as on the ethical considerations these systems raise. In this interview, Laurent discusses recent findings from his research, how they relate to neuroscience, and what they imply both for AI development and for our understanding of human cognition.

Can you explain what you mean by “units” in large AI models and how they are similar to the brain’s language system?

In large AI models, “units” are the individual artificial neurons, the basic components that work together within the model to process data. They resemble neurons in the human brain, where specific clusters can become specialized for distinct tasks such as language processing. In our research, we found that certain units within models behave much like the language network in our brains, selectively activating in response to language tasks.

What prompted your team to investigate the presence of specialized units within LLMs?

Our inspiration came from neuroscience, where researchers have done extensive work mapping specialized networks in the human brain, such as the Language Network. We wanted to see whether a similar structural specialization exists within large language models (LLMs), which could help explain both their strengths and their limitations in handling language.

How did you identify which units in the AI models are language-selective?

We borrowed techniques from neuroscience, measuring how strongly each unit responded when the model processed real sentences compared with random word lists. Units that were more active for structured language were identified as “language-selective.”
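A minimal sketch of this kind of localizer contrast is shown here in plain Python. It is an illustration, not the team's actual pipeline: it assumes each unit's activation has already been recorded (for example, averaged over tokens) for every sentence and every word-list stimulus, and it scores each unit with Welch's t-statistic for "sentences minus word lists", one common choice for such a contrast. The helper names `welch_t`, `language_selectivity`, and `top_k_units` and the toy activations are hypothetical.

```python
import math
import statistics
from typing import List, Sequence

# Hypothetical helpers sketching a localizer contrast; not the study's code.


def welch_t(a: Sequence[float], b: Sequence[float]) -> float:
    """Welch's t-statistic for mean(a) - mean(b) (unequal variances allowed)."""
    diff = statistics.mean(a) - statistics.mean(b)
    se = math.sqrt(statistics.variance(a) / len(a) + statistics.variance(b) / len(b))
    if se == 0.0:
        # Degenerate case: no variability in either condition.
        return 0.0 if diff == 0.0 else math.copysign(math.inf, diff)
    return diff / se


def language_selectivity(sentence_acts: List[List[float]],
                         wordlist_acts: List[List[float]]) -> List[float]:
    """One t-score per unit for the contrast sentences > word lists.

    sentence_acts[i][u] is unit u's activation for the i-th real sentence
    (assumed pre-averaged over tokens); wordlist_acts uses the same layout
    for the random word-list stimuli.
    """
    n_units = len(sentence_acts[0])
    return [
        welch_t([row[u] for row in sentence_acts], [row[u] for row in wordlist_acts])
        for u in range(n_units)
    ]


def top_k_units(scores: Sequence[float], k: int) -> List[int]:
    """Indices of the k units with the largest sentences > word-lists score."""
    return sorted(range(len(scores)), key=lambda u: scores[u], reverse=True)[:k]


# Toy activations (3 stimuli x 3 units), invented purely for illustration.
sentences = [[2.0, 0.1, 1.0], [2.2, 0.3, 1.1], [1.8, 0.2, 0.9]]
word_lists = [[0.5, 0.2, 0.9], [0.7, 0.1, 1.0], [0.6, 0.3, 0.8]]

scores = language_selectivity(sentences, word_lists)
print(top_k_units(scores, k=2))  # → [0, 2]
```

Keeping a fixed number (or fraction) of top-scoring units is only one simple selection rule; thresholding the t-scores for significance is another.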

Could you elaborate on how turning off these specific units affects the AI model’s performance?

The models showed a significant drop in performance on language tasks when these specific units were deactivated. They struggled to generate coherent text and performed poorly on linguistic benchmarks, underlining the critical role these units play in language ability.
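One common way to "turn off" a unit is to force its activation to zero during the forward pass. The sketch here shows that idea on a deliberately tiny two-layer network in plain Python; the network, its weights, and the names `ablate` and `forward` are hypothetical and only illustrate the mechanism. In a PyTorch model, a similar effect is typically achieved with a forward hook (`torch.nn.Module.register_forward_hook`) that returns a modified copy of a layer's output.

```python
from typing import Iterable, List, Sequence

# Hypothetical toy model used only to illustrate unit ablation.


def ablate(activations: Sequence[float], units: Iterable[int]) -> List[float]:
    """Return a copy of a layer's activations with the given units set to zero."""
    out = list(activations)
    for u in units:
        out[u] = 0.0
    return out


def forward(x: Sequence[float],
            w_hidden: Sequence[Sequence[float]],
            w_out: Sequence[Sequence[float]],
            ablated_units: Iterable[int] = ()) -> List[float]:
    """Two-layer network (ReLU hidden layer, linear readout) with optional ablation.

    The listed hidden units are zeroed after the ReLU, so nothing they
    computed reaches the readout layer.
    """
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    hidden = ablate(hidden, ablated_units)
    return [sum(w * h for w, h in zip(row, hidden)) for row in w_out]


x = [1.0, 2.0]
w_hidden = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # 3 hidden units
w_out = [[1.0, 1.0, 1.0]]                        # single output

print(forward(x, w_hidden, w_out))                     # → [4.5]
print(forward(x, w_hidden, w_out, ablated_units=[1]))  # → [2.5]
```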

You mentioned the models struggled with language tasks after certain neurons were turned off. How did you determine that fewer than 100 neurons are critical for language?

Through experimentation, we discovered that disrupting fewer than 100 of these selective neurons (about 1% of the total) could severely impair language functionality. This finding surprised us, because it showed how crucial such a small subset is to a model’s linguistic ability.

How did the performance of the models change when random units were turned off compared to when the language-specific units were disabled?

When random units were turned off, there was barely any impact on the models’ performance. In stark contrast, disabling the language-specific units led to a drastic reduction in language processing capabilities, reinforcing the idea that these units have a specialized and essential role.
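Comparing targeted ablations against random ones is a standard control: if removing any equally sized set of units hurt performance just as much, the selected units would not be special. A minimal sketch of that control follows, assuming an `evaluate` callback that scores the model with a given set of units ablated. That interface, the helper names, and the toy scorer in the demo are all hypothetical.

```python
import random
import statistics
from typing import Callable, List, Sequence

# `evaluate(ablated_units)` is a hypothetical callback returning a task score
# (e.g. benchmark accuracy) for the model with those units zeroed.
Evaluate = Callable[[Sequence[int]], float]


def performance_drop(evaluate: Evaluate, units: Sequence[int]) -> float:
    """Baseline score minus the score with `units` ablated (larger = more damage)."""
    return evaluate(()) - evaluate(units)


def random_control(evaluate: Evaluate, n_units: int, selected: Sequence[int],
                   n_draws: int = 20, seed: int = 0) -> float:
    """Mean drop over random, size-matched sets drawn from the non-selected units."""
    rng = random.Random(seed)
    excluded = set(selected)
    pool = [u for u in range(n_units) if u not in excluded]
    drops: List[float] = []
    for _ in range(n_draws):
        sample = rng.sample(pool, len(selected))
        drops.append(performance_drop(evaluate, sample))
    return statistics.mean(drops)


def toy_evaluate(ablated: Sequence[int]) -> float:
    """Invented scorer: only units 0 and 1 matter, each worth 0.4 of the score."""
    critical = {0, 1}
    return 0.9 - 0.4 * len(critical.intersection(ablated))


selected = [0, 1]
print(performance_drop(toy_evaluate, selected))                     # ≈ 0.8
print(random_control(toy_evaluate, n_units=10, selected=selected))  # → 0.0
```

Because the toy scorer depends only on units 0 and 1, every random, size-matched draw leaves its score unchanged, an idealized version of the contrast described in the answer.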

What surprised you the most during your research into these language-selective units?

The most surprising element was the efficiency of the neuroscience-inspired techniques we used. We initially anticipated a more complex process, but these approaches quickly zeroed in on essential units, echoing the efficiency seen in brain network studies.

How did the techniques from neuroscience assist in identifying relevant units in the AI models?

Adopting neuroscience techniques such as localizers, which contrast responses to a target condition with responses to a control condition, allowed us to quickly identify which units became active during specific tasks. This streamlined the process and let us map units within AI models to functions akin to human cognitive tasks.

What are some additional questions that arise from finding specialized reasoning and social thinking units in some models but not others?

These observations raise questions about the training data and methodologies used. Why do certain models develop these specialized units while others do not? Is it the diversity of the data, or something in the architectures and training procedures, that encourages specialization?

Why do you think certain AI models have specialized reasoning units while others do not?

It may come down to differences in training datasets and algorithms. Models exposed to a broader variety of tasks might naturally develop specialized units, much as diverse life experiences can shape neural pathways in humans.

In your view, does the presence of isolated units in a model correlate with better performance?

There does seem to be a correlation: models with isolated specialized units tend to perform better on the corresponding tasks. These units might provide a more concentrated and efficient processing pathway for related functions.

What future research directions are you considering, especially with multi-modal models?

We’re keen to explore how multi-modal models, which handle inputs beyond text such as images or sounds, develop specialized units. A major question is whether these models show deficits similar to those observed when language-specific units in LLMs are inhibited.

How do you think multi-modal models will compare to human processing when given combined inputs like text and visuals?

Multi-modal models could mirror human processing much more closely by actively integrating diverse sensory data. We’re curious to see whether they develop overlapping specialized units, similar to the brain’s networks that seamlessly juggle multiple types of input.

Can these findings help us better understand neurological conditions in humans, similar to the effects of strokes on the brain’s Language Network?

Absolutely. Disabling specific units in a model is loosely analogous to a stroke damaging the brain’s Language Network, so studying how targeted disruptions affect AI gives us a parallel system for studying brain conditions. It might help us diagnose or treat language impairments in humans more effectively.

Looking at the potential applications, how do you see this research contributing to advancements in disease diagnosis and treatment?

This research opens the way to simulating cognitive dysfunctions in a controlled setting, which could offer new insights for diagnostics. By drawing parallels between AI and brain networks, we might refine strategies to detect and treat neurological issues more precisely, with AI eventually playing a role in therapeutic technologies.