Let me introduce Laurent Giraid, a renowned technologist whose expertise in artificial intelligence, particularly machine learning, natural language processing, and AI ethics, has made him a leading voice in the field. Today, we dive into the transformative world of generative AI: its rapid integration into industries and daily life, the ethical challenges it poses, and groundbreaking concepts such as “world models” that could redefine its future. Our conversation also touches on the societal benefits AI can bring when guided by collaborative efforts, and on the safeguards needed to ensure its responsible development.

How did the surge of tools like ChatGPT inspire collaborative initiatives focused on generative AI, and what broader societal impacts are these efforts aiming for?

The rise of tools like ChatGPT in 2022 was a wake-up call for many in the tech and academic communities. It showed how quickly generative AI could spread into everyday life and industry, and it created a need for structured collaboration to harness that potential responsibly. These initiatives bring together researchers, industry leaders, and educators to focus on using AI for societal good: improved healthcare diagnostics, better educational tools, or faster scientific discovery. The goal is to ensure that as AI evolves, it benefits humanity as a whole rather than a select few, while addressing risks such as misinformation and inequity.

In what ways has generative AI already reshaped industries and the way people live their daily lives since its mainstream debut?

Generative AI has made a profound impact in a short time. In healthcare, for instance, it’s being used to analyze medical data and assist with diagnoses, while in scientific research it’s helping model complex problems like protein folding. For everyday users, it has become a go-to for drafting emails, generating creative content, or learning new skills through personalized chat interactions. It’s fascinating to see how it has lowered barriers: people who never thought they’d interact with AI now use it without a second thought.

What are some of the biggest hurdles in making generative AI reliable enough for critical real-world applications?

One of the main challenges is ensuring accuracy and trustworthiness. Generative AI can produce outputs that sound convincing but are factually wrong, or that are biased because of the data it was trained on. For critical applications such as medicine or infrastructure, the stakes are high, so we need robust testing and validation methods. There’s also the issue of transparency: users and regulators need to understand how these systems arrive at their outputs. Overcoming these hurdles requires both technical innovation and ethical oversight.

How can researchers and leaders ensure that generative AI remains safe and trustworthy as it continues to advance?

Safety and trust come down to proactive design and governance. We need to embed ethical principles such as fairness and accountability into AI development from the start. That means diverse teams working on these systems to minimize blind spots, as well as clear guidelines for deployment. Regular audits and public dialogue are also key, because AI isn’t just a technical issue but a societal one. Collaboration between academia, industry, and policymakers can help build frameworks that keep AI aligned with human values.

Can you explain the concept of ‘world models’ and how they differ from the large language models we’re familiar with today?

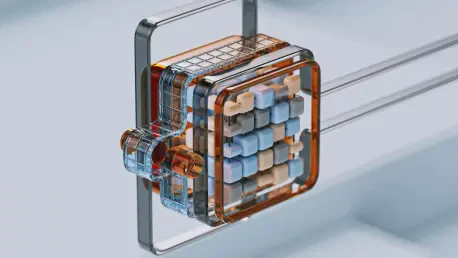

World models are a visionary leap in AI. Whereas current large language models learn to predict and generate responses from massive datasets of text, world models learn by interacting with their environment, much as a child learns through sensory experience. They build an internal understanding of how the world works: physics, cause and effect, spatial relationships. This contrasts sharply with today’s language models, which, while powerful, lack a grounded sense of reality and often just reproduce patterns they’ve seen in data.

Why are world models seen as a potential game-changer for the future of AI, especially in areas like robotics?

World models could revolutionize AI by enabling systems to adapt to new situations without extensive retraining. In robotics, this means a robot could work out how to navigate a cluttered room or perform a novel task simply by observing and reasoning about its surroundings. That flexibility matters because it moves us closer to general-purpose machines that can operate in unpredictable, real-world settings, whether in a factory or a home.

What specific tasks or applications could robots with world models handle, and how might this transform their role in industries or households?

Imagine a robot in a warehouse that can instantly adapt to a new layout or handle unexpected obstacles without specific programming for every scenario. Or a robot in a home that learns to help with chores by watching and understanding context, such as knowing not to vacuum while someone is sleeping. This adaptability could make robots far more practical and cost-effective, expanding their use in logistics, caregiving, and even small businesses, and ultimately making them true partners rather than mere tools.

What are some practical ways to design safeguards for future AI systems, and why do experts believe these can prevent AI from becoming uncontrollable?

Safeguards, or guardrails, for AI involve building constraints into the system’s design, such as limiting the actions it can take or requiring human approval for critical decisions. Think of it as a fail-safe: the AI is inherently bound by rules it can’t override. Experts are confident in this approach because we’ve been designing controls for complex systems for centuries, from laws that govern human behavior to safety protocols in engineering. The point is to embed control at the core rather than bolt it on as an afterthought.

What is your forecast for the future of generative AI and its impact on society over the next decade?

I believe generative AI will become even more integrated into our lives, driving innovations we can’t fully imagine yet, such as personalized medicine or education tailored to each individual’s needs. But its impact will depend on how we steer it. If we prioritize ethics and inclusivity, it could bridge gaps in access to resources and knowledge. Without careful oversight, however, it risks amplifying biases or creating new divides. The next decade will be about striking that balance, and I’m optimistic we can get it right with collective effort.