In the fast-paced realm of artificial intelligence (AI), one of the most pressing hurdles for tech giants is managing the immense heat generated by the high-performance chips and GPUs that power complex workloads. As AI applications become increasingly sophisticated, overheating threatens not only system performance but also hardware longevity. Microsoft Corporation is tackling this issue head-on with microfluidics, a technology that promises to redefine cooling strategies in data centers. Unlike conventional methods that often fall short under the strain of modern demands, this approach could set a new standard for efficiency and scalability. This article examines how Microsoft is using microfluidics to address thermal challenges, enhance chip design, and optimize overall data center operations, and considers the broader implications for the tech industry.

Innovating Thermal Solutions for AI Systems

Revolutionizing Heat Management

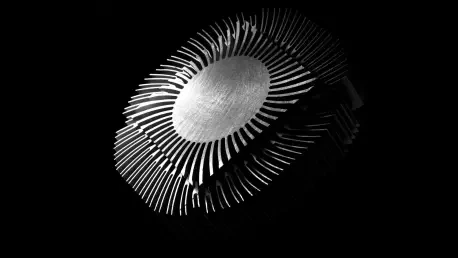

Microfluidics represents a bold leap forward in cooling technology, embedding tiny channels directly into AI chips to circulate cooling fluid precisely where heat is generated. This method stands in sharp contrast to traditional air or external liquid cooling systems, which often struggle to target heat sources effectively. Microsoft has begun testing the technology under controlled conditions, in work overseen by systems technology expert Husam Alissa, and prototype systems have shown impressive results. The tests span server chips that support cloud-based Office applications and GPUs dedicated to AI tasks. A standout feature of this approach is that the cooling fluid can run at elevated temperatures, up to 70°C (158°F). Because the fluid does not have to be chilled as far, less energy goes into cooling it, which addresses a major inefficiency in conventional systems and paves the way for more sustainable data center operations.

The early success of microfluidics in Microsoft’s trials highlights its potential to transform thermal management on a broader scale. Beyond maintaining optimal temperatures, the technology reduces the risk of performance throttling, a common issue when chips overheat. By applying cooling directly at the chip level, the system helps sustain consistent operation even under heavy AI workloads. The reduced energy footprint of using high-temperature fluids also aligns with growing industry demand for greener solutions. Because data centers consume vast amounts of power, often contributing to significant carbon emissions, such innovations are critical for balancing performance with environmental responsibility. Microsoft’s progress in this area signals a shift toward more integrated and efficient cooling mechanisms that could influence future hardware development across the sector.

Driving Efficiency in Data Centers

Another compelling aspect of microfluidics lies in its capacity to enhance overall data center efficiency beyond mere temperature control. By mitigating heat at the source, Microsoft can push the boundaries of hardware performance without the constant threat of thermal damage. This direct cooling method also allows for tighter integration of components, reducing the physical space needed for cooling infrastructure. As a result, data centers can allocate more room for computational hardware, maximizing output within existing facilities. This is particularly vital as AI-driven demand continues to surge, placing unprecedented pressure on infrastructure to scale rapidly while maintaining cost-effectiveness.

Moreover, the energy savings from microfluidics contribute to a more sustainable operational model, an increasingly important consideration for tech companies under scrutiny for their environmental impact. Traditional cooling often requires chilling fluids to much lower temperatures, a process that consumes substantial power. In contrast, Microsoft’s ability to operate with warmer fluids cuts down on this overhead, potentially lowering electricity costs and carbon footprints. This efficiency gain is not just a technical achievement but also a strategic one, positioning the company as a leader in responsible innovation. As other industry players observe these advancements, microfluidics could inspire a wave of similar efforts aimed at reconciling high performance with ecological stewardship in data center design.

Advancing Hardware Design and Industry Trends

Performance and Design Innovations

Microfluidics is not just about cooling; it unlocks remarkable possibilities for chip architecture and performance optimization at Microsoft. By effectively managing heat, the technology enables vertical stacking of chips, a design strategy that packs more computational power into a smaller footprint. This denser configuration can significantly boost processing capabilities, a crucial advantage for handling the intensive demands of AI applications. Such innovation allows for more robust systems without the need for sprawling hardware expansions, addressing both space and cost constraints in data centers. As AI workloads evolve, this approach could become a cornerstone of next-generation chip design, offering a scalable solution to keep pace with technological growth.

Equally transformative is the support microfluidics provides for overclocking, a technique where chips are pushed to operate at higher speeds to meet temporary spikes in demand. Technical fellow Jim Kleewein has noted its potential for applications like Microsoft Teams, which often experiences usage surges during peak meeting times. Instead of investing in additional hardware to manage these short-term loads, overclocking offers a flexible and economical alternative. With heat no longer a limiting factor, thanks to direct cooling, chips can sustain higher performance levels without risking damage. This adaptability not only enhances user experience by ensuring seamless operation during critical moments but also reflects a smarter approach to resource allocation, potentially setting a new benchmark for how data centers respond to fluctuating demands.

Custom Hardware and Market Dynamics

Microsoft’s broader strategy extends beyond cooling innovations to encompass comprehensive hardware customization for its expansive data centers. The company has recently added more than 2 gigawatts of capacity, according to Rani Borkar, vice president for hardware systems at Azure, and at that scale tailored solutions become paramount. One example is hollow-core fiber for networking, which guides light through an air-filled core rather than solid glass; because light travels faster in air than in glass, data arrives with lower latency. Collaborations with industry leaders like Corning Inc. aim to scale this technology for widespread use, addressing connectivity bottlenecks in AI infrastructure. Such initiatives underscore a holistic push to optimize every facet of data center performance, from processing power to data transfer efficiency.

The market implications of these advancements are already evident: after news of the microfluidics project broke, shares of Vertiv Holdings Co., a major provider of data center cooling solutions, dropped 8.4%. This reaction suggests growing investor concern over potential disruptions to traditional cooling markets as advanced technologies gain traction. If microfluidics proves scalable, it could challenge established players, prompting a reevaluation of competitive dynamics in the sector. For Microsoft, this positions the company at the forefront of innovation, potentially influencing industry standards for thermal management. As other tech giants take note, the ripple effects might drive accelerated investment in alternative cooling methods, reshaping the landscape of data center infrastructure over the coming years.

Charting the Future of AI Infrastructure

Memory Development and Strategic Focus

Looking ahead, Microsoft’s ambitions in AI hardware extend to critical areas like memory technology, with a particular emphasis on high-bandwidth memory (HBM). Essential for AI computing, HBM supports the rapid data access required for complex algorithms. Currently, the company’s Maia AI chip uses commercially available HBM from suppliers like Micron Technology Inc., but Rani Borkar has hinted at undisclosed developments in this domain. Borkar described HBM as pivotal to current AI needs, and there is a clear intent to explore proprietary advancements that could further enhance performance. This focus underscores the role of memory as a linchpin in sustaining the computational demands of future AI applications.

The strategic importance of memory innovation cannot be overstated, especially as AI models grow in size and complexity, requiring ever-faster data processing capabilities. Microsoft’s ongoing efforts suggest a commitment to not only keeping pace with industry trends but also driving them forward through customized solutions. While specifics remain under wraps, the potential for breakthroughs in this area could redefine how AI systems handle massive datasets. As competition intensifies among tech leaders to dominate AI infrastructure, advancements in HBM and related technologies will likely play a decisive role. Microsoft’s proactive stance in this space positions it to address emerging challenges, ensuring that memory constraints do not hinder the scalability of AI-driven services in expansive data center environments.

Reflecting on Industry Transformation

Microsoft’s adoption of microfluidics marks a pivotal moment in addressing the thermal challenges that constrain AI data centers. Early testing of direct chip cooling with high-temperature fluids points to a viable path to efficiency, while chip stacking and overclocking could redefine performance benchmarks. Beyond cooling, efforts in custom hardware and networking with hollow-core fiber show a comprehensive approach to optimization. The market’s response, evidenced by the drop in a cooling supplier’s stock, underscores the disruptive potential of these advancements. Moving forward, the focus should shift to scaling these technologies for widespread adoption, fostering industry collaborations to overcome production hurdles, and continuing to explore memory solutions like HBM. These steps will be crucial in sustaining the momentum of AI growth, so that infrastructure evolves in tandem with computational demands while prioritizing energy efficiency.