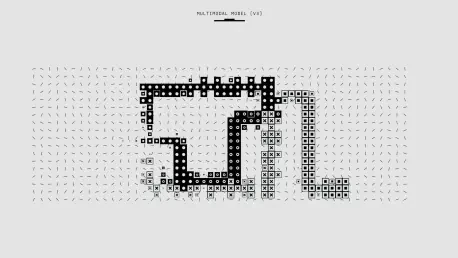

In an era where artificial intelligence shapes industries from healthcare to transportation, a staggering statistic reveals a critical gap: nearly 89% of AI research papers focus solely on vision and language data, leaving vast swaths of real-world complexity untapped. This narrow scope limits AI’s ability to address multifaceted challenges like climate change or autonomous driving, where diverse data inputs are essential. Enter the Multimodal AI Framework, a pioneering approach designed to integrate text, images, sound, and sensor readings into a cohesive system. This review explores the framework’s transformative potential, delving into its core features, real-world applications, and the hurdles it must overcome to redefine how AI interacts with the world.

Core Features of the Framework

Seamless Data Integration

At the heart of the Multimodal AI Framework lies its ability to process and unify multiple data types. Unlike traditional AI models that excel in isolated domains such as image recognition or natural language processing, this framework combines inputs like audio signals, environmental sensor data, and visual feeds to create a more holistic understanding of complex scenarios. This integration is achieved through advanced algorithms that align and interpret disparate data streams, enabling AI to mimic human-like perception across varied contexts.
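One way to picture the alignment step described above is nearest-timestamp matching between streams. The sketch below is a minimal illustration, not the framework's actual method: the `Sample` layout, the function names `nearest_sample` and `align`, and the 50 ms tolerance are all assumptions made for this example.

```python
from bisect import bisect_left
from typing import Dict, List, Optional, Tuple

# Hypothetical sample layout: (timestamp in seconds, reading).
Sample = Tuple[float, float]


def nearest_sample(stream: List[Sample], t: float, tolerance: float) -> Optional[Sample]:
    """Return the sample closest in time to t, or None if none lies within tolerance.

    The stream must be sorted by timestamp.
    """
    if not stream:
        return None
    times = [ts for ts, _ in stream]
    i = bisect_left(times, t)
    # Only the neighbours on either side of the insertion point can be nearest.
    candidates = stream[max(i - 1, 0): i + 1]
    best = min(candidates, key=lambda s: abs(s[0] - t))
    return best if abs(best[0] - t) <= tolerance else None


def align(reference: List[Sample],
          others: Dict[str, List[Sample]],
          tolerance: float = 0.05) -> List[dict]:
    """Attach the nearest reading from every other stream to each reference sample.

    Rows where any stream has no reading within the tolerance are dropped.
    """
    rows = []
    for t, value in reference:
        row = {"t": t, "reference": value}
        for name, stream in others.items():
            match = nearest_sample(stream, t, tolerance)
            if match is None:
                break  # this reference sample cannot be fully aligned
            row[name] = match[1]
        else:
            rows.append(row)
    return rows
```

Calling `align(camera, {"radar": radar, "audio": audio})` returns one dictionary per camera sample that has a radar and an audio reading close enough in time. Dropping incomplete rows is a deliberately conservative choice; a real system might interpolate instead.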

This capability matters because breaking down silos between data modalities allows AI systems to make better-informed decisions in dynamic environments. For instance, in autonomous vehicles, visual cues can be cross-referenced with radar and sound data to enhance navigation accuracy, which is especially valuable in safety-critical applications.
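Cross-referencing modalities can be sketched as a simple late-fusion step, where each sensor reports its own confidence and the scores are combined. This is an illustrative assumption about how such cross-checking might work, not a description of the framework's internals. The function name `fuse_confidences`, the modality names, and the weights are hypothetical.

```python
from typing import Dict


def fuse_confidences(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Combine per-modality confidences (each in [0, 1]) into a weighted average.

    Modalities that did not report a score are skipped, and the remaining
    weights are renormalised so the result stays in [0, 1].
    """
    used = [m for m in scores if m in weights]
    total_weight = sum(weights[m] for m in used)
    if total_weight == 0:
        raise ValueError("no weighted modality reported a score")
    return sum(scores[m] * weights[m] for m in used) / total_weight


# Hypothetical weights: how much each sensor is trusted for obstacle detection.
WEIGHTS = {"camera": 0.5, "radar": 0.3, "audio": 0.2}
obstacle_confidence = fuse_confidences(
    {"camera": 0.9, "radar": 0.6, "audio": 0.3}, WEIGHTS
)
```

Renormalising over the modalities that actually reported keeps the score meaningful when a sensor drops out, although a production system would likely treat a missing sensor as a safety event in its own right.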

Ethical and Safety Guidelines

Another cornerstone of this framework is its emphasis on ethical deployment and safety. Built-in protocols guide developers in creating AI systems that prioritize user trust and accountability, addressing concerns like bias in data processing or unintended consequences in real-time applications. These guidelines are not mere afterthoughts but are woven into the framework’s design, ensuring that ethical considerations shape every stage of development.

The impact of such protocols is evident in their ability to foster reliability. By embedding safety mechanisms, the framework mitigates risks associated with AI misinterpretation of multimodal inputs, such as incorrect medical diagnoses or flawed environmental predictions. This focus on trustworthiness sets a new standard for AI systems, aligning technological progress with societal well-being.
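One common form such a safety mechanism can take is an abstention rule: the system defers to a human when modalities disagree or any of them is unsure. The sketch below assumes this design for illustration only; the source does not specify the framework's protocols, and the name `decide`, the `"defer_to_human"` label, and the 0.8 threshold are hypothetical.

```python
from typing import Dict, Tuple

DEFER = "defer_to_human"  # hypothetical sentinel for "do not act automatically"


def decide(predictions: Dict[str, Tuple[str, float]], min_confidence: float = 0.8) -> str:
    """Return the agreed label, or DEFER when the modalities are not trustworthy together.

    predictions maps a modality name to (predicted label, confidence in [0, 1]).
    """
    if not predictions:
        return DEFER
    labels = {label for label, _ in predictions.values()}
    lowest = min(conf for _, conf in predictions.values())
    # Act automatically only if every modality agrees and each is confident enough.
    if len(labels) > 1 or lowest < min_confidence:
        return DEFER
    return labels.pop()
```

For example, if an imaging model and a clinical-records model both predict the same finding with high confidence, `decide` returns that finding; any disagreement or low confidence routes the case to a person.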

Performance and Real-World Impact

Cutting-Edge Applications

The Multimodal AI Framework shines brightest in its practical applications across diverse sectors. In healthcare, it empowers diagnostic tools to integrate clinical records, imaging scans, and genomic data, leading to more accurate disease detection and personalized treatment plans. This convergence of information enables medical professionals to move beyond surface-level analysis, tackling intricate health challenges with precision.

In the realm of transportation, the framework underpins self-driving car technology by synthesizing visual, sensor, and geospatial data. This multidimensional approach enhances vehicle safety, allowing for real-time responses to unpredictable road conditions. Beyond these fields, environmental forecasting benefits immensely, as the framework combines satellite imagery, weather sensor readings, and historical trends to predict climate patterns, aiding in disaster preparedness and agricultural planning.

A particularly striking use case emerges in global crisis response. By integrating data from social media feeds, satellite imagery, and on-ground sensors, the framework supports rapid decision-making during pandemics or natural disasters. Such versatility underscores its potential to address pressing issues on a planetary scale, making it a vital tool for policymakers and researchers alike.

Current Trends and Research Bias

Recent trends in AI research highlight both the urgency and the challenge of adopting multimodal approaches. Statistics from leading repositories show that an overwhelming majority of studies still prioritize vision and language data, with limited exploration of other modalities. Over the next two years, a gradual shift is expected as the industry recognizes the need for broader data integration to solve real-world problems effectively.

This persistent bias toward narrow data types reveals a gap that the Multimodal AI Framework aims to bridge. Emerging research now focuses on practical deployment rather than theoretical advancements, aligning with the framework’s goals. Collaborative efforts between academia and industry are gaining momentum, signaling a collective push to diversify AI capabilities and ensure they meet the demands of complex environments.

Challenges in Development and Adoption

Technical and Scalability Hurdles

Despite its promise, the Multimodal AI Framework faces significant technical challenges. Integrating diverse data types requires immense computational power and sophisticated alignment techniques to avoid discrepancies between modalities. These hurdles often result in slower processing times or reduced accuracy, particularly when scaling the framework for widespread use in resource-constrained settings.

Moreover, the complexity of managing multimodal datasets poses risks of overfitting or misinterpretation, where AI might draw incorrect conclusions from poorly synchronized inputs. Ongoing efforts by developers aim to streamline these processes through optimized algorithms and enhanced hardware support, but achieving seamless scalability remains a work in progress.
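A simple guard against poorly synchronized inputs is to measure the time skew across modalities before a bundle of readings is used at all. The following is a minimal sketch under assumed names (`max_skew`, `is_synchronized`) and an assumed 50 ms limit. It is not taken from the framework itself.

```python
from typing import Dict


def max_skew(timestamps: Dict[str, float]) -> float:
    """Largest gap, in seconds, between the capture times of any two modalities."""
    if not timestamps:
        raise ValueError("at least one modality timestamp is required")
    return max(timestamps.values()) - min(timestamps.values())


def is_synchronized(timestamps: Dict[str, float], max_allowed_skew: float = 0.05) -> bool:
    """True when all modalities were captured within max_allowed_skew of each other."""
    return max_skew(timestamps) <= max_allowed_skew
```

Bundles that fail the check can be discarded or flagged rather than passed to the model, which trades some data loss for a lower risk of conclusions drawn from mismatched moments in time.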

Ethical and Regulatory Obstacles

Beyond technical issues, ethical and regulatory concerns loom large. The framework’s ability to handle sensitive data across modalities raises questions about privacy and consent, especially in applications like healthcare or surveillance. Ensuring that AI adheres to global standards while respecting cultural and legal differences is a daunting task for developers.

Regulatory frameworks lag behind the rapid pace of AI innovation, creating uncertainty for industry adoption. Governments and organizations are beginning to address these gaps, but harmonizing policies to support safe and equitable deployment of multimodal AI remains a critical barrier. Balancing innovation with oversight will be essential to unlocking the framework’s full potential.

Final Thoughts and Next Steps

This review has highlighted how the Multimodal AI Framework tackles the limitations of conventional AI by integrating diverse data streams while keeping ethics and safety central to its design. Its applications across healthcare, transportation, and environmental forecasting demonstrate a capacity to transform industries, while persistent challenges in scalability and regulation underscore the road ahead.

Moving forward, stakeholders must prioritize collaborative research to refine data integration techniques and reduce computational demands. Industry leaders should advocate for standardized ethical guidelines, ensuring that privacy and trust remain at the forefront of deployment. Additionally, policymakers need to accelerate the development of adaptive regulations that keep pace with technological advancements. By focusing on these actionable steps, the Multimodal AI Framework can evolve into a cornerstone of practical, trustworthy AI, paving the way for solutions to some of humanity’s most daunting challenges.