A new training method for artificial intelligence hardware cuts energy consumption by nearly six orders of magnitude compared to traditional GPUs while also improving model accuracy. The technique, developed by researchers from China’s Zhejiang Lab and Fudan University and detailed in a recent Nature Communications study, addresses a long-standing conflict between the physical limitations of next-generation analog computing hardware and the precision demands of AI training algorithms. Known as the error-aware probabilistic update (EaPU), it could unlock the potential of energy-efficient analog in-memory computing and reshape the economics and environmental footprint of the AI industry by making the development of powerful models more sustainable and accessible.

The Promise and Problem of Analog AI Hardware

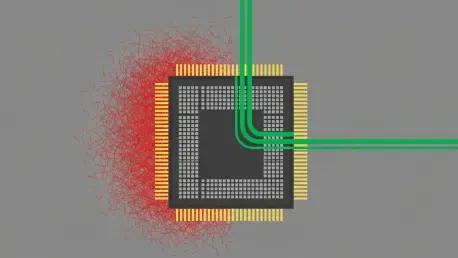

The growth of artificial intelligence is increasingly constrained by the energy appetite of digital computing hardware, particularly during the training of large-scale models. A promising alternative is analog in-memory computing, which uses devices such as memristors. These electronic components merge memory and processing into a single unit, loosely mimicking the synapses of the human brain. Each memristor stores a weight as its conductance, so by Ohm’s law an applied voltage produces a current equal to the weight multiplied by the input, and the currents from many devices on a shared wire add up. An array of memristors can therefore execute the matrix operations at the heart of neural network computations with very high energy efficiency. This approach avoids the primary energy bottleneck in conventional digital chips, where data must be constantly shuttled back and forth between separate memory and processing units, a process that consumes significant power and time. This potential has driven substantial research and made memristors a focal point for the future of AI hardware.
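As a rough illustration of how a memristor array performs a matrix operation, the sketch below models an idealized crossbar in plain Python. It assumes perfect devices with no noise, drift, or wire resistance, and the function name `crossbar_mvm` and the example values are hypothetical, not taken from the study.

```python
def crossbar_mvm(conductances, voltages):
    """Column currents of an idealized memristor crossbar.

    conductances[i][j] is the conductance (in siemens) of the device that
    joins input row i to output column j; voltages[i] is the voltage
    applied to row i. Assumes ideal devices (no noise, no wire resistance).
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law per device: I = G * V.
            # Currents flowing into the same column wire simply add up.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Hypothetical 2x2 array: weights stored as conductances, inputs as voltages.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.5, 0.25]
I = crossbar_mvm(G, V)
print(I)  # one output current per column
```

The whole multiply-accumulate happens where the weights are stored, which is why no data has to move between a separate memory and processor.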

Memristor-based systems have shown considerable success in prototypes for AI inference, the process of applying a trained model to make predictions. Training deep neural networks directly on this hardware, however, has remained a formidable challenge. The core problem is the inherent imprecision of writing data to these analog devices. The backpropagation algorithm, the cornerstone of modern AI training, requires a vast number of small, highly precise adjustments to the network’s synaptic weights, but memristors are physically noisy and unpredictable. Any attempt to set a memristor’s conductance, which represents a synaptic weight, to an exact value runs into physical limitations, including programming tolerances and post-write drift, in which the stored value changes subtly over time. Both effects disrupt the learning process and lead to instability.

A Paradigm Shift with the Error-Aware Probabilistic Update

The noise and drift of memristor devices create a fundamental “algorithm-hardware mismatch” that has historically thwarted progress. The researchers found that the weight updates dictated by the backpropagation algorithm are frequently 10 to 100 times smaller than the intrinsic noise level of the memristor devices themselves. Consequently, when the algorithm calls for a tiny adjustment, the hardware’s stochastic write errors can move the weight by far more than intended, overwhelming the update and derailing the training. This instability has forced researchers into unsatisfactory compromises, such as sacrificing the precision needed for high accuracy or spending excessive energy on repeated attempts to coerce the devices into exact states. These challenges have largely confined on-chip training to smaller, less complex networks, limiting the practical application of the technology.

Instead of fighting the inherent physical noise of memristor devices, the researchers devised EaPU, a method that works with this uncertainty. The approach redesigns the update rule to match the hardware’s natural characteristics. It transforms the small, deterministic weight adjustments generated by the standard backpropagation algorithm into larger, discrete updates that are applied probabilistically rather than with certainty. The mechanism is simple: a noise threshold, denoted ΔWth, is established, corresponding to the minimum reliable change that can be made to a memristor’s conductance. When the training algorithm calculates a desired weight update that is smaller than this threshold, EaPU intervenes. Instead of attempting a small update that would be drowned out by noise, the system applies a full, reliable threshold-sized pulse with a probability directly proportional to the magnitude of the originally intended change.
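The update rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors’ implementation. It assumes the write probability is exactly |ΔW| / ΔWth (the choice that makes the average written update equal the intended one) and that updates at or above the threshold are written unchanged, a case the description above does not cover. The helper name `eapu_update` and the numeric values are hypothetical.

```python
import random


def eapu_update(delta_w, threshold, rng=random):
    """Return the update actually written for one weight (illustrative helper).

    delta_w   -- update requested by backpropagation
    threshold -- smallest change the device can apply reliably (delta W_th)
    """
    magnitude = abs(delta_w)
    if magnitude >= threshold:
        # Assumption: large enough updates are written as requested.
        return delta_w
    # Sub-threshold update: write a full threshold-sized step with
    # probability |delta_w| / threshold (assumed proportionality constant).
    if rng.random() < magnitude / threshold:
        return threshold if delta_w > 0 else -threshold
    return 0.0  # no write pulse is issued for this weight


# Usage: request an update 100x smaller than the threshold many times.
rng = random.Random(0)
requested, threshold, trials = 0.01, 1.0, 200_000
written = [eapu_update(requested, threshold, rng) for _ in range(trials)]

mean_written = sum(written) / trials
skipped_fraction = written.count(0.0) / trials
print(mean_written, skipped_fraction)
```

In this toy setting most calls return `0.0`, so most write pulses are skipped, while the mean of the written values stays close to the requested update.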

Validation Across Scales and Striking Results

A crucial property of EaPU is that, over many iterations, the average of the probabilistic updates converges to the value intended by the standard backpropagation algorithm. The overall learning trajectory and training performance of the neural network are therefore preserved without requiring impossible precision from the hardware. The most dramatic side effect is a massive reduction in the number of write operations. Since the vast majority of weight updates during deep learning are very small, EaPU skips the write for most of them at any given step, resulting in an update sparsity of over 99%. In one test involving a 152-layer ResNet, the team observed that only 0.86 out of every thousand parameters (about 0.09%) required an update at any given training step.
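The convergence claim can be checked with a one-line expectation. The derivation below assumes the write probability for a sub-threshold update is p = |ΔW| / ΔWth; this proportionality constant is an assumption chosen so that the average matches the intended update, and the symbol ΔW_written is introduced here only for illustration.

```latex
p = \frac{|\Delta W|}{\Delta W_{th}}, \qquad |\Delta W| < \Delta W_{th}

\mathbb{E}\left[\Delta W_{\text{written}}\right]
  = p \cdot \operatorname{sign}(\Delta W)\,\Delta W_{th} + (1 - p) \cdot 0
  = \operatorname{sign}(\Delta W)\,|\Delta W|
  = \Delta W
```

Under the same assumption, a given weight is written with probability p at each step, so the smaller the requested updates are relative to ΔWth, the sparser the writes become.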

The research team rigorously validated the EaPU method through a combination of physical hardware experiments and large-scale simulations.