The relentless computational appetite of modern artificial intelligence has pushed conventional silicon technology toward its practical limits. The resulting energy and performance pressures may ultimately require a fundamental shift in computing architecture. All-optical AI hardware represents one such shift, promising to ease the energy and speed limitations of traditional electronic chips. This review traces the evolution of this technology and focuses on the LightGen chip: its key architectural features, its reported performance, and its potential impact on generative AI. The aim is to give a clear picture of what the technology can do today and how it might develop.

The Dawn of Optical AI: Moving Beyond Silicon Limits

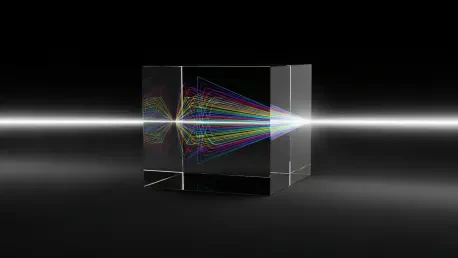

The core principle behind optical AI hardware is replacing electrons with photons for computational tasks. Photons, the fundamental particles of light, propagate at the speed of light in their medium. They can carry and combine signals with far less resistive heating than the electrical currents that flow through today's silicon transistors. This theoretical advantage has long been recognized, but necessity is now driving its practical application: conventional computing is on an increasingly unsustainable trajectory.

Large-scale AI models, such as those powering generative applications like ChatGPT and Stable Diffusion, place an enormous strain on existing infrastructure. Their training and operation demand massive data centers that consume megawatts of power, contributing significantly to operational costs and environmental concerns. As these models grow in complexity, the performance bottlenecks and energy requirements of silicon-based chips become ever more pronounced, hindering further progress. Consequently, optical computing has moved from a theoretical ideal to a critical pathway for developing faster, more efficient, and ultimately sustainable artificial intelligence.

The LightGen Chip Architecture and Innovation

The LightGen chip, developed through a collaboration between Shanghai Jiao Tong University and Tsinghua University, represents a landmark achievement in this field. Its design is not merely an optical version of an electronic chip but a fundamental rethinking of how AI computation can be performed. It moves away from the limitations of both conventional electronics and previous attempts at photonic computing, introducing an architecture tailored specifically for the demands of modern AI.

A Novel All-Optical Neural Network

At the heart of LightGen’s design is an intricate, all-optical neural network composed of more than two million specialized photonic “neurons.” These components are engineered to process information in a manner loosely analogous to the human brain, enabling massively parallel processing. This contrasts with traditional silicon chips. There, transistors act as on-off switches, and every computation is carried out as a sequence of clocked digital operations.

This architectural difference is profound. Even a highly parallel GPU must break each neural-network layer into a very large number of individual multiply-accumulate operations executed over successive clock cycles. LightGen’s photonic neurons, by contrast, handle vast, simultaneous streams of information as light passes through the chip. This parallel capability is exceptionally well suited to the multi-layered, densely interconnected data structures inherent in deep learning and generative AI. Because it emulates the brain’s capacity to process large amounts of information at once, the chip can tackle complex problems with far greater speed.
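To make the contrast concrete, the sketch below models one optical layer in an idealized way. A complex transmission matrix `W` acts on an input light field `x`, and photodetectors read out the intensities |Wx|². This is a common abstraction of photonic matrix multiplication, assumed here for illustration. It is not LightGen's published design, and the function name `optical_layer` is hypothetical. The explicit loop counts the multiply-accumulate (MAC) operations an electronic processor would execute to compute the same layer. An ideal optical layer forms all of these products at once as light propagates.

```python
import math

# Idealized, hypothetical model of one optical layer (an assumption, not
# LightGen's actual design): a complex transmission matrix W acts on an
# input field vector x, and photodetectors measure intensities |W x|^2.

def optical_layer(W, x):
    """Return detected intensities and the number of multiply-accumulates
    a digital processor would need to compute the same layer."""
    macs = 0
    intensities = []
    for row in W:
        field = 0j
        for w, xi in zip(row, x):
            field += w * xi  # one multiply-accumulate per matrix entry
            macs += 1
        intensities.append(abs(field) ** 2)  # detectors see intensity, not phase
    return intensities, macs


# Usage: a lossless (unitary) 2x2 transform, similar to a 50:50 beam splitter.
s = 1 / math.sqrt(2)
W = [[s, s], [s, -s]]
intensities, macs = optical_layer(W, [1, 0])
print(macs)  # → 4
```

For a layer with 1,000 inputs and 1,000 outputs, the same count is 1,000 × 1,000 = one million MACs per input vector. A digital chip must schedule each of these operations on its arithmetic units, while the idealized optical layer computes them in a single pass of light.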

The Leap to Three-Dimensional Processing

Perhaps the most significant architectural breakthrough of the LightGen chip is its three-dimensional structure. Earlier generations of photonic chips were designed on a two-dimensional plane, which imposed critical limitations on their ability to process complex, high-dimensional data. When tasked with analyzing a high-resolution image, for example, a 2D chip would have to resort to a piecemeal strategy, breaking the image into smaller patches and processing each one individually.

This fragmentation is inherently inefficient and often leads to a loss of coherence in the final output, because the AI struggles to reassemble the processed fragments into a unified whole. LightGen’s 3D architecture, which stacks layers of photonic neurons, is designed to overcome this challenge. It allows the chip to process an entire image, a 3D scene, or a video frame as a single, holistic entity, which improves both efficiency and the quality and realism of the generated output.
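The fragmentation problem can be illustrated with a toy experiment. It is assumed here purely for illustration and is not modeled on any particular 2D photonic chip. Take a smooth brightness ramp and process it in independent 4 × 4 patches, normalizing each patch using only its own pixels. Then compare the result with normalizing the whole image at once. All function names are hypothetical.

```python
# Toy illustration (an assumption, not a model of any specific photonic chip):
# patch-by-patch processing, where each patch only "sees" its own pixels,
# versus processing the whole image at once.

def make_gradient(h, w):
    """A smooth horizontal ramp: each pixel's value equals its column index."""
    return [[float(c) for c in range(w)] for _ in range(h)]

def normalize(block):
    """Subtract the block's mean from every pixel in it."""
    flat = [v for row in block for v in row]
    mean = sum(flat) / len(flat)
    return [[v - mean for v in row] for row in block]

def normalize_by_patches(img, p):
    """Normalize each non-overlapping p x p patch independently."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for r0 in range(0, h, p):
        for c0 in range(0, w, p):
            patch = [row[c0:c0 + p] for row in img[r0:r0 + p]]
            for dr, row in enumerate(normalize(patch)):
                out[r0 + dr][c0:c0 + len(row)] = row
    return out

def max_horizontal_jump(img):
    """Largest absolute difference between horizontally adjacent pixels."""
    return max(abs(row[c + 1] - row[c]) for row in img for c in range(len(row) - 1))


img = make_gradient(8, 8)
print(max_horizontal_jump(normalize(img)))                # → 1.0 (whole image)
print(max_horizontal_jump(normalize_by_patches(img, 4)))  # → 3.0 (4 x 4 patches)
```

Normalizing the whole image preserves the smooth ramp, so every neighboring step stays 1.0. The patch-by-patch version introduces a jump of 3.0 at the patch boundary. That visible seam is exactly the kind of artifact that makes reassembled fragments look incoherent.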

Performance Benchmarks and Breakthroughs

Empirical testing has confirmed the theoretical advantages of LightGen’s design, revealing performance gains that are not incremental but transformative. In a series of rigorous benchmarks, the prototype was pitted against state-of-the-art electronic hardware in computationally intensive tasks, with the results signaling a new frontier for AI processing power.

Unprecedented Speed and Power Density

In direct comparisons with the NVIDIA A100, a top-tier GPU widely considered a workhorse for high-performance AI, LightGen demonstrated a staggering 100-fold increase in processing speed. This acceleration means that tasks that would take hours on conventional hardware could potentially be completed in minutes.

Moreover, this speed did not come at the cost of size. The chip also achieved a 100-fold increase in computing power density, signifying its ability to pack significantly more computational capability into the same physical footprint as its electronic counterparts. This combination of speed and density opens the door for more powerful and compact AI systems across a range of applications.
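The two ratios in this subsection can be stated explicitly. The definitions below follow the conventional way such figures are framed. They are assumed here because the benchmark details are not given. The 3-hour job is an illustrative example, not a reported measurement.

```latex
S = \frac{t_{\text{A100}}}{t_{\text{LightGen}}} \approx 100
\quad\Rightarrow\quad
t_{\text{LightGen}} \approx \frac{3\ \text{h}}{100} = \frac{180\ \text{min}}{100} = 1.8\ \text{min}

\rho = \frac{\text{operations per second}}{\text{chip area}},
\qquad
\frac{\rho_{\text{LightGen}}}{\rho_{\text{A100}}} \approx 100
```

A speed-up describes how fast a given task finishes. A density gain describes how much throughput fits into a given footprint. The two are independent, which is why the text reports them separately.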

Revolutionizing Energy Efficiency

Alongside its speed, LightGen’s most critical advantage lies in its energy savings. The optical chip used roughly one-hundredth of the energy of the A100 GPU to perform equivalent tasks. This dramatic reduction in energy consumption directly addresses one of the most pressing challenges facing the AI industry today.
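When two chips perform the same task, the most meaningful quantity is energy per task, which combines average power draw and runtime. The relation below is basic physics. The subscripts are illustrative labels for an optical and an electronic chip, not measured values.

```latex
E_{\text{task}} = \bar{P}\, t_{\text{task}}
\qquad\Rightarrow\qquad
\frac{E_{\text{opt}}}{E_{\text{elec}}}
= \frac{\bar{P}_{\text{opt}}}{\bar{P}_{\text{elec}}}\cdot\frac{t_{\text{opt}}}{t_{\text{elec}}}
```

A lower energy per task can therefore come from lower power, shorter runtime, or both. For this reason, "less power" and "less energy" are not interchangeable figures when runtimes differ.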

The massive power draw of data centers running AI models is a major economic and environmental concern. By offering a path to dramatically lower energy use, optical hardware like LightGen positions itself as a cornerstone technology for sustainable AI. This efficiency could make large-scale AI more accessible and economically viable while mitigating its carbon footprint.

Real-World Applications and Generative Capabilities

The practical potential of the LightGen chip has been demonstrated through its proficiency in some of the most demanding generative AI tasks. Its performance suggests it could not only accelerate existing applications but also enable new possibilities across various industries.

High-Fidelity Image and Video Generation

During testing, LightGen was successfully deployed to generate complex, high-resolution images of subjects like animals and landscapes, as well as short, high-definition videos. The quality of this output was rigorously assessed and found to be on par with, and in some instances superior to, the results produced by leading generative AI systems such as Stable Diffusion and StyleGAN.

These results indicate that the chip’s theoretical advantages in holistic, 3D processing translate into tangible improvements in output quality. Its ability to handle complex visual data without fragmentation leads to more coherent and detailed generative results, showcasing its immediate relevance for creative and content-generation industries.

Powering Large-Scale AI Models

The broader implications of LightGen extend across the AI ecosystem. Its combination of speed and efficiency could ease the hardware constraints that currently limit the size and complexity of large-scale models. More capable foundation models, such as those behind ChatGPT, might then be developed and run more economically.

By drastically lowering the computational barriers, this technology could democratize access to high-performance AI, allowing smaller research teams and companies to innovate without needing access to vast, power-hungry supercomputers. This could foster a new wave of innovation and accelerate the deployment of advanced AI solutions in science, medicine, and beyond.

Current Challenges and Future Development

Despite its groundbreaking performance, it is crucial to recognize that optical AI hardware is still in its early stages. The journey from a successful research prototype to widespread commercial adoption is fraught with challenges that require significant investment and engineering effort.

From Prototype to Production

Currently, the LightGen chip exists as a proof-of-concept prototype. Translating this success into a mass-produced commercial product involves overcoming substantial technical and manufacturing hurdles. New fabrication techniques will need to be perfected, and standards must be developed to ensure reliability and scalability.

Furthermore, integrating a completely new type of hardware into the existing computing ecosystem presents its own set of challenges. Software stacks, programming languages, and system architectures will all need to be adapted to leverage the unique capabilities of optical processors, a process that will take time and collaboration across the industry.

Scaling for Next-Generation AI

The immediate roadmap for LightGen’s development is focused on refinement and scaling. The research team is actively working on enhancing the chip’s architecture to handle even larger and more sophisticated AI models, ensuring that the hardware’s capabilities continue to evolve in lockstep with the rapid advancements in AI algorithms.

This ongoing research is vital for pushing the boundaries of what is possible with optical computing. The goal is to create a platform that not only meets the needs of today’s most advanced AI but is also prepared to power the next generation of artificial intelligence, which promises to be even more complex and data-intensive.

The Future of AI: A Photonic Horizon

The emergence of high-performance optical AI hardware like LightGen signals more than just an improvement in computing; it represents a potential paradigm shift for the entire industry. The transition from electronics to photonics as the primary medium for computation could redefine the limits of what is technologically achievable.

This shift promises a future of sustainable, high-performance artificial intelligence beyond what silicon-based technology can currently deliver. Future breakthroughs in drug discovery, climate modeling, and autonomous systems that require enormous computational power may become feasible. This photonic horizon points toward a future where AI’s exponential growth is no longer tethered to an unsustainable rise in energy consumption.

Conclusion: A New Era for AI Hardware

This review examined the development of the LightGen optical AI chip and its implications for the future of computing. It highlighted how the chip’s all-optical, three-dimensional architecture is designed to emulate the parallel processing of the human brain, overcoming limitations of both conventional silicon and earlier photonic designs.

The reported results underscore the technology’s transformative potential. Compared with elite electronic hardware, the chip demonstrated roughly hundredfold (two orders of magnitude) improvements in processing speed, computing power density, and energy efficiency. The successful development and testing of LightGen address key questions about the viability of optical computing for complex AI tasks and point toward a new, more sustainable era for AI hardware.