A groundbreaking control technique for Large Language Models is poised to reshape the field of artificial intelligence, dismantling the immense financial and computational barriers that have long stifled progress in understanding how these complex systems operate. Researchers from Manchester have

The once-clear boundary separating human authorship from machine-generated text has dissolved into a pervasive ambiguity, presenting one of the most significant trust challenges of our time. This escalating difficulty in determining content provenance is no longer a niche academic concern; it has

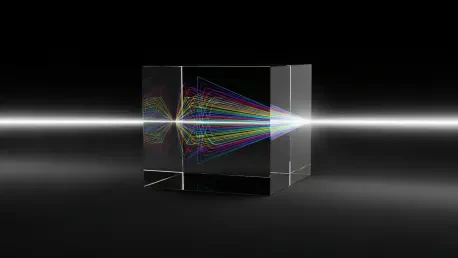

The relentless computational appetite of modern artificial intelligence has pushed conventional silicon technology to its absolute limits, creating an energy and performance crisis that only a fundamental shift in computing architecture can resolve. The development of all-optical AI hardware

A devastating parasitic disease known as "white spot" has long plagued marine aquaculture, causing massive fish kills and inflicting severe economic damage on an industry vital to global food security. Caused by the parasite *Cryptocaryon irritans*, the infection spreads with alarming speed, and by

The rapid proliferation of large language models from theoretical research constructs into indispensable, widely deployed tools has been driven by a confluence of breakthroughs in their foundational architecture, training methodologies, and operational scale. These sophisticated artificial

The initial surge of excitement surrounding Generative AI has given way to a more pragmatic and pressing challenge for businesses: how to convert the technology's vast potential into tangible, sustainable growth. Many organizations, after early experimentation, find themselves with a collection of