The seemingly effortless intelligence of modern AI, from generating text to identifying diseases, conceals a colossal and unsustainable energy demand that threatens to stall the technology’s progress before it reaches its full potential. A single complex query to a large language model can consume a startling amount of electricity, but the true energy burden lies in the initial training process, a phase whose power draw can rival that of a small city. This barrier has economic as well as environmental consequences, limiting the development of cutting-edge AI to a handful of corporations with the resources to pay the astronomical energy bills.

A Future Where AI Training Uses Up to 1,000 Times Less Energy

AI’s energy consumption has become one of the most significant hurdles in the field, and it calls for a fundamental re-imagining of the hardware that powers it. Simply building larger data centers filled with more powerful digital chips is not a long-term solution. Instead, a recent breakthrough from a collaborative team of researchers offers a viable alternative that could cut the energy required to train sophisticated AI models by a factor of up to 1,000, promising a more sustainable and accessible future for artificial intelligence.

This innovation hinges on a new method that tames the historically problematic nature of analog computing for the delicate task of AI training. By developing a training algorithm that compensates for the physical imperfections of analog chips instead of fighting them, the researchers have shown that analog hardware can match the accuracy of digital systems at a fraction of the energy cost. The result is not merely an incremental improvement; it is a potential paradigm shift that could alter the economics, accessibility, and applications of AI technology across countless industries.

The Unsustainable Appetite of Digital Intelligence

The exponential growth of AI models has led to a corresponding explosion in their energy and resource requirements, creating what many experts see as an environmental and economic wall. The training of a single state-of-the-art AI model can emit as much carbon as hundreds of transatlantic flights, and the operational costs of running these models in massive data centers are staggering. This high cost of entry consolidates power, stifling innovation by making it prohibitively expensive for startups, academic institutions, and smaller companies to compete at the forefront of AI development.

At the heart of this inefficiency lies a fundamental limitation of conventional digital computers known as the “von Neumann bottleneck.” Since the 1940s, computer architecture has physically separated the memory units that store data from the processing units that perform calculations. This design forces data to be shuttled back and forth between the two components, and in data-intensive workloads such as neural networks, that traffic can account for most of the energy and time a computation consumes. This constant data movement is the primary source of the heat and power drain that makes scaling AI on digital hardware so inefficient and costly.
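To make the bottleneck concrete, here is a rough word-count model of a matrix-vector product y = Wx, the core operation of a neural-network layer. It is a sketch under simplifying assumptions: on the von Neumann side every weight is fetched from memory once per batch and caches or other reuse are ignored; on the in-memory side the weights are assumed to stay put, so only the input and output vectors move. Both function names are hypothetical, and the point is the scaling rather than exact figures.

```python
def words_moved_von_neumann(rows: int, cols: int, batch: int = 1) -> int:
    """Words crossing the memory bus for `batch` products y = W @ x.

    Simplified model (an assumption): every weight of the rows x cols
    matrix W is fetched once per batch, and each input and output vector
    is moved once.
    """
    weights = rows * cols
    vectors = batch * (rows + cols)
    return weights + vectors


def words_moved_in_memory(rows: int, cols: int, batch: int = 1) -> int:
    """Same workload on a hypothetical weight-stationary in-memory array:
    the weights never leave the array, so only the vectors move."""
    return batch * (rows + cols)


vn = words_moved_von_neumann(1024, 1024)
im = words_moved_in_memory(1024, 1024)
print(vn, im, vn / im)  # 1050624 2048 513.0
```

Larger batches amortize the weight fetches, which is one reason digital accelerators rely heavily on batching; in this toy model the ratio shrinks as `batch` grows but always stays above 1.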

A Paradigm Shift to Computing with Physics

In stark contrast to the digital approach, analog in-memory computing (AIMC) offers an elegant solution to the data movement problem. This technology merges data storage and computation in a single physical location, effectively eliminating the von Neumann bottleneck. Instead of representing data as discrete 0s and 1s, AIMC leverages the laws of physics, using the continuous physical properties of analog components, such as the level of electrical resistance in a memory cell, to perform operations like matrix-vector multiplication in a single parallel step with minimal energy expenditure.
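A common way to realize this is a crossbar array, in which each memory cell sits where a row wire crosses a column wire. Applying a voltage to each row makes every cell pass a current equal to its conductance (the inverse of its resistance) times that voltage, by Ohm’s law, and the currents on each column wire add up, by Kirchhoff’s current law, so the column currents form a matrix-vector product. The sketch below simulates that idealized picture under stated assumptions: conductances are non-negative (real designs typically use a pair of cells per weight to represent signs), wire resistance and converter circuitry are ignored, and the optional noise term is a crude multiplicative Gaussian stand-in for device variation rather than a calibrated model. `crossbar_matvec` is a hypothetical helper name.

```python
import random


def crossbar_matvec(conductances, voltages, read_noise=0.0, rng=None):
    """Column currents of an idealized resistive crossbar.

    conductances[i][j]: conductance in siemens of the cell joining row i and column j
    voltages[i]:        voltage in volts applied to row i
    read_noise:         relative std. dev. of an assumed Gaussian read error
    Returns one current, in amperes, per column.
    """
    if rng is None:
        rng = random.Random(0)
    n_rows, n_cols = len(conductances), len(conductances[0])
    currents = []
    for j in range(n_cols):
        # Ohm's law: each cell contributes I = G * V.
        # Kirchhoff's current law: contributions on the shared column wire add.
        total = sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        if read_noise:
            # Crude stand-in for device variation (assumption, not a device model).
            total *= 1.0 + rng.gauss(0.0, read_noise)
        currents.append(total)
    return currents


G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]  # microsiemens-scale conductances
V = [0.1, 0.2]      # row voltages
print(crossbar_matvec(G, V))                   # ideal: approximately 7e-07 A and 1e-06 A
print(crossbar_matvec(G, V, read_noise=0.05))  # same product, slightly perturbed
```

The noisy call hints at the problem the rest of this section turns to: the physics computes the product essentially for free, but never exactly.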

Despite this immense potential for energy efficiency, analog computing has long been dismissed as unsuitable for the rigorous demands of AI model training. The core issue is the inherent imperfection of analog hardware. Unlike the precise, predictable world of digital bits, analog systems are susceptible to device-to-device variation, background noise, and non-linear behaviors that corrupt calculations. During training, an algorithm called backpropagation computes how each of a model’s millions of parameters should change, and those parameters are then nudged by tiny, precise amounts over many iterations. On analog hardware, the device flaws introduce errors into these updates that compound over time, a phenomenon known as “degraded learning” that prevents the model from ever reaching high levels of accuracy.
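A toy simulation shows one way an imperfect device can bias learning even when every computed gradient is correct on average. It assumes a single weight trained by noisy stochastic gradient descent on the loss (w - 0.6)²/2, stored on a hypothetical “soft-bounds” device whose upward steps shrink, and whose downward steps grow, as the stored value rises. This is a simplified form of the asymmetric response often used to model resistive memory; the names and constants are illustrative assumptions, not measurements.

```python
import random


def device_update(w, requested, w_max=1.0):
    """Apply a requested change to a weight held on a hypothetical analog device.

    Assumed 'soft-bounds' response: upward steps shrink as w approaches +w_max
    and downward steps shrink as w approaches -w_max, so for w > 0 a down step
    is larger than an up step of the same requested size.
    """
    if requested > 0:
        return w + requested * (1.0 - w / w_max)
    return w + requested * (1.0 + w / w_max)


def train_toy(target=0.6, lr=0.01, noise=0.5, steps=20000, analog=True, seed=0):
    """Noisy SGD on the toy loss (w - target)**2 / 2.

    Returns the average weight over the second half of training, i.e. roughly
    where the weight settles once the start-up transient has died out.
    """
    rng = random.Random(seed)
    w, history = 0.0, []
    for _ in range(steps):
        grad = (w - target) + rng.gauss(0.0, noise)  # unbiased but noisy gradient
        step = -lr * grad
        w = device_update(w, step) if analog else w + step
        history.append(w)
    tail = history[steps // 2:]
    return sum(tail) / len(tail)


print(f"ideal digital updates: {train_toy(analog=False):.3f}")
print(f"asymmetric analog updates: {train_toy(analog=True):.3f}")
```

With ideal updates the average weight settles near the target; with the asymmetric device it settles noticeably lower, because equal and opposite noise-driven steps no longer cancel. In this model, lowering the learning rate does not remove the offset, since it is set by the noise level rather than the step size.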

The breakthrough that overcomes this long-standing obstacle is a novel training algorithm named “Residual Learning.” Developed by researchers at Cornell Tech in collaboration with IBM and Rensselaer Polytechnic Institute, this method acts as an intelligent layer between the AI model and the imperfect analog hardware. Rather than ignoring the hardware’s flaws, the algorithm monitors the deviations and distortions occurring in the physical system in real time. It then calculates and applies compensating corrections to the learning updates, counteracting the effects of the analog noise and keeping the training process stable and unbiased.
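The article does not spell out how Residual Learning computes its corrections, so the sketch below does not reproduce it. Instead, it illustrates the general principle of software compensating for measured hardware deviations with a simpler, well-known technique, error feedback: after each update the weight is read back, and the difference between the requested and the realized change is kept digitally and added to the next request. It assumes a hypothetical asymmetric “soft-bounds” device and a toy one-weight problem with loss (w - 0.6)²/2; every name and constant here is illustrative.

```python
import random


def device_update(w, requested, w_max=1.0):
    """Hypothetical asymmetric 'soft-bounds' device (an assumed model):
    for w > 0, upward steps are realized smaller than requested and
    downward steps larger."""
    if requested > 0:
        return w + requested * (1.0 - w / w_max)
    return w + requested * (1.0 + w / w_max)


def train_with_error_feedback(target=0.6, lr=0.01, noise=0.5, steps=20000, seed=0):
    """Noisy SGD on (w - target)**2 / 2 with a digital residual buffer.

    After each pulse the weight is read back, and the gap between the change
    that was requested and the change the device actually made is carried
    into the next request. Returns the mean weight over the second half.
    """
    rng = random.Random(seed)
    w, residual, history = 0.0, 0.0, []
    for _ in range(steps):
        grad = (w - target) + rng.gauss(0.0, noise)  # unbiased but noisy gradient
        intended = -lr * grad
        request = intended + residual         # re-apply the previous shortfall
        new_w = device_update(w, request)
        residual = request - (new_w - w)      # measured deviation, kept digitally
        w = new_w
        history.append(w)
    tail = history[steps // 2:]
    return sum(tail) / len(tail)


print(f"asymmetric device with error feedback: {train_with_error_feedback():.3f}")
```

Because the realized changes add up to the requested ones apart from a small, bounded residual, the drift that an uncompensated asymmetric device accumulates cancels out, and the average weight settles near the target. Real hardware is harder: reading weights back costs time and energy, and the reads are themselves noisy.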

From a Theoretical Hurdle to a Practical Reality

The research team validated the Residual Learning algorithm by training several types of neural networks on their analog platform. According to the researchers, the models trained on the analog hardware reached an accuracy on par with those trained on conventional, high-precision digital systems. They describe this as the first time analog hardware has been used to train an AI model to a competitive standard of performance, moving AIMC from a promising concept for simple tasks toward a viable platform for developing complex, state-of-the-art AI.

This research effectively reframes the relationship between software and hardware in AI development. As the study’s lead, Professor Tianyi Chen, explains, the method allows the software to become aware of the hardware’s physical limitations and actively compensate for them. This symbiotic approach makes the hardware’s inherent flaws largely irrelevant to the final outcome of training. The result combines the best of both worlds: the raw computational speed and energy efficiency of analog computing with the precision and accuracy previously achievable only through purely digital means.

The Dawn of Practical AI from Data Centers to Edge Devices

By dramatically lowering the energy and financial costs of training large AI models, this technological advance could democratize the field of artificial intelligence. A lower barrier to entry would let a wider range of innovators, from academic researchers and startups to businesses in developing nations, create and experiment with powerful AI systems. That could significantly accelerate AI-driven innovation across many sectors, as the ability to develop sophisticated models would no longer be confined to a few tech giants.

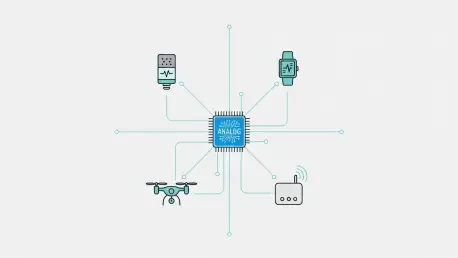

Furthermore, the extreme energy efficiency of analog training unlocks a vast new frontier of applications at the network’s edge. Currently, complex AI functions are impractical for battery-powered devices due to the prohibitive power draw of digital chips. This breakthrough paves the way for advanced, on-device intelligence in a host of new products, including smart medical sensors that can diagnose conditions in real time, next-generation wearable technology, fully autonomous robots that can learn and adapt in the field, and intelligent Internet of Things (IoT) devices for industrial and home use where power efficiency is paramount.

The development of this analog-aware training method represents a pivotal step toward resolving the AI sustainability crisis. The research team’s planned next steps, which include adapting the algorithm for major open-source models and pursuing industry collaborations, signal a clear path from the laboratory to real-world deployment. This work does not just solve a technical problem; it opens the door to a fundamental shift in how artificial intelligence is developed, deployed, and ultimately used across society.