The almost subconscious act of rereading a line of text, triggered by the quiet realization that the words have passed by without leaving any meaning, is a distinctly human experience. This internal check, a moment of “thinking about thinking,” is known as metacognition. For modern artificial intelligence, which can generate vast amounts of complex information but remains fundamentally unaware of its own reasoning process, the absence of this capability represents a critical vulnerability. As AI is increasingly integrated into high-stakes applications, this lack of self-assessment poses significant risks. This analysis explores the emerging trend of artificial metacognition, examining the mechanisms driving its development, its real-world implications, and the future trajectory of AI that can know what it does not know.

The Rise and Application of Self-Correcting AI

The push for AI systems that can self-regulate is not merely an academic exercise; it is a direct response to the growing pains of deploying powerful but fallible technology. As AI models become more integrated into society, their failures become more visible and consequential, creating a powerful demand for systems that are not just capable, but also reliable and transparent.

Charting the Growth of Reflective Systems

The demand for more dependable AI has intensified following a series of high-profile failures and the technology’s rapid expansion into critical sectors. Fields such as medicine, finance, and autonomous systems cannot tolerate the overconfident errors that have characterized some generative models. An AI that offers a flawed medical diagnosis or a faulty financial projection without any sense of its own uncertainty is a liability, not an asset. This market pressure is driving a significant shift in AI development.

Consequently, a clear trend is emerging within the AI research community: a move beyond optimizing for raw performance and toward addressing the fundamental limitations of AI’s self-awareness. The focus is no longer just on what an AI can do, but on how it does it and how well it understands its own process. The development of frameworks like artificial metacognition is a key indicator of this evolution, signaling a maturation of the field from building powerful engines to engineering responsible and trustworthy systems.

Real-World Mechanics of an AI That Thinks Twice

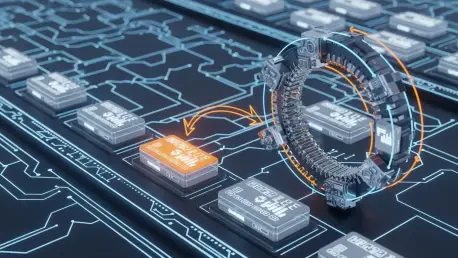

At the heart of this trend is a concrete engineering solution designed to give AI a proxy for self-reflection. This is achieved through a novel framework centered on a “metacognitive state vector,” a quantitative measure of an AI’s internal state that translates abstract assessments into actionable data. This vector provides the system with a multi-faceted view of its own cognitive process.

This vector is composed of five core dimensions that function like internal sensors. Emotional Awareness tracks emotionally charged content so that sensitive topics are handled appropriately. Correctness Evaluation acts as a confidence meter, gauging the AI’s certainty in the factual accuracy of its output. Experience Matching assesses how familiar a new problem is by comparing it with what the system encountered in training, while Conflict Detection scans for internal contradictions in the information being processed. Finally, Problem Importance allows the AI to weigh the stakes of a task, dedicating more resources to critical decisions.
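
To make the idea concrete, the five dimensions can be pictured as a small data structure. The sketch below is illustrative only: the class name `MetacognitiveState`, the field names, and the assumption that each signal is normalized to the range 0 to 1 are not taken from the framework itself, which may represent these values differently.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class MetacognitiveState:
    """Hypothetical container for the five signals, each assumed to lie in [0, 1]."""

    emotional_awareness: float     # how emotionally charged the content is
    correctness_evaluation: float  # confidence in the factual accuracy of the output
    experience_matching: float     # familiarity of the problem relative to training
    conflict_detection: float      # degree of internal contradiction detected
    problem_importance: float      # stakes of the task

    def __post_init__(self):
        # Reject values outside the assumed [0, 1] range.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{f.name} must be in [0, 1], got {value}")

    def as_vector(self) -> list[float]:
        # Return the signals in declaration order as a plain list.
        return [getattr(self, f.name) for f in fields(self)]


# A calm, familiar, low-stakes query with high confidence and little conflict.
state = MetacognitiveState(0.1, 0.9, 0.8, 0.05, 0.3)
print(state.as_vector())
```

Keeping the signals in one immutable object means downstream control logic can read them without being able to alter the assessment it is acting on.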

These dimensions collectively inform a control system that functions much like an orchestra conductor. Using the data from the vector, the system can dynamically switch an ensemble of AI models between two processing modes, analogous to human System 1 (fast, intuitive) and System 2 (slow, deliberative) thinking. For a simple, familiar query where the vector signals high confidence and low conflict, the AI responds quickly. However, if the vector detects a novel, important, or contradictory problem, it shifts into a deliberative mode where different models take on roles like generator, critic, or expert to reason through the problem more carefully.
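
The switching behavior described above can be sketched in a few lines. Everything here is an assumption made for illustration: the threshold values, the `choose_mode` and `answer` functions, and the toy generator and critic are hypothetical stand-ins, and the expert role and the emotional-awareness signal are left out to keep the example short.

```python
from typing import Callable, NamedTuple, Optional


class State(NamedTuple):
    """Hypothetical subset of the state vector, each value assumed to be in [0, 1]."""
    correctness: float  # confidence in the answer
    experience: float   # familiarity of the problem
    conflict: float     # internal contradiction
    importance: float   # stakes of the task


def choose_mode(s: State) -> str:
    # Illustrative thresholds; a real system would need to tune or learn these.
    fast_ok = (
        s.correctness >= 0.8
        and s.experience >= 0.6
        and s.conflict <= 0.2
        and s.importance <= 0.7
    )
    return "fast" if fast_ok else "deliberative"


Generator = Callable[[str, Optional[str]], str]
Critic = Callable[[str, str], Optional[str]]


def answer(query: str, s: State, generator: Generator, critic: Critic,
           max_rounds: int = 3) -> str:
    draft = generator(query, None)
    if choose_mode(s) == "fast":
        return draft  # System 1: return the first response
    for _ in range(max_rounds):  # System 2: critique and revise
        feedback = critic(query, draft)
        if feedback is None:  # the critic has no further objections
            break
        draft = generator(query, feedback)
    return draft


# Toy stand-ins for real models.
def toy_generator(query: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return f"answer to {query!r}"
    return f"revised answer to {query!r} ({feedback})"


def toy_critic(query: str, draft: str) -> Optional[str]:
    return None if draft.startswith("revised") else "cite evidence"


easy = State(correctness=0.95, experience=0.9, conflict=0.05, importance=0.2)
hard = State(correctness=0.4, experience=0.3, conflict=0.6, importance=0.9)
print(answer("2+2", easy, toy_generator, toy_critic))
print(answer("rare diagnosis", hard, toy_generator, toy_critic))
```

In this sketch the fast path requires every signal to look safe, so a single warning sign is enough to trigger deliberation. That conservative rule is a design choice of the example, not a documented property of the framework.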

Expert Insights on the Necessity of Self-Aware AI

Researchers at the forefront of this trend emphasize that modern generative AI operates under a significant paradox. These systems possess extraordinary capabilities for language generation and pattern recognition, yet they operate without any mechanism to evaluate the quality of their own output or the confidence they should have in it. This makes them powerful but brittle tools.

The objective of artificial metacognition, experts clarify, is not to create consciousness or sentience in machines. Rather, the goal is to build a practical, computational architecture that improves resource allocation, response quality, and overall system safety. It is an engineering solution to an engineering problem: how to make an inherently unaware system behave in a more measured, reflective, and reliable manner.

This trend is seen as crucial for fostering trust and accountability in AI systems. A metacognitive AI can do more than just provide an answer; it can explain its confidence level, flag internal uncertainties, and justify why it chose a more deliberative approach. Most importantly, this capability allows an AI to know when its own analysis is insufficient and it is time to defer to human judgment, transforming it from an opaque oracle into a transparent and accountable partner.
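
One way to picture such an accountable response is as a structured report rather than a bare answer. The following is a minimal sketch under stated assumptions: the `Report` type, the `build_report` function, and all cutoff values are hypothetical and chosen only to illustrate flagging uncertainty and deferring to a human.

```python
from dataclasses import dataclass, field


@dataclass
class Report:
    answer: str
    confidence: float
    flags: list[str] = field(default_factory=list)
    defer_to_human: bool = False


def build_report(answer: str, confidence: float, conflict: float,
                 importance: float) -> Report:
    # Cutoffs below are illustrative assumptions, not values from the framework.
    flags = []
    if confidence < 0.6:
        flags.append("low confidence")
    if conflict > 0.3:
        flags.append("conflicting evidence")
    # Defer only when something is flagged and the stakes are high.
    defer = bool(flags) and importance >= 0.7
    return Report(answer, confidence, flags, defer)


report = build_report("possible rare condition", confidence=0.4,
                      conflict=0.5, importance=0.9)
print(report.flags, report.defer_to_human)
```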

The Road Ahead: Potential and Pitfalls

The future applications of artificial metacognition promise to enhance safety and effectiveness across numerous domains. In healthcare, a diagnostic tool could recognize that a patient’s symptoms do not fit any typical patterns and alert a human doctor to a potentially rare condition. Adaptive educational software could sense a student’s confusion by detecting conflicting inputs and adjust its teaching strategy in real time. In finance, models could flag their own uncertainty when market data is unprecedented, preventing automated systems from making catastrophic trades.

Beyond these immediate applications, this trend has the potential to evolve into what researchers call “metareasoning,” the ability of an AI to reason about its own reasoning processes. This would represent a significant leap forward, allowing systems not only to identify a problem but also to analyze why their initial approach failed and devise a better strategy. Such a capability would lead to far more robust, adaptable, and sophisticated AI systems.

However, the path forward is not without its challenges. The deliberative “System 2” processing required for metacognition is computationally expensive, which could limit its application. Validating the effectiveness of these complex systems across a wide range of diverse tasks will be a significant undertaking. Furthermore, there is a societal risk of misinterpreting these advanced self-regulatory capabilities as genuine self-awareness or consciousness, which could lead to misplaced trust and ethical complications.

Conclusion: Toward a Wiser Artificial Intelligence

The emergence of artificial metacognition signals a pivotal shift in the trajectory of AI development. It marks a move away from the singular pursuit of raw computational power and toward the cultivation of systems capable of self-assessment and reflection. The development of a practical framework, built around concepts like the metacognitive state vector, provides a tangible path to creating AI that is not only more capable but also safer and more reliable.

This trend is foundational for the responsible and effective deployment of advanced AI in society. By enabling an AI to recognize its own cognitive limits, artificial metacognition helps bridge the gap between human trust and machine capability. The vision guiding this work is not simply to create a more powerful tool, but to develop a reliable partner that understands its own strengths and, crucially, knows when to ask for help.