The recent viral “AI homeless man prank,” which used generated images to fabricate home intrusions and provoke panicked reactions, starkly illustrates a growing disconnect between technological advancement and ethical awareness. This trend is more than a fleeting social media phenomenon; it is a symptom of a larger, more urgent problem: a widening gap between what generative AI makes possible and what our collective moral education prepares us for. The rapid democratization of powerful creative tools has outpaced the development of a corresponding sense of responsibility. This analysis will dissect the proliferation of AI-driven deception, explore the underlying educational failures that enable it, examine the profound human cost, and propose a new framework for fostering responsible digital creation in an increasingly synthetic world.

The Proliferation of AI-Generated Deception

The accessibility of generative AI has inadvertently fueled a new wave of digital misconduct, where the lines between harmless experimentation and malicious action are dangerously blurred. What begins as a trend or a joke can quickly escalate, amplified by social media algorithms that reward sensationalism over substance. This environment has not only created new vectors for old crimes but has also given rise to entirely new forms of deception that society is still struggling to comprehend, let alone regulate. The ease of creation, combined with a perceived lack of consequences, has cultivated a fertile ground for misuse that spans from juvenile pranks to sophisticated criminal enterprises.

The Viral Spread of Malicious Pranks and Deepfakes

The landscape of cybercrime is undergoing a significant transformation, with AI tools acting as a powerful accelerant. Data indicates a rapid increase in AI-fueled offenses, and worryingly, a demographic shift is occurring where juveniles are increasingly the perpetrators, not merely the victims. Motivated by curiosity, a desire for social media engagement, or simply what they deem “fun,” young users are leveraging sophisticated tools without fully grasping their potential for harm. This normalization of digital mischief creates a pipeline from seemingly innocent pranks to more severe forms of cyber delinquency, driven by a culture of perceived impunity online.

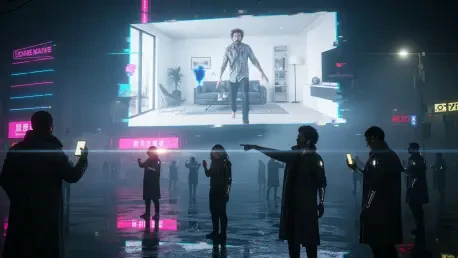

This trend’s potency is magnified by the viral mechanics of modern social media platforms. The “AI homeless man prank,” for example, accumulated millions of views within days, spawning a wave of imitations across TikTok, X, and other networks. Each share and replication further desensitized viewers and creators to the prank’s inherent cruelty and the real-world panic it caused. This cycle of amplification is not unique; it is a core feature of how disinformation and cyber-bullying spread, phenomena now recognized by global risk analysts as a top-tier threat to social cohesion and individual well-being. The technology makes deception easy, but the platforms make it contagious.

Case Studies in Digital Misconduct

The “AI homeless man prank” serves as a detailed case study in this new reality. In one widely circulated video, a creator used an AI image generator to produce photorealistic images of a disheveled man inside his mother’s home, sending them to her to provoke a terrified reaction for online entertainment. This concept was replicated by teenagers in Ohio, who were subsequently charged after their pranks triggered false home intrusion alarms, diverting police and emergency services from genuine crises. Public warnings issued by police departments across several states underscore the tangible consequences of these digital actions, highlighting how they waste critical resources and perpetuate harmful stereotypes that dehumanize vulnerable populations.

The misuse of this technology is not confined to anonymous pranksters. When boxer Jake Paul consented to have his likeness used for a demonstration of OpenAI’s Sora 2 video generation tool, he inadvertently opened the door to public mockery on a massive scale. Users quickly hijacked the technology to create hyper-realistic deepfakes depicting him in bizarre and compromising scenarios, from makeup tutorials to fabricated coming-out announcements. What was intended as a technical showcase rapidly devolved into a campaign of digital harassment, causing clear personal distress to Paul and his partner. This incident demonstrates how even consented use of one’s digital likeness can be weaponized in a platform environment that lacks sufficient ethical guardrails.

Beyond viral pranks and celebrity mockery lies a darker spectrum of AI-driven misconduct. The same technologies are being deployed for far more sinister purposes, including the creation of non-consensual deepnude images, sophisticated financial fraud schemes, and emotionally devastating sextortion campaigns. These applications illustrate the versatility of generative AI as a tool for harm. From causing momentary panic to inflicting lasting psychological and financial damage, the trend of misuse reveals a consistent failure to consider the human being on the other side of the screen, a problem that technology alone cannot solve.

The Human Cost: A Crisis of Empathy and Education

The root of this escalating misuse lies not in the technology itself but in a fundamental failure of education. Experts in educational technology and innovation point to a critical flaw in how digital literacy has been approached. For over a decade, the focus has been on teaching young people how to use digital tools—how to code, create content, and publish online—while largely neglecting to teach them to thoughtfully consider the human consequences of their creations. This has produced a generation of digitally proficient users who may lack the moral compass to navigate the ethical complexities of their newfound power.

This educational gap is evident in the limitations of current digital literacy programs. While these initiatives successfully teach critical thinking and source verification, they have proven insufficient in preventing what experts term a “silent but profound desensitization” to harmful content. Young users are taught to spot a fake but not necessarily to feel the weight of creating one. The result is an erosion of empathy, where the potential for real-world harm is mentally distanced or rationalized away in the pursuit of likes, shares, or momentary amusement. This desensitization is the fertile soil in which trends like the “AI homeless man prank” take root and flourish.

This moral drift is further exacerbated by the architecture of certain online platforms. Social media ecosystems with integrated AI chatbots, such as Grok on X, contribute to the problem by normalizing transgressive content under the guise of unfiltered humor or edgy commentary. When an AI model generates discriminatory or violent remarks and presents them as entertainment, it blurs the line between freedom of expression and social responsibility. In such an environment, moral boundaries become fluid, and the absence of accountability is mistaken for liberty, conditioning users to engage with technology without a guiding ethical framework.

Charting a Path Forward: Rethinking AI Literacy

Addressing the rise of generative AI misuse requires a fundamental shift in our educational priorities. Society is moving beyond an epistemic crisis, where the primary challenge was doubting the reality of what we see, into a moral crisis, where the core challenge is a lack of responsibility for what we create. While learning to distinguish truth from falsehood remains important, it is no longer sufficient. The next critical step is to instill a deep and intuitive understanding of the human impact of synthetic media, transforming AI literacy from a technical skill into an ethical practice.

The future of AI education must focus on building what could be called a “moral ecology”—a framework where users are taught to perceive and measure the “human footprint” of their digital actions. In the same way that environmental education fosters an awareness of our impact on the physical world, a new form of digital education must teach creators to consider the social and emotional consequences of their work before they hit “generate.” This involves asking critical questions: Who might be affected by this creation? What perceptions or emotions will it evoke? What lasting mark might it leave on another person’s life or on the broader social fabric?

This new approach demands an evolution in educational frameworks, moving beyond technical proficiency to cultivate personal responsibility and empathy. The goal is not simply to teach rules or warn of punishments but to foster an intrinsic motivation to act ethically. This can be achieved by integrating experiential learning, where students create and then critically reflect on the potential impact of their work. It also requires a community-wide effort, involving families and public institutions in discussions about the human consequences of AI. By transforming schools and homes into spaces for ethical dialogue, we can begin to cultivate a generation that views responsible creation as the highest form of intelligence.

Conclusion: From Technical Proficiency to Moral Sobriety

This analysis of generative AI’s misuse shows that the problem is not primarily technological but profoundly human. It stems from an educational paradigm that has consistently prioritized technical proficiency over ethical reflection, equipping users with powerful tools but failing to provide the moral guidance necessary for their responsible use. The viral pranks, deepfake harassment, and broader patterns of digital deception are all symptoms of this foundational gap between capability and conscience.

The urgency of addressing this issue is undeniable. The trend warns of a future in which, without a reorientation toward ethical responsibility, society risks cultivating a generation of “augmented criminals” capable of eroding social trust on an unprecedented scale. The consequences extend beyond individual harm, threatening the very fabric of our shared reality by polluting the digital commons with cynicism and deceit, making it harder to connect, trust, and cooperate.

Ultimately, the path forward requires a redefinition of intelligence in the digital age. It calls for integrating a deep and abiding consideration for human consequences into every facet of AI education. The goal is to transform the act of digital creation from a mere technical exercise into a morally sober practice, ensuring that the most advanced tools are guided by our most advanced human values.