Imagine a world where art and creativity are no longer constrained by human limitations or time. In 2025, the landscape of digital art creation has transformed dramatically, driven by AI-powered text-to-image models. Artists, designers, and content creators now have access to sophisticated tools that generate vivid images from textual descriptions alone. This industry report surveys the leading text-to-image models of 2025, the technologies behind them, and the applications they open up across sectors.

Understanding Text-to-Image AI

Text-to-image AI has made significant strides since its early days, evolving into a robust and widely used technology. The core premise is converting textual input into visual output: users generate images by describing them in words. These models can now produce photorealistic, highly detailed images, which makes them valuable across many creative and professional applications. Under the hood, neural networks interpret a textual prompt and translate it into a visual representation that reflects the prompt's nuances and context.

Leading Text-to-Image Models

Recraft V3

Recraft V3, developed by Recraft AI, stands out for its exceptional quality and accuracy. This model excels in photorealism, producing images with impeccable composition, lighting, and detail. It’s particularly favored by professionals in marketing and design, where visual fidelity is paramount. Recraft AI’s commitment to continuous updates ensures that the model maintains its cutting-edge status, offering users an always-improving tool for high-quality image generation.

FLUX1.1 [pro]

Black Forest Labs released FLUX1.1 [pro], a model known for its versatility and detail. It adapts to a wide array of styles, from photorealistic to abstract art, making it a flexible choice for diverse creative needs. It can be integrated into existing workflows through API providers such as Replicate and Together.ai, which further enhances its utility. Regular updates continue to improve its speed, quality, and overall performance.

Ideogram v2

Ideogram v2 is notable for accurately embedding legible text within images, a capability that is crucial for design and artistic work such as posters, logos, and signage. Its inpainting capabilities enable precise editing of existing images, giving designers and artists significant control over their visual work. Continuous improvements in image quality, speed, and text accuracy keep Ideogram v2 a valuable tool for producing detailed and editable visual content.

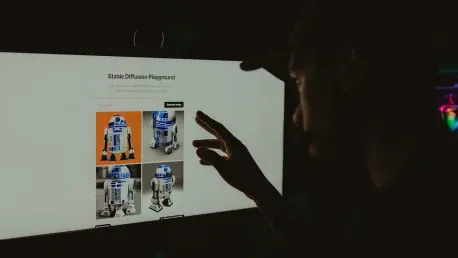

Stable Diffusion XL (SDXL)

Stable Diffusion XL, an open-source model, uses latent diffusion techniques to create high-quality images. It generates detailed visuals in both realistic and imaginative styles and runs on consumer GPUs, making it a practical option for a broad audience. Getting the most out of it, however, takes some technical expertise in AI and image processing, given its many capabilities and extensive ecosystem.
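The reason latent diffusion is practical on consumer GPUs can be shown with a little arithmetic. The sketch below compares the number of values in a full-resolution RGB image with the number in the compressed latent the diffusion process actually works on. The figures used (a 1024x1024 native resolution, an 8x spatial downsampling factor, and 4 latent channels) are the commonly documented SDXL defaults and are assumptions not stated in this report; `tensor_size` is a hypothetical helper written for illustration.

```python
def tensor_size(height, width, channels):
    """Number of values in a height x width x channels tensor."""
    return height * width * channels

# Assumed SDXL defaults (not taken from this report).
IMAGE_SIDE = 1024      # native output resolution in pixels
VAE_DOWNSAMPLE = 8     # spatial downsampling factor of the autoencoder
LATENT_CHANNELS = 4    # channels in the latent representation
RGB_CHANNELS = 3

pixel_values = tensor_size(IMAGE_SIDE, IMAGE_SIDE, RGB_CHANNELS)
latent_side = IMAGE_SIDE // VAE_DOWNSAMPLE
latent_values = tensor_size(latent_side, latent_side, LATENT_CHANNELS)
compression = pixel_values // latent_values

print(f"pixel space:  {pixel_values:,} values")
print(f"latent space: {latent_values:,} values ({latent_side}x{latent_side}x{LATENT_CHANNELS})")
print(f"the denoiser works on {compression}x fewer values")
```

Under these assumptions the denoising network operates on a tensor roughly 48 times smaller than the final image, and the autoencoder only decodes back to pixels once at the end.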

DALL-E 3

OpenAI’s DALL-E 3, launched in October 2023, builds on its predecessors’ successes. Known for its nuanced understanding of prompts, DALL-E 3 generates precise and realistic images. Its seamless integration with ChatGPT enhances creative workflows, offering a powerful tool for generating diverse visual content. The model’s emphasis on accuracy and variety makes it a preferred choice for various creative applications.

Midjourney

Midjourney has carved a niche with its ability to produce visually striking images across diverse styles. Its strong command of artistic principles allows it to interpret and execute complex prompts with high precision. Regular updates enable Midjourney to continuously expand its stylistic range and improve its capabilities, making it a go-to for artists and designers seeking high-quality, creative visual outputs.

Adobe Firefly

Adobe Firefly offers high-quality image generation from simple text prompts and is integrated directly into Adobe’s creative suite. That integration makes it especially appealing to users already familiar with Adobe’s tools, since generated images fit into their existing workflow. Firefly also provides tools for custom model training, allowing for tailored image generation, which further enhances its versatility and practicality for creative professionals.

Runway ML Gen-2

Runway ML Gen-2 pushes beyond text-to-image AI by extending generation to video. It creates high-quality short clips from text prompts and also supports image-to-video and video-to-video generation. These capabilities make it a valuable tool for filmmakers, advertisers, and other professionals seeking to incorporate dynamic visual content into their projects.

Artbreeder

Artbreeder combines AI with user creativity, fostering a collaborative community. Users can blend existing images or generate new ones from text prompts. Tools like Composer and Collager offer additional ways to experiment and create unique visual content. This community-driven approach and accessibility make Artbreeder a popular choice among artists looking to push the boundaries of their craft.

Current Industry State

The text-to-image AI industry in 2025 is characterized by rapid advancements and widespread adoption across multiple sectors. These models have become indispensable in fields like digital art, advertising, design, and more. The availability of open-source models and API integration capabilities has democratized access, enabling even smaller businesses and independent creators to leverage these powerful tools. As these models become more sophisticated, the quality, speed, and versatility of the generated images continue to improve, setting higher standards for AI-driven creativity.

Trends and Innovations

Recent innovations in AI image generation include advancements in model architecture, data utilization, and training methodologies. Transformer-based models have revolutionized text understanding and image synthesis, while diffusion models have enhanced the detail and realism of generated visuals. Attention mechanisms and multi-modal learning techniques have further refined the accuracy of these models, allowing them to produce images that closely align with intricate textual descriptions.
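To make the diffusion idea concrete, here is a minimal sketch of the forward (noising) half of a diffusion process, which is the part a model is trained to reverse. It assumes the standard DDPM-style formulation with a linear noise schedule; the schedule endpoints, step count, and function names are illustrative assumptions, and none of the products discussed in this report is confirmed to use these exact settings.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Evenly spaced noise variances from beta_start to beta_end (assumed values)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t: running product of (1 - beta_s) for all s up to t."""
    alpha_bars = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    return [signal * value + noise * rng.gauss(0.0, 1.0) for value in x0]

betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)

rng = random.Random(0)
pixels = [0.5, -0.25, 1.0, 0.0]  # toy "image" with values scaled to [-1, 1]
slightly_noisy = add_noise(pixels, 10, alpha_bars, rng)   # early step: mostly signal
mostly_noise = add_noise(pixels, 999, alpha_bars, rng)    # final step: almost pure noise

print(f"signal kept at step 10:  {math.sqrt(alpha_bars[10]):.4f}")
print(f"signal kept at step 999: {math.sqrt(alpha_bars[999]):.4f}")
```

Generation runs this process in reverse: starting from pure noise, a trained network removes a little noise at each step, with the text prompt steering what the denoised image should depict.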

Applications and Impact

Text-to-image models are employed extensively in creative industries, marketing, e-commerce, education, and entertainment. They streamline the creation of custom visuals, concept art, storyboards, and prototypes, significantly reducing time and cost. Additionally, these models improve accessibility by aiding visually impaired individuals in understanding textual content through generated images. Their versatility and efficiency empower businesses and creators to explore new avenues and experiment with innovative visual solutions.

Challenges and Ethical Considerations

Despite the advancements, developing text-to-image models presents several challenges, including generating consistently high-quality images and interpreting complex prompts accurately. Ethical considerations, such as addressing biases in generated content, preventing misuse, and ensuring transparency, are critical. High computational power requirements for advanced models also pose practical limitations for widespread use. Addressing these challenges is essential for the sustainable and responsible growth of the industry.

Future Outlook

The trends surveyed in this report suggest that text-to-image models will continue to evolve, offering increasingly sophisticated and versatile tools for visual creation. As the technology advances, the range of applications and the accessibility of these models will expand, paving the way for innovative and inclusive digital content. Future developments will likely focus on enhancing model capabilities, improving user experience, and addressing ethical concerns to ensure the responsible use of AI in visual arts.

In conclusion, the text-to-image industry in 2025 is poised for significant growth, driven by continuous advancements in AI technology. Leading models such as Recraft V3, FLUX1.1 [pro], and DALL-E 3 offer diverse and powerful tools for a wide range of creative needs, indicating a promising future for text-to-image AI. As the technology progresses, it is expected to further change the way visual content is created, making it more accessible, efficient, and innovative for a global audience.