The relentless pursuit of colossal artificial intelligence has long been governed by a simple, seemingly unbreakable rule: bigger is always better, with progress measured in the ever-escalating count of model parameters. This philosophy has driven the creation of models so vast they require immense computational resources, effectively limiting cutting-edge AI to a handful of heavily funded corporations. For years, this paradigm went largely unchallenged, as smaller models, while competent in basic tasks, consistently failed to match the complex reasoning abilities of their larger counterparts. This established a clear hierarchy where computational might directly translated to intellectual prowess.

However, a fundamental shift is underway, challenging this long-standing assumption about the nature of AI power. The emergence of a new generation of compact yet highly capable models suggests that architectural elegance and training efficiency may be more critical than sheer size. This development is not merely an academic curiosity; it has profound implications for the accessibility, cost, and future trajectory of artificial intelligence. The central question now facing the industry is whether a smarter design can truly overcome a massive deficit in scale, potentially democratizing access to high-end reasoning capabilities and redefining the competitive landscape.

The Scaling Law is Broken: How a 7-Billion-Parameter Model is Redefining AI Power

In a direct challenge to the “bigger is better” paradigm, the Technology Innovation Institute (TII) has introduced Falcon #R 7B, a compact model that is forcing a reevaluation of how AI performance is measured. With just seven billion parameters, this model enters a weight class typically associated with conversational proficiency rather than elite logical deduction. Yet, its performance metrics tell a different story, one where intelligent design allows it to operate far outside its expected capabilities, setting a new benchmark for what small-scale AI can achieve.

The most striking aspect of Falcon #R 7B is its ability to outperform competitors several times its size on reasoning tasks. In reported evaluations, it beat models with far greater parameter counts, including a 32-billion-parameter Qwen model and Nvidia’s 47-billion-parameter Nemotron. This result disrupts the once-predictable correlation between size and reasoning ability that has defined the industry for years, showing that a model’s capability is not solely a function of its scale.

This development moves the conversation beyond a simple numbers game. It raises a critical question about the core principles of AI development: is superior reasoning an inevitable byproduct of brute-force computation, or can it be achieved through more sophisticated and efficient means? Falcon #R 7B’s results point to the latter, suggesting that a novel architecture combined with carefully targeted training can unlock performance previously thought to be the exclusive domain of AI behemoths.

Beyond Brute Force: The High Cost of the AI Arms Race

For years, the generative AI landscape has been dominated by the brute-force scaling law, a principle holding that the path to greater intelligence is paved with more data and an ever-increasing number of parameters. This belief fueled an arms race among leading technology firms, each striving to build the largest model possible. The underlying assumption was that with sufficient scale, complex reasoning and nuanced understanding would emerge organically from the sheer volume of connections within the neural network.

The practical consequences of this philosophy, however, are becoming increasingly apparent and prohibitive. Massive models demand extraordinary computational power for both training and inference, leading to immense energy consumption and astronomical operational costs. Furthermore, their significant memory requirements make them inaccessible for deployment on local hardware or in resource-constrained environments, creating a high barrier to entry and concentrating power within a few well-capitalized organizations.

In response to these limitations, a strategic pivot is becoming evident within the open-weight AI ecosystem. The focus is gradually shifting away from the raw accumulation of parameters and toward a more nuanced appreciation for efficiency. Developers are now prioritizing architectural innovation and inference performance, seeking to deliver powerful reasoning capabilities in smaller, more agile packages. This trend represents a move toward sustainability and accessibility, aiming to create models that are not only intelligent but also practical and economical to deploy.

Inside the Architecture: The Mamba-Transformer Hybrid Advantage

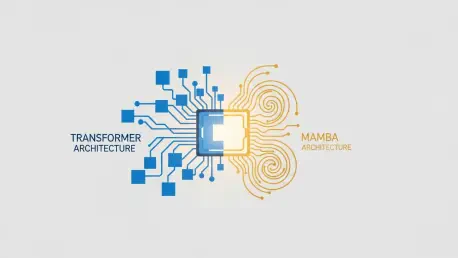

At the heart of Falcon #R 7B’s efficiency is a hybrid architecture that combines two distinct technologies: the established Transformer and the emerging Mamba, a state-space model (SSM). This hybrid backbone is a significant departure from the pure Transformer design that has dominated the field. By integrating the two approaches, the model retains the Transformer’s proven capabilities while mitigating its most significant performance bottlenecks.

This hybrid design directly addresses the “cost of thinking,” a major challenge for models performing long, multi-step reasoning. A Transformer’s attention mechanism compares every token with every earlier token, so its total compute grows quadratically with sequence length (O(n²)): doubling the length of a reasoning chain roughly quadruples the attention cost, and the key-value cache that attention keeps in memory grows with every generated token. Mamba instead updates a fixed-size state once per token, so its cost grows linearly (O(n)) and its per-sequence memory stays constant, which makes very long chains of thought far cheaper to process. Combining the two lets Falcon #R 7B generate extensive logical deductions without paying the full computational penalty of a pure Transformer.
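The difference between the two growth rates can be seen in a toy Python sketch. It is not Falcon #R 7B’s implementation: the attention is a naive single-head version over one-dimensional features, and the recurrence is a plain linear state-space update without Mamba’s input-dependent (selective) parameters. The function names and constants (`causal_attention`, `linear_ssm_scan`, `a`, `b`, `c`) are hypothetical, chosen only to count how much work each approach does per sequence.

```python
import math


def causal_attention(xs):
    """Naive causal self-attention over 1-D features (query = key = value = x).

    Position t scores itself against all t+1 visible positions, so a sequence
    of length n needs n*(n+1)/2 query-key scores: O(n^2) work.
    """
    outputs, scores_computed = [], 0
    for t, q in enumerate(xs):
        visible = xs[: t + 1]
        scores = [q * k for k in visible]  # one score per visible key
        scores_computed += len(scores)
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]  # numerically stable softmax
        total = sum(weights)
        outputs.append(sum(w * v for w, v in zip(weights, visible)) / total)
    return outputs, scores_computed


def linear_ssm_scan(xs, a=0.9, b=0.1, c=1.0):
    """Toy linear state-space recurrence: h_t = a*h_{t-1} + b*x_t, y_t = c*h_t.

    One fixed-size state update per token, so a length-n sequence costs O(n).
    Mamba makes a, b, c depend on the input; that selectivity is omitted here.
    """
    h, outputs, steps = 0.0, [], 0
    for x in xs:
        h = a * h + b * x
        outputs.append(c * h)
        steps += 1
    return outputs, steps


for n in (256, 512, 1024):
    xs = [math.sin(i) for i in range(n)]
    _, attn_ops = causal_attention(xs)
    _, ssm_ops = linear_ssm_scan(xs)
    print(f"n={n}: attention scores={attn_ops:,}  ssm updates={ssm_ops:,}")
```

Each doubling of `n` roughly quadruples the number of attention scores, while the recurrence performs exactly one state update per token.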

The real-world benefits of this architectural choice are measurable. According to TII’s technical reports, Falcon #R 7B can process approximately 1,500 tokens per second on a single GPU, nearly double the throughput of a comparable pure-Transformer model. The model also posts strong results on specialized benchmarks. In mathematical reasoning, it reportedly scores 83.1% on AIME 2025, ahead of larger models such as the 15B Apriel-v1.6-Thinker. In coding, it reports 68.6% on LiveCodeBench v6 (LCB v6), and its general reasoning scores remain competitive with models twice its size.

The Hybrid Wave: How Industry Leaders are Embracing Architectural Innovation

The development of Falcon #R 7B is not an isolated breakthrough but rather a prominent example of a much broader industry-wide trend. Major technology companies are increasingly moving away from monolithic Transformer architectures and embracing hybrid designs to achieve a better balance of performance and efficiency. This collective shift validates the core premise that architectural innovation is becoming as important as raw scale in the quest for advanced AI.

Releases from other major developers suggest that the hybrid approach is quickly becoming a new standard. Nvidia’s Nemotron 3 family, for instance, combines a mixture-of-experts (MoE) design with a hybrid Mamba-Transformer backbone to power more efficient agentic AI systems. Similarly, IBM’s Granite 4.0 family uses a comparable hybrid architecture that reduces memory requirements by over 70%, making powerful models easier to deploy. AI21 Labs has also adopted this strategy with its Jamba model family, whose name stands for its “Joint Attention and Mamba” architecture, aimed at processing long contexts efficiently.

This widespread adoption by key players lends significant credibility to the idea that smaller, more efficient models represent the future of specialized AI. As more organizations invest in hybrid architectures, the performance gap between compact open-weight models and large-scale proprietary systems continues to narrow. Falcon #R 7B and its contemporaries show that careful engineering and targeted design can produce powerful, accessible, and sustainable alternatives to the resource-intensive models of the past.
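Much of the memory saving in hybrid designs comes from the key-value (KV) cache: only attention layers must store keys and values for every past token, while Mamba-style layers carry a fixed-size state. The arithmetic below is a sketch under assumed, hypothetical sizes (32 layers, 4 of them attention, 8 KV heads of dimension 128, 16-bit values, a 32,768-token context). It does not describe Granite 4.0, Nemotron 3, Jamba, or Falcon #R 7B, and it ignores weights, activations, and Mamba’s small convolution buffer.

```python
GIB = 1024**3


def kv_cache_bytes(attn_layers, kv_heads, head_dim, seq_len, batch=1, bytes_per_elem=2):
    """Keys and values (factor 2) cached for every token in every attention layer."""
    return 2 * attn_layers * kv_heads * head_dim * seq_len * batch * bytes_per_elem


def ssm_state_bytes(ssm_layers, d_inner, d_state, batch=1, bytes_per_elem=2):
    """Fixed-size recurrent state per SSM layer; seq_len does not appear at all."""
    return ssm_layers * d_inner * d_state * batch * bytes_per_elem


# Hypothetical 32-layer model; every size here is illustrative, not a real config.
SEQ_LEN = 32_768
pure = kv_cache_bytes(attn_layers=32, kv_heads=8, head_dim=128, seq_len=SEQ_LEN)
hybrid = kv_cache_bytes(
    attn_layers=4, kv_heads=8, head_dim=128, seq_len=SEQ_LEN
) + ssm_state_bytes(ssm_layers=28, d_inner=8192, d_state=16)

print(f"pure Transformer cache : {pure / GIB:.2f} GiB")
print(f"hybrid (4 attn, 28 SSM): {hybrid / GIB:.2f} GiB")
print(f"reduction              : {1 - hybrid / pure:.1%}")
```

Under these made-up numbers the hybrid needs roughly an eighth of the cache memory. Real savings, including the figure cited for Granite 4.0, depend on each model’s layer mix, context length, and batch size.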

A Blueprint for Efficiency: Advanced Training and Inference Strategies

Beyond its architecture, Falcon #R 7B’s performance stems from a two-stage training pipeline designed to maximize what its creators call “reasoning density.” The first stage, a cold-start Supervised Fine-Tuning (SFT) process, used a carefully curated dataset heavily weighted toward complex mathematics and code. A difficulty-aware weighting scheme pushed the model to focus on challenging problems, while a single-teacher approach was meant to give it a coherent, consistent internal logic, avoiding the performance degradation that can result from mixing conflicting reasoning styles.
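The article does not spell out TII’s difficulty-aware weighting formula, so the sketch below assumes one simple possibility: estimate each problem’s difficulty from how often a reference model solves it (`pass_rate`) and scale that example’s token-level loss by `1 + alpha * (1 - pass_rate)`. The functions, the formula, and `alpha` are hypothetical illustrations of the idea, not TII’s scheme.

```python
import math


def difficulty_weight(pass_rate, alpha=2.0):
    """Hypothetical weight: problems a reference model rarely solves count more.

    pass_rate is the fraction of sampled attempts that were correct, in [0, 1].
    """
    if not 0.0 <= pass_rate <= 1.0:
        raise ValueError("pass_rate must be in [0, 1]")
    return 1.0 + alpha * (1.0 - pass_rate)


def weighted_sft_loss(batch, alpha=2.0):
    """Difficulty-weighted mean negative log-likelihood over target tokens.

    batch: list of (token_logprobs, pass_rate) pairs. token_logprobs are the
    model's log-probabilities for each token of the (single) teacher's solution.
    """
    total, norm = 0.0, 0.0
    for token_logprobs, pass_rate in batch:
        w = difficulty_weight(pass_rate, alpha)
        total += w * -sum(token_logprobs)  # weighted NLL of this example
        norm += w * len(token_logprobs)  # weighted token count for averaging
    return total / norm


# One easy example (pass_rate 0.9) and one hard example (pass_rate 0.1).
batch = [([math.log(0.9)] * 4, 0.9), ([math.log(0.5)] * 4, 0.1)]
unweighted = (-math.log(0.9) - math.log(0.5)) / 2
print(f"unweighted loss: {unweighted:.4f}")
print(f"weighted loss:   {weighted_sft_loss(batch):.4f}")
```

With equal pass rates this reduces to an ordinary mean token loss; unequal rates pull the average toward the examples the reference model finds hard.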

Following the SFT phase, the model was further refined with Group Relative Policy Optimization (GRPO), a reinforcement learning method that scores each sampled answer relative to the other answers generated for the same prompt. Departing from the standard GRPO recipe, the TII team removed the KL-divergence penalty entirely, a term that normally keeps the policy from drifting too far from a reference model (typically the SFT checkpoint). Dropping it let Falcon #R 7B explore more aggressively and discover reasoning pathways beyond those learned during supervised training.
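A minimal sketch of the GRPO objective shows where the KL penalty sits and what removing it means. It assumes a sequence-level simplification (one log-probability per sampled answer rather than per token) and uses the clipped surrogate and KL estimator from the original GRPO formulation; setting `kl_coef=0.0` drops the penalty, which is the change described above. Names and default values are illustrative, not TII’s training code.

```python
import math
from statistics import mean, pstdev


def group_advantages(rewards, eps=1e-6):
    """GRPO advantages: standardize each reward against its own group of samples."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def grpo_objective(new_logprobs, old_logprobs, rewards, clip_eps=0.2,
                   kl_coef=0.0, ref_logprobs=None):
    """Clipped surrogate objective for one group of sampled answers (to be maximized).

    Sequence-level simplification: one log-probability per answer, not per token.
    kl_coef=0.0 removes the penalty toward the reference policy entirely.
    """
    if kl_coef > 0.0 and ref_logprobs is None:
        raise ValueError("ref_logprobs are required when kl_coef > 0")
    advantages = group_advantages(rewards)
    terms = []
    for i, (new, old, adv) in enumerate(zip(new_logprobs, old_logprobs, advantages)):
        ratio = math.exp(new - old)  # pi_new / pi_old for this answer
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        term = min(ratio * adv, clipped * adv)  # PPO-style pessimistic bound
        if kl_coef > 0.0:
            log_r = ref_logprobs[i] - new  # log(pi_ref / pi_new)
            term -= kl_coef * (math.exp(log_r) - log_r - 1.0)  # KL estimate, >= 0
        terms.append(term)
    return mean(terms)


rewards = [1.0, 0.0, 0.0, 1.0]  # e.g. 1 = correct final answer, 0 = wrong
print(group_advantages(rewards))  # correct answers get a positive advantage
```

Because advantages are standardized within each group, a group in which every answer receives the same reward contributes no learning signal. With `kl_coef=0.0`, nothing else pulls the policy back toward the reference model.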

For practical deployment, these training techniques are complemented by an inference strategy known as Test-Time Scaling with DeepConf. It generates multiple reasoning paths in parallel and uses an adaptive pruning mechanism to stop low-confidence chains in real time, so compute is spent mainly on promising paths. This pushes out the Pareto frontier of accuracy versus computational cost for deployment. In reported benchmark tests, the method reached 96.7% accuracy on key reasoning tasks while cutting total token usage by 38%.
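The pruning-plus-voting loop can be sketched as follows, loosely modelled on the published DeepConf idea of stopping a trace when its recent token confidence drops. The sketch assumes per-token confidence scores are already available, uses a fixed `threshold` and sliding `window` (DeepConf itself calibrates its threshold from warm-up traces), and weights each surviving trace’s vote by its mean confidence. All names and numbers are hypothetical.

```python
from collections import Counter, deque


def run_trace(token_confidences, threshold, window=4):
    """Stream one reasoning trace, stopping early if its recent confidence dips.

    Returns (completed, tokens_used, mean_confidence or None if pruned).
    """
    if not token_confidences:
        return False, 0, None
    recent = deque(maxlen=window)  # sliding window of the latest token confidences
    for i, conf in enumerate(token_confidences, start=1):
        recent.append(conf)
        if len(recent) == window and sum(recent) / window < threshold:
            return False, i, None  # pruned: no more tokens generated for this trace
    return True, len(token_confidences), sum(token_confidences) / len(token_confidences)


def confidence_filtered_vote(traces, threshold, window=4):
    """traces: list of (token_confidences, final_answer). Returns (answer, tokens_used)."""
    votes, tokens_used = Counter(), 0
    for confs, answer in traces:
        completed, used, conf = run_trace(confs, threshold, window)
        tokens_used += used
        if completed:
            votes[answer] += conf  # confidence-weighted majority vote
    if not votes:
        return None, tokens_used
    return votes.most_common(1)[0][0], tokens_used


traces = [
    ([0.9] * 8, "42"),
    ([0.9, 0.8, 0.3, 0.2, 0.2, 0.1, 0.9, 0.9], "17"),  # confidence collapses mid-trace
    ([0.7] * 8, "42"),
    ([0.6] * 8, "17"),
]
answer, used = confidence_filtered_vote(traces, threshold=0.5)
print(answer, used)
```

In this example the second trace is abandoned after five tokens, so the vote consumes 29 generated tokens instead of 32 and the collapsing trace never casts a vote.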

The release and subsequent analysis of models like Falcon #R 7B mark a turning point in the trajectory of artificial intelligence development. The industry has long operated under the assumption that greater computational power is the primary, if not sole, driver of progress. These new models, however, demonstrate that the path forward is not paved only with trillions of parameters but is also defined by architectural ingenuity, data curation, and focused training methodologies. This shift signals a maturation of the field, moving from an era of brute force to one of refined engineering.

This evolution opens up possibilities that were previously constrained by the high cost of elite AI. It makes advanced reasoning accessible to a wider range of developers, researchers, and organizations that lack the resources to operate massive-scale models. The focus on efficiency also points toward a more sustainable development path, one less reliant on ever-expanding data centers. Ultimately, the success of these compact, powerful models is reshaping the competitive landscape, showing that innovation, not just resource accumulation, has become the true measure of advancement in artificial intelligence.