Welcome to an insightful conversation with Laurent Giraid, a renowned technologist and AI expert whose work has been pivotal in shaping the landscape of machine learning, natural language processing, and AI ethics. Today, we dive into his perspectives on the groundbreaking developments at Nous Research, particularly its latest release, Hermes 4, a family of AI models pushing the boundaries of open-source technology. We’ll explore the innovative features of Hermes 4, the philosophy of prioritizing user control over rigid safety guardrails, and the cutting-edge training systems behind its success. Join us as Laurent shares his vision for democratizing AI and challenging the dominance of big tech in this rapidly evolving field.

How did the vision for Nous Research come about, and what fuels your passion for advancing open-source AI?

I’ve always believed that AI shouldn’t be locked behind corporate walls. The idea for Nous Research stemmed from a desire to empower users and developers by giving them access to powerful tools without the heavy-handed restrictions often imposed by big tech. Our passion comes from a mix of technical curiosity and a sense of responsibility—AI has the potential to solve incredible problems, but only if it’s in the hands of many, not just a few. We’re driven by the mission to create models that prioritize user agency and creativity while still pushing the envelope on performance.

What sets Hermes 4 apart from other AI models in the market, especially when it comes to user experience?

Hermes 4 is designed with the user at the forefront. Unlike many proprietary systems that come with strict content filters, Hermes 4 offers a level of freedom that lets users explore its capabilities without constant refusals or disclaimers. We’ve also focused on making interactions engaging, whether through creative outputs or robust reasoning skills. On math and coding benchmarks, it performs competitively with leading models, but what really sets it apart is how it balances performance with accessibility, so users aren’t boxed in by overly cautious design.

Can you walk us through the concept of ‘hybrid reasoning’ in Hermes 4 and why it’s a game-changer?

Absolutely. Hybrid reasoning in Hermes 4 allows users to switch between quick, straightforward responses and a more deliberate, step-by-step thought process. When the deeper mode is engaged, the model shows its internal reasoning within special tags, making the process transparent. This isn’t just about getting the right answer—it’s about helping users understand how the AI arrived there, which builds trust and aids learning. It’s particularly powerful in complex domains like mathematics, where seeing the logic unfold can be as valuable as the solution itself.
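Because the deeper mode places its reasoning inside tags, an application can separate that reasoning from the final answer before displaying either one. The minimal sketch below assumes the reasoning is wrapped in `<think>` and `</think>` tags; that tag name and the `split_reasoning` helper are assumptions for illustration, not a documented Hermes 4 API, so check the model card for the actual format.

```python
import re
from typing import Optional, Tuple

# Assumed tag name for the reasoning segment; verify against the model card.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(response: str) -> Tuple[Optional[str], str]:
    """Split a model response into (reasoning, answer).

    Returns (None, answer) when no reasoning block is present,
    i.e. when the model replied in its quick mode.
    """
    match = THINK_PATTERN.search(response)
    if match is None:
        return None, response.strip()
    reasoning = match.group(1).strip()
    # Everything outside the tagged block is treated as the final answer.
    answer = (response[: match.start()] + response[match.end():]).strip()
    return reasoning, answer


if __name__ == "__main__":
    deep = "<think>12 * 12 = 144, and 144 + 1 = 145.</think>The answer is 145."
    quick = "The answer is 145."
    print(split_reasoning(deep))   # ('12 * 12 = 144, and 144 + 1 = 145.', 'The answer is 145.')
    print(split_reasoning(quick))  # (None, 'The answer is 145.')
```

Keeping the two parts separate lets an interface show the answer by default and reveal the reasoning on request, which matches the transparency goal described above.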

Hermes 4 has made waves with its high score on the RefusalBench test. Why do you think minimizing refusals is so important for AI systems?

RefusalBench measures how willing a model is to answer user requests instead of declining them, so Hermes 4’s high score means it refuses far less often. That reflects our philosophy: AI should be a tool that serves the user, not a gatekeeper. Constant refusals can frustrate users and stifle creativity, especially for researchers or developers experimenting with novel ideas. By minimizing refusals, we’re ensuring the model is practical and versatile, even for edge-case queries. It’s about striking a balance: being helpful without overstepping into censorship.
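To make the direction of the score concrete, here is a toy calculation in which the score is the percentage of prompts a model answers rather than refuses, so higher means fewer refusals. This is a sketch under stated assumptions: the keyword-based `looks_like_refusal` check and its marker phrases are hypothetical and far cruder than whatever judging procedure RefusalBench actually uses.

```python
from typing import Iterable

# Hypothetical refusal markers; a real benchmark would use a more robust judge.
REFUSAL_MARKERS = (
    "i can't help with",
    "i cannot help with",
    "i'm sorry, but",
    "i won't",
)


def looks_like_refusal(response: str) -> bool:
    """Crude heuristic: flag a response that opens with a refusal phrase."""
    text = response.strip().lower()
    return any(text.startswith(marker) for marker in REFUSAL_MARKERS)


def compliance_score(responses: Iterable[str]) -> float:
    """Percentage of responses that are NOT refusals (higher = fewer refusals)."""
    responses = list(responses)
    if not responses:
        raise ValueError("need at least one response")
    answered = sum(1 for r in responses if not looks_like_refusal(r))
    return 100.0 * answered / len(responses)


if __name__ == "__main__":
    sample = [
        "Here is a short poem about autumn...",
        "I'm sorry, but I can't assist with that.",
        "Sure, the recursion works like this...",
        "Step one is to preheat the oven...",
    ]
    print(compliance_score(sample))  # 75.0
```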

Let’s dive into the training behind Hermes 4. How does a system like DataForge contribute to creating such a capable model?

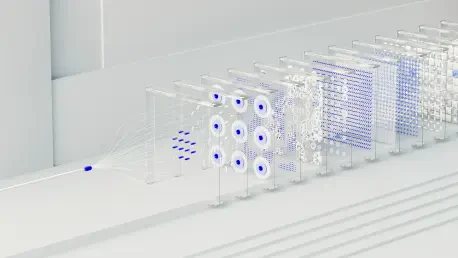

DataForge is one of our secret weapons. It uses a graph-based approach to generate synthetic training data, taking simple inputs and transforming them into complex, instruction-following examples. Think of it as turning a basic article into a creative format like a rap song, then building questions and answers around it. This method allows us to create diverse, high-quality datasets that teach the model to think flexibly and adapt to varied tasks. It’s a big reason Hermes 4 can handle everything from math to creative writing so effectively.
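The graph-based idea can be illustrated with a toy pipeline in which each node is a transformation and a random walk through a directed acyclic graph turns one seed document into an instruction-and-response pair. Everything here (the node names, the `GRAPH` layout, and the string-template "transformations") is hypothetical; in a real system each step would call a language model, and this is not DataForge's actual code.

```python
import random
from typing import Callable, Dict, List

# Each node rewrites the running sample; real steps would call an LLM.
Transform = Callable[[dict], dict]


def to_rap(sample: dict) -> dict:
    return {**sample, "text": f"[rap version of] {sample['text']}"}


def to_summary(sample: dict) -> dict:
    return {**sample, "text": f"[summary of] {sample['text']}"}


def make_question(sample: dict) -> dict:
    return {**sample, "instruction": f"Explain the main idea of: {sample['text']}"}


def make_answer(sample: dict) -> dict:
    return {**sample, "response": f"[answer grounded in] {sample['text']}"}


NODES: Dict[str, Transform] = {
    "rap": to_rap,
    "summary": to_summary,
    "question": make_question,
    "answer": make_answer,
}

# Directed acyclic graph: which transformations may follow which.
GRAPH: Dict[str, List[str]] = {
    "start": ["rap", "summary"],
    "rap": ["question"],
    "summary": ["question"],
    "question": ["answer"],
    "answer": [],
}


def random_walk(seed_text: str, rng: random.Random) -> dict:
    """Walk from 'start' to a sink node, applying each visited transform."""
    sample = {"text": seed_text, "path": []}
    node = "start"
    while GRAPH[node]:
        node = rng.choice(GRAPH[node])
        sample = NODES[node](sample)
        sample["path"] = sample["path"] + [node]
    return sample


if __name__ == "__main__":
    rng = random.Random(0)
    example = random_walk("An article about tidal energy.", rng)
    print(example["path"])
    print(example["instruction"])
    print(example["response"])
```

Because different walks pass through different branches, the same seed can yield several distinct training examples, which is one plausible way such a graph adds diversity to a dataset.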

What makes Atropos unique as a reinforcement learning framework, and how does it enhance Hermes 4’s abilities?

Atropos is our custom-built, open-source reinforcement learning environment with hundreds of specialized ‘gyms’ for skills like coding, math, and tool use. What’s unique is its rejection sampling approach: the model generates many candidate responses, and only those that a gym verifies as correct and high quality are kept for training. This ensures that Hermes 4 learns from the best responses, refining its precision and reliability. It’s like training an athlete with only the most effective drills, which translates into top-tier performance across diverse challenges.
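Rejection sampling itself is simple to sketch: draw several candidate responses per problem, keep only those a verifier accepts, and use the survivors as training data. Below, the "model" is a hypothetical noisy arithmetic solver and the verifier is an exact-match check; both stand in for a real policy model and an Atropos gym's scoring, neither of which is shown here.

```python
import random
from typing import Callable, List, Tuple

Problem = Tuple[int, int]  # (a, b): the task is to compute a + b


def noisy_solver(problem: Problem, rng: random.Random) -> int:
    """Hypothetical stand-in for a model: right about 60% of the time."""
    a, b = problem
    return a + b if rng.random() < 0.6 else a + b + rng.choice([-2, -1, 1, 2])


def verifier(problem: Problem, answer: int) -> bool:
    """Exact-match check, playing the role of an environment's reward."""
    a, b = problem
    return answer == a + b


def rejection_sample(
    problems: List[Problem],
    sample_fn: Callable[[Problem, random.Random], int],
    verify_fn: Callable[[Problem, int], bool],
    k: int,
    rng: random.Random,
) -> List[Tuple[Problem, int]]:
    """Draw k candidates per problem and keep only the verified ones."""
    kept: List[Tuple[Problem, int]] = []
    for problem in problems:
        for _ in range(k):
            candidate = sample_fn(problem, rng)
            if verify_fn(problem, candidate):
                kept.append((problem, candidate))
    return kept


if __name__ == "__main__":
    rng = random.Random(42)
    problems = [(rng.randint(0, 99), rng.randint(0, 99)) for _ in range(5)]
    dataset = rejection_sample(problems, noisy_solver, verifier, k=8, rng=rng)
    print(f"kept {len(dataset)} of {len(problems) * 8} candidates")
    # Every surviving example is correct by construction.
    assert all(verifier(p, ans) for p, ans in dataset)
```

In practice one might also cap how many verified samples are kept per problem, so that easy problems do not dominate the resulting dataset.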

Nous Research has been vocal about prioritizing user control over strict safety guardrails. What’s behind this philosophy?

We believe that AI should be a steerable tool, not a nanny. Strict guardrails often feel like they’re more about corporate protection than user benefit, and they can hinder innovation by shutting down legitimate exploration. Our stance is that users—especially developers and researchers—should have the freedom to fine-tune or prompt the model as they see fit. Transparency and control are far more valuable than arbitrary restrictions, even if it means navigating complex ethical debates. It’s about trusting the community to use these tools responsibly.

How do you see the role of smaller startups like Nous Research in competing with the massive budgets of tech giants in AI development?

Startups like ours prove that innovation doesn’t always need billion-dollar budgets. With a tight-knit team of talented folks and strategic use of resources—like our 192 GPUs for training—we can punch way above our weight. It’s about focusing on specialized techniques and community-driven goals rather than sheer scale. The open-source movement levels the playing field by sharing knowledge and tools, and I think we’re showing that creativity and agility can challenge even the biggest players in the AI space.

Looking ahead, what’s your forecast for the future of open-source AI and its impact on the broader industry?

I’m incredibly optimistic about open-source AI. It’s going to be the catalyst for democratizing access to cutting-edge technology, breaking down barriers that big tech has built. Over the next few years, I expect to see more powerful, user-focused models emerging from the community, driving innovation in ways that proprietary systems can’t. The impact will be profound—think more tailored solutions for education, healthcare, and beyond, all without the gatekeeping. The future of AI isn’t just in the hands of giants; it’s in the hands of everyone willing to contribute.