Large language models have become capable reasoners, but they face a fundamental limitation when confronted with the vast document collections of modern enterprises: they cannot read a whole book at once. An LLM’s “context window”, the finite amount of text it can process in a single call, remains a critical bottleneck. It keeps these tools from complex, real-world tasks that involve analyzing massive documents, extensive codebases, or comprehensive legal files. Even when the information technically fits, models often suffer from “context rot”, a degradation in which they forget or misinterpret critical details, such as those near the beginning of a long prompt. Researchers at MIT have developed a framework called the Recursive Language Model (RLM) that sidesteps these issues. It does not build a bigger model; instead, it changes how a model interacts with its data.

The Recursive Language Model Framework

Shifting the Paradigm from Ingestion to Interaction

The central idea of the RLM framework is a shift in perspective. Rather than stuffing millions of tokens into a model’s inherently limited context, the RLM treats the entire text as an external data source. This is much like the way a classical computer keeps datasets that are too large for main memory on a hard drive. The LLM stops being a passive reader and becomes an active agent, effectively a “programmer”. It does not initially “see” the full text; instead, its main job is to write and execute code that navigates, searches, and inspects that external source. This avoids the architectural and computational challenges of ever-larger context windows. The researchers consider that path inefficient because of an “entropy argument”: extending the window requires exponentially more training data for diminishing returns.
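
A minimal Python sketch of the “text as external data” idea follows. It assumes a design in which the full text lives inside an environment object and the model only ever sees the outputs of small inspection functions. The class and method names (`ContextEnvironment`, `length`, `peek`, `grep`) are hypothetical illustrations, not the framework’s actual interface.

```python
import re
from dataclasses import dataclass
from itertools import islice


@dataclass
class ContextEnvironment:
    """Keeps a long text outside the model's context window.

    Hypothetical interface: the model never receives `text` wholesale;
    it only sees the small results returned by the methods below.
    """

    text: str

    def length(self) -> int:
        # Total size in characters, so the model can plan its slices.
        return len(self.text)

    def peek(self, start: int, end: int, max_chars: int = 2_000) -> str:
        # Return a bounded slice, like reading one block from disk.
        end = min(end, start + max_chars)
        return self.text[start:end]

    def grep(self, pattern: str, max_hits: int = 10) -> list[int]:
        # Return the offsets of regex matches rather than the text itself,
        # stopping after max_hits matches.
        matches = re.finditer(pattern, self.text)
        return [m.start() for m in islice(matches, max_hits)]


if __name__ == "__main__":
    env = ContextEnvironment("Intro. Clause 7.2: termination. Later, Clause 7.2 applies.")
    for offset in env.grep(r"Clause 7\.2"):
        print(offset, env.peek(offset, offset + 30))
```

In this design the code the model writes calls `grep` and `peek` much as a program issues disk reads. Only the short strings those calls return would enter the model’s context.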

This approach lets the model query and dissect enormous volumes of text precisely. For instance, instead of reading an entire legal document, the RLM can generate a short piece of code that finds every mention of a specific contract clause. It can also isolate a single chapter of a technical manual for focused analysis. Once the code has located a relevant, manageably sized snippet, only that snippet is pulled into the LLM’s active context window for higher-level reasoning and synthesis. This core process, decomposing a very large problem into a series of smaller, more tractable subtasks, is the source of the framework’s power. The decomposition can also be applied recursively, with the same strategy used on each sub-problem. This lets the model progressively narrow in on the relevant information until it reaches a complete answer, without ever loading the full dataset into its context.
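
The recursive decomposition can be sketched as a divide-and-conquer function. This is an illustration under stated assumptions, not the RLM’s actual control flow. Here the split points are fixed (paragraph breaks or midpoints) rather than chosen by model-written code, and `ask` is a hypothetical stand-in for a model call.

```python
from typing import Callable

# Hypothetical stand-in for a model call: (question, snippet) -> answer.
AskFn = Callable[[str, str], str]


def recursive_answer(text: str, question: str, ask: AskFn,
                     max_chars: int = 2_000) -> str:
    """Answer `question` over `text` while never passing more than
    `max_chars` characters of source text to a single `ask` call."""
    if max_chars < 1:
        raise ValueError("max_chars must be positive")
    if len(text) <= max_chars:
        # Base case: the snippet fits, so reason over it directly.
        return ask(question, text)
    # Recursive case: split near the middle, preferring a paragraph break
    # so that sentences are not cut in half.
    mid = text.rfind("\n\n", 0, len(text) // 2 + 1)
    if mid <= 0:
        mid = len(text) // 2
    left = recursive_answer(text[:mid], question, ask, max_chars)
    right = recursive_answer(text[mid:], question, ask, max_chars)
    # Synthesis step: the model sees only the two partial answers.
    return ask(question, left + "\n" + right)
```

Each leaf call sees at most `max_chars` characters of the original text. The synthesis calls see only partial answers, which mirrors how only small pieces ever reach the model’s context window.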

A Dual-Agent Architecture for Efficiency

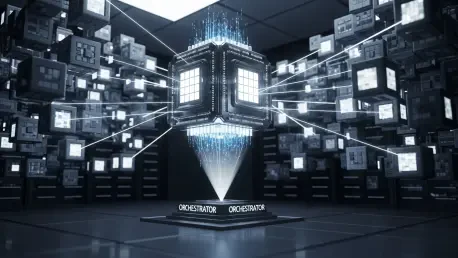

To run this workflow efficiently, the RLM framework typically uses a two-agent system that separates high-level strategy from granular execution. A highly capable “Orchestrator” model, often a frontier LLM such as GPT-5, is the strategic core of the operation. It analyzes the overall task, devises a plan, and writes the code that breaks the problem apart and manages the flow of data between stages. Acting like a project manager, it splits a monolithic challenge into a sequence of logical steps. This division of labor reserves the most powerful reasoning for the hardest parts of the task, such as planning and synthesis, instead of spending it on routine work.

Once the plan is in place, the Orchestrator delegates granular, repetitive work to a “Worker” model. This is frequently a faster and cheaper LLM suited to executing specific instructions. For example, the Orchestrator might identify ten relevant paragraphs scattered throughout a million-token document, and the Worker is then called ten separate times to analyze each one individually. The architecture balances cost against capability by matching each model to the tasks it handles best. From the end user’s perspective, this internal sequence of code generation and delegated analysis is invisible. The system behaves like a standard API call that accepts a prompt and returns a final answer. That makes it a “drop-in replacement” for existing applications that run up against context limits.
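
The Orchestrator/Worker split can be sketched as follows. Both models are hypothetical `prompt -> completion` callables. For illustration, the Orchestrator’s planning is reduced to proposing a single search keyword, whereas in the framework described above it writes arbitrary code. The `complete` method keeps the outward shape of an ordinary completion call, which is what the drop-in claim relies on.

```python
from typing import Callable

# Hypothetical model interface: prompt in, completion out.
ModelFn = Callable[[str], str]


class RecursiveLM:
    """Toy Orchestrator/Worker pipeline with a completion-style interface."""

    def __init__(self, orchestrator: ModelFn, worker: ModelFn,
                 max_workers: int = 10) -> None:
        self.orchestrator = orchestrator
        self.worker = worker
        self.max_workers = max_workers

    def complete(self, prompt: str, document: str) -> str:
        # 1. Planning: the expensive model proposes what to search for
        #    (a single keyword here, standing in for model-written code).
        keyword = self.orchestrator(
            f"Suggest one search keyword for: {prompt}").strip()
        # 2. Selection by plain code: no model reads the whole document.
        paragraphs = [p for p in document.split("\n\n") if keyword in p]
        # 3. Delegation: one cheap Worker call per relevant paragraph.
        notes = [self.worker(f"{prompt}\n\nParagraph:\n{p}")
                 for p in paragraphs[: self.max_workers]]
        # 4. Synthesis: only the short notes reach the Orchestrator.
        return self.orchestrator(f"{prompt}\n\nNotes:\n" + "\n".join(notes))
```

With ten matching paragraphs, this sketch makes ten Worker calls and two Orchestrator calls. The expensive model never reads the document itself.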

Putting the Framework to the Test

Dominating Long-Context Benchmarks

On challenging long-context benchmarks, the RLM framework showed a large jump in capability. BrowseComp-Plus is a benchmark that requires reasoning over 6 to 11 million tokens. On it, standard base models failed outright, scoring 0%, while the RLM with GPT-5 as its orchestrator reached 91.33% accuracy. The result shows the framework working at a scale base models cannot handle, and it also far exceeded the scores of other advanced agentic systems. That places the RLM among the leading approaches to massive-context reasoning.

The results held up on other benchmarks. OOLONG-Pairs is designed so that the required work grows quadratically with the length of the input. On this task, base models scored near zero, while the RLM handled the escalating complexity and reached an F1 score of 58%. CodeQA tests code understanding within large repositories, and there the RLM more than doubled the accuracy of the base GPT-5 model, raising it from 24% to 62%. Across these evaluations, base-model accuracy degraded rapidly on contexts longer than 16,000 tokens, while the RLM’s performance held steady. This is strong evidence that the approach resists context rot.
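
The sketch below illustrates why a pairwise task strains a fixed context window and what an F1 score measures in that setting. It assumes, as a simplification, that the task asks for every pair of records satisfying some condition and is scored by set-level F1 against the true pairs. The predicate and record IDs are hypothetical.

```python
from collections.abc import Callable, Hashable, Iterable
from itertools import combinations


def candidate_pairs(n_items: int) -> int:
    # Unordered pairs among n records: n * (n - 1) / 2, i.e. O(n^2).
    return n_items * (n_items - 1) // 2


def pairs_satisfying(ids: Iterable[Hashable],
                     predicate: Callable[[Hashable, Hashable], bool]) -> set:
    # Brute force: examines every candidate pair once (IDs assumed unique).
    return {frozenset((a, b)) for a, b in combinations(ids, 2) if predicate(a, b)}


def pair_f1(predicted: set, gold: set) -> float:
    # Set-level F1: harmonic mean of precision and recall over pairs.
    true_positives = len(predicted & gold)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(gold)
    return 2 * precision * recall / (precision + recall)
```

Doubling the number of records roughly quadruples the pairs that must be checked: `candidate_pairs(1000)` is 499,500, while `candidate_pairs(2000)` is 1,999,000. Because F1 is the harmonic mean of precision and recall, it penalizes both missed pairs and spurious ones.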

Practical Realities of Cost and Control

Despite a multi-step workflow involving code generation and many model calls, the RLM framework can be cost-effective. On certain benchmarks it was up to three times cheaper than alternative long-context methods such as summarization, which often incur high costs by processing large amounts of text repeatedly. The savings come from loading only small, relevant snippets into the context of powerful models, which keeps token usage down. That makes the RLM an economically viable option for enterprises that want large-scale document analysis without prohibitive operating costs. It also puts capabilities that once required massive compute budgets within reach of smaller organizations.
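
The token argument can be made concrete with a toy cost model. Every number it uses is a hypothetical placeholder, not a real price or a figure from the research. The summarization baseline is simplified to a single pass in which the strong model reads the whole document, and the model only shows how each cost scales.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    # Hypothetical input prices in USD per million tokens (placeholders).
    strong_per_m: float
    cheap_per_m: float


def summarization_cost(doc_tokens: int, p: Pricing) -> float:
    # Simplified baseline: the strong model reads every document token once.
    return doc_tokens / 1_000_000 * p.strong_per_m


def rlm_cost(snippet_tokens: int, n_snippets: int,
             orchestrator_tokens: int, p: Pricing) -> float:
    # Locating snippets with code costs no model tokens in this toy model;
    # the cheap Worker reads each snippet, and the strong Orchestrator
    # reads only its own plan, code, and the Workers' notes.
    worker = snippet_tokens * n_snippets / 1_000_000 * p.cheap_per_m
    orchestrator = orchestrator_tokens / 1_000_000 * p.strong_per_m
    return worker + orchestrator
```

Under these assumptions, the summarization cost grows linearly with document size, while the RLM-style cost depends only on how much text is actually read. A model this simple ignores retries, code-generation turns, and long-tail runs, so it should not be read as a prediction of actual savings.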

However, this economic advantage comes with a significant trade-off: cost variability and the need for careful management. The RLM’s cost is described as “long-tailed”. The median cost of a task is low, but an occasional run can become unpredictably and extremely expensive. This can happen when the model gets trapped in an inefficient reasoning loop, performs thousands of redundant checks, or writes overly complex code for a simple task. The behavior varies by model. GPT-5 was found to be more conservative, while some open-source models were observed attempting an excessive number of sub-calls. For now, developers must implement their own guardrails, budget controls, and logical checks to contain these costly outliers, which adds engineering complexity to deployment.
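
One way to add such guardrails is to wrap every sub-call in a budget check. The sketch below is a hypothetical design, not part of the RLM framework. It caps both the number of sub-calls and an estimated token total, and it caches identical prompts so that redundant checks cost nothing. The token estimate relies on a rough four-characters-per-token heuristic, which is an assumption. A production system would use the provider’s tokenizer or reported usage instead.

```python
from typing import Callable

# Hypothetical model interface: prompt in, completion out.
ModelFn = Callable[[str], str]


class BudgetExceeded(RuntimeError):
    """Raised when a run would cross its sub-call or token limit."""


class Budget:
    def __init__(self, max_calls: int, max_tokens: int) -> None:
        self.max_calls = max_calls
        self.max_tokens = max_tokens
        self.calls = 0
        self.tokens = 0

    def charge(self, tokens: int) -> None:
        # Check before spending, so a run stops before crossing a limit.
        if self.calls + 1 > self.max_calls:
            raise BudgetExceeded(f"sub-call limit of {self.max_calls} reached")
        if self.tokens + tokens > self.max_tokens:
            raise BudgetExceeded(f"token limit of {self.max_tokens} reached")
        self.calls += 1
        self.tokens += tokens


def estimate_tokens(text: str) -> int:
    # Rough assumption: about 4 characters per token for English text.
    return max(1, len(text) // 4)


def guarded(model: ModelFn, budget: Budget) -> ModelFn:
    cache: dict[str, str] = {}

    def call(prompt: str) -> str:
        # Repeated identical sub-calls are answered from the cache for free.
        if prompt not in cache:
            budget.charge(estimate_tokens(prompt))
            cache[prompt] = model(prompt)
        return cache[prompt]

    return call
```

Catching `BudgetExceeded` at the top level lets an application return a partial answer or escalate to a human instead of letting an outlier run continue indefinitely.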

A New Era for Enterprise AI

The Recursive Language Model framework is a significant step toward addressing the persistent long-context limitations of LLMs. It treats prompts not as data to be ingested but as an external, inspectable environment. This lets models reason effectively over millions of tokens without costly and time-consuming retraining, giving enterprises a practical path to information-dense tasks that were previously out of reach. In law, finance, and software engineering, professionals must meticulously analyze enormous legal files, dense financial reports, or sprawling codebases. For them, the RLM offers a concrete way to apply advanced models to problems of unprecedented scale, going beyond simple information retrieval toward deeper comprehension of large digital archives.

The framework is not a replacement for existing techniques such as Retrieval-Augmented Generation (RAG); it is positioned as a complementary tool in the AI developer’s toolkit. For enterprise architects and developers, it offers a new building block for a class of applications that turn previously intractable data challenges into solvable problems.