The software development industry is grappling with a significant disconnect between the promise of artificial intelligence and its practical application, as the widely anticipated tenfold productivity revolution has given way to a more sobering reality of modest, incremental gains. This has triggered a crucial reevaluation, shifting the industry’s focus from the raw computational power of large language models to the methodologies and tools developers use to harness them. A compelling new philosophy is gaining traction, one that advocates for moving beyond the chaotic, unstructured nature of chat-based interactions toward a disciplined, systematic framework known as orchestration. This emerging paradigm, championed by platforms like Zencoder’s Zenflow, proposes that the key to unlocking AI’s full potential lies not in better models, but in a fundamentally more structured and verifiable engineering process.

The Problem with “Vibe Coding”

From Unstructured Prompts to Engineering Discipline

The central conflict in the current AI coding landscape stems from the failure of the advertised "10x productivity" boom to materialize, with research pointing to more realistic gains of around 20%. This discrepancy is increasingly attributed not to the limitations of AI models themselves, but to the flawed interaction paradigm that has become the industry standard. The common practice of developers typing natural language prompts into a chat interface, often dismissed as "vibe coding" or "Prompt Roulette," has proven inadequate for the intricate demands of large-scale enterprise software. While this approach can be effective for simple, isolated tasks, it consistently breaks down on complex projects, introducing significant technical debt and failing to scale across engineering teams. The core issue is a lack of engineering discipline governing the application of AI, which turns a powerful tool into an unpredictable variable.

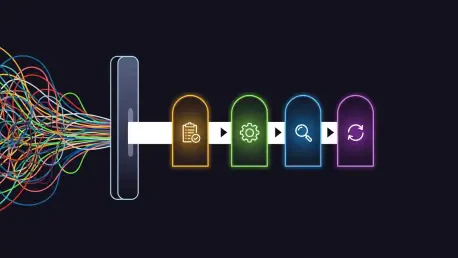

To address this fundamental flaw, orchestration platforms are introducing structured workflows as a foundational pillar of AI-assisted development. This approach systematically replaces the ad-hoc, unpredictable nature of free-form prompting with a repeatable and predictable sequence for every coding task. A standard workflow under this model involves distinct, mandatory stages: planning, implementation, testing, and review. By enforcing this rigorous structure, these systems aim to produce consistent, reliable, and high-quality outcomes, transforming AI from a creative but erratic partner into a dependable component of the engineering process. This shift mirrors the evolution of project management, where unstructured to-do lists were superseded by sophisticated platforms to enable collaboration and predictability at scale. In the same vein, structured AI workflows are designed to ensure that the benefits of AI can be reliably scaled across entire organizations.
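To make the idea of mandatory, ordered stages concrete, here is a minimal sketch of such a pipeline. It is not Zenflow's implementation; the names `Stage`, `run_workflow`, and the handler functions are hypothetical, and each handler simply stands in for an AI agent call. The point it illustrates is that stages always run in a fixed order, none can be skipped, and a failure (here, a failing test run) stops the workflow before review.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Optional


class Stage(Enum):
    """The four mandatory stages, declared in their required order."""
    PLANNING = "planning"
    IMPLEMENTATION = "implementation"
    TESTING = "testing"
    REVIEW = "review"


# Hypothetical handler type: stands in for an AI agent call. It reads or
# updates a shared context dict and returns True if the stage succeeded.
StageHandler = Callable[[dict], bool]


@dataclass
class WorkflowResult:
    completed: List[Stage] = field(default_factory=list)
    failed_at: Optional[Stage] = None


def run_workflow(handlers: Dict[Stage, StageHandler], context: dict) -> WorkflowResult:
    """Run every stage in declaration order, stopping at the first failure.

    A missing handler raises an error, so no stage can be silently skipped.
    """
    result = WorkflowResult()
    for stage in Stage:  # iterating an Enum yields members in definition order
        handler = handlers.get(stage)
        if handler is None:
            raise ValueError(f"no handler for mandatory stage {stage.value!r}")
        if not handler(context):
            result.failed_at = stage
            return result
        result.completed.append(stage)
    return result


# Hypothetical handlers: testing fails, so review never runs.
def plan(ctx: dict) -> bool:
    ctx["plan"] = ["add /health endpoint"]
    return True


def implement(ctx: dict) -> bool:
    ctx["code"] = "def health():\n    return 'ok'"
    return True


def run_tests(ctx: dict) -> bool:
    return False  # simulate a failing test run


def review(ctx: dict) -> bool:
    return True


res = run_workflow(
    {Stage.PLANNING: plan, Stage.IMPLEMENTATION: implement,
     Stage.TESTING: run_tests, Stage.REVIEW: review},
    {},
)
print([s.value for s in res.completed], res.failed_at)
```

Raising on a missing handler, rather than skipping the stage, is the design choice that turns "planning, implementation, testing, and review" from a convention into an enforced sequence.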

Anchoring AI to Intent

A pervasive challenge that undermines the efficiency of AI coding assistants is a phenomenon known as “iteration drift,” where the code generated by an AI model gradually diverges from the original requirements over a series of conversational prompts. Each subsequent instruction, clarification, or correction can introduce subtle deviations, leading to a final product that may be functional but fails to meet the precise specifications of the initial task. This slow, almost imperceptible deviation creates a frustrating and time-consuming cycle of refinement, often negating the initial productivity gains. Developers find themselves constantly course-correcting the AI, a process that feels less like collaboration and more like a struggle to keep the model on track. This problem highlights the inherent weakness of a purely conversational interface for complex technical work, where precision and adherence to a plan are paramount.

Orchestration platforms combat this issue by mandating a specification-first approach, a discipline known as spec-driven development. Before any code is generated, the AI agents must first produce a detailed technical specification outlining the architecture, components, and logic of the proposed solution. They must then formulate a step-by-step implementation plan based on that approved specification. This preliminary process serves as an anchor, grounding all subsequent work in a clear, agreed-upon set of requirements. It is designed to keep the AI's output aligned with the developer's intent throughout the development lifecycle and thereby curb iteration drift. The approach has gained enough traction that major AI research labs have reportedly begun training their own flagship models to follow similar specification-first procedures, which suggests a broader industry consensus on its importance.
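One way to picture how a specification "anchors" later work is a traceability check run before any code is written. The sketch below assumes, purely for illustration, that each plan step cites the ID of the requirement it serves; `Spec`, `PlanStep`, and `check_plan` are hypothetical names, not any vendor's format. Steps that cite no known requirement are flagged as possible drift, and requirements no step addresses are flagged as omissions.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Spec:
    """An approved specification: requirement IDs mapped to descriptions."""
    requirements: Dict[str, str]


@dataclass(frozen=True)
class PlanStep:
    """One implementation step; it must cite the requirement it serves."""
    description: str
    requirement_id: str


def check_plan(spec: Spec, plan: List[PlanStep]) -> Dict[str, List[str]]:
    """Compare a plan against its spec before any code is generated.

    - 'untraced': steps citing no known requirement (possible drift)
    - 'uncovered': requirements no step addresses (possible omission)
    """
    known = set(spec.requirements)
    untraced = [s.description for s in plan if s.requirement_id not in known]
    covered = {s.requirement_id for s in plan}
    uncovered = sorted(known - covered)
    return {"untraced": untraced, "uncovered": uncovered}


spec = Spec({"R1": "Expose GET /users/{id}", "R2": "Return 404 for unknown IDs"})
plan = [
    PlanStep("Add route handler for GET /users/{id}", "R1"),
    PlanStep("Add Redis caching layer", "R9"),  # cites a requirement not in the spec
]
report = check_plan(spec, plan)
print(report)
```

Here the caching step is flagged as untraced and requirement R2 as uncovered, so the plan would be sent back for revision instead of drifting into code.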

A New Paradigm: Verification and Fleet Management

Building Trust in AI-Generated Code

Arguably the most transformative feature of the orchestration paradigm is multi-agent verification, a system designed to build trust and reliability into AI-generated code. The approach rests on the observation that AI models, particularly those from the same provider or "family," often share biases, blind spots, and stylistic patterns that lead to recurring types of errors. To counteract this, orchestration platforms deploy a cross-verification system that pits models from different providers against each other in an adversarial review process. For instance, the system might task an Anthropic Claude model with reviewing and critiquing code generated by an OpenAI GPT model, and vice versa. Much like seeking a second opinion from a specialist doctor, this process leverages the distinct training data and architectural differences between models to catch subtle flaws that a single model, or even a human developer, might miss. Proponents claim the method produces results on par with next-generation models, significantly improving the quality and robustness of the final code.
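The core rule of cross-provider verification, that the reviewer must come from a different model family than the author, can be sketched in a few lines. This is an illustrative assumption, not a real orchestration API: `Model`, `pick_reviewer`, and `generate_and_verify` are hypothetical, and the `call` field is a plain function standing in for a real provider client (no actual Anthropic or OpenAI SDK calls are shown). The stub reviewer in the example catches a bug that a same-family reviewer would have approved.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Model:
    """A model endpoint tagged with the provider 'family' it comes from.

    `call` is a hypothetical stand-in for a real API client: any function
    mapping a prompt string to a response string.
    """
    name: str
    family: str
    call: Callable[[str], str]


def pick_reviewer(author: Model, pool: List[Model]) -> Model:
    """Choose the first reviewer whose family differs from the author's."""
    for candidate in pool:
        if candidate.family != author.family:
            return candidate
    raise LookupError(f"no reviewer outside the {author.family!r} family")


def generate_and_verify(task: str, author: Model, pool: List[Model]) -> dict:
    """Generate code with one family, then have another family critique it."""
    code = author.call(f"Write code for: {task}")
    reviewer = pick_reviewer(author, pool)
    verdict = reviewer.call(f"Review critically. Reply APPROVE or list defects:\n{code}")
    return {
        "author": author.name,
        "reviewer": reviewer.name,
        "code": code,
        "approved": verdict.strip().startswith("APPROVE"),
        "review": verdict,
    }


# Stub models: the author writes a buggy add(); the same-family reviewer
# rubber-stamps everything, the other-family reviewer checks the logic.
author = Model("family-a-coder", "family-a", lambda p: "def add(a, b):\n    return a - b")
same_family = Model("family-a-reviewer", "family-a", lambda p: "APPROVE")
other_family = Model("family-b-reviewer", "family-b",
                     lambda p: "APPROVE" if "a + b" in p else "Defect: add() subtracts")

result = generate_and_verify("add two numbers", author, [same_family, other_family])
print(result["reviewer"], result["approved"])
```

In a real system the approval flag would gate the commit, and the reviewer's critique would be shown to the developer alongside the diff.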

This emphasis on verification directly targets one of the most significant criticisms of AI coding tools: their tendency to produce "slop," code that appears syntactically correct and may even pass superficial checks but contains subtle logical flaws that cause failures in production. Accepting such code leads to a common pitfall described as the "death loop." In this scenario, a developer, reluctant to spend time manually reviewing unfamiliar AI-generated logic, accepts the code without thorough validation. When a subsequent, related task fails, the developer lacks the understanding of the underlying code needed to debug the problem. Their only recourse is to return to the AI and prompt it repeatedly for a fix, a cycle that can waste an entire day or more. By institutionalizing a multi-agent review before any code is committed, orchestration platforms are designed to break this loop. The review improves the correctness of the code and, by surfacing a written critique of its logic, can help the developer understand that logic before it becomes part of the codebase.

Streamlining the Developer Workflow

Beyond ensuring code quality, modern orchestration tools are designed to fundamentally streamline the developer’s workflow by introducing sophisticated interfaces for parallel execution. This capability directly addresses the current cumbersome reality for many developers who use AI, which often involves juggling multiple terminal windows and browser tabs to manage different AI-driven processes simultaneously. A developer might have one window running a code generation task, another handling a debugging session, and a third performing a code review. This fragmented and manually intensive process is inefficient and prone to error, detracting from the deep focus required for complex software engineering. Orchestration platforms solve this by providing a unified command center that allows developers to manage a “fleet” of multiple AI agents at once, all from a single, intuitive interface.

This centralized management system represents a significant improvement in user experience and operational efficiency. Each AI agent operates within its own isolated sandbox environment, which is crucial for preventing agents from interfering with one another's tasks or accessing unintended parts of the codebase. This "fleet management" capability lets developers execute complex, multi-part tasks in parallel with confidence. For example, one agent could write unit tests for a new feature while another refactors a related module and a third analyzes the performance implications of the changes. By providing a structured, controlled environment for parallel AI operations, these platforms turn a chaotic, disjointed process into a streamlined workflow, allowing developers to use multiple AI agents at once without being overwhelmed by the complexity of managing them.
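The fleet pattern described above (several agents running in parallel, each confined to its own workspace) can be approximated with the standard library. In this sketch, `run_fleet` and `make_task` are hypothetical names, and each "agent" is just a function that writes a placeholder file. A per-agent temporary directory only keeps file output separate; it is an illustrative stand-in, not a security boundary, and real isolation would require containers or VMs.

```python
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Callable, Dict

# Hypothetical agent task: receives its private sandbox dir, returns a summary.
AgentTask = Callable[[Path], str]


def run_fleet(tasks: Dict[str, AgentTask]) -> Dict[str, str]:
    """Run every task concurrently, each in its own temporary directory.

    Note: separate temp dirs keep outputs apart but are not a security
    boundary; real sandboxing would need containers or VMs.
    """
    def run_one(name: str, task: AgentTask) -> str:
        with tempfile.TemporaryDirectory(prefix=f"agent-{name}-") as sandbox:
            return task(Path(sandbox))  # directory is deleted after the task returns

    with ThreadPoolExecutor(max_workers=len(tasks) or 1) as pool:
        futures = {name: pool.submit(run_one, name, task) for name, task in tasks.items()}
        return {name: f.result() for name, f in futures.items()}


def make_task(filename: str) -> AgentTask:
    """Build a stand-in agent that writes one file and lists its sandbox."""
    def task(sandbox: Path) -> str:
        (sandbox / filename).write_text("# placeholder agent output\n")
        # Only this agent's own file is visible in its sandbox.
        return ",".join(sorted(p.name for p in sandbox.iterdir()))
    return task


results = run_fleet({
    "tests": make_task("test_feature.py"),
    "refactor": make_task("module.py"),
    "perf": make_task("perf_report.md"),
})
print(results)
```

Each agent sees only the file it wrote, which mirrors the property the text emphasizes: parallel agents cannot trample one another's work.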

The Strategic Bet on the Application Layer

Carving a Niche in a Crowded Market

In an increasingly competitive landscape populated by dedicated AI-first code editors, established products like Microsoft's GitHub Copilot, and the frontier AI labs themselves, orchestration platforms are carving out a distinct strategic niche. Their core strategy is not to compete on foundational models but to become the indispensable, model-agnostic layer that sits on top of them. Reflecting the reality that developers and enterprises increasingly use a mix of AI providers for different tasks, platforms like Zenflow support models from Anthropic, OpenAI, and Google. This flexibility lets teams pick the best model for a given job without being locked into a single ecosystem, a critical advantage in a rapidly evolving market.
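A model-agnostic layer usually means that orchestration logic is written against a small, provider-neutral interface, with a routing table deciding which provider handles each kind of task. The sketch below is a hypothetical illustration of that idea, not Zenflow's architecture: `ModelClient`, `EchoClient`, and `Router` are invented names, and `EchoClient` is a placeholder where a wrapper around a real provider SDK would go.

```python
from typing import Dict, Protocol


class ModelClient(Protocol):
    """Minimal provider-neutral interface the orchestration layer codes against."""
    def complete(self, prompt: str) -> str: ...


class EchoClient:
    """Placeholder for a real provider SDK wrapper; returns a tagged echo."""
    def __init__(self, provider: str) -> None:
        self.provider = provider

    def complete(self, prompt: str) -> str:
        return f"[{self.provider}] {prompt}"


class Router:
    """Maps task kinds to whichever client is configured for them."""
    def __init__(self, routes: Dict[str, ModelClient], default: ModelClient) -> None:
        self.routes = routes
        self.default = default

    def run(self, kind: str, prompt: str) -> str:
        # Unknown task kinds fall back to the default client.
        return self.routes.get(kind, self.default).complete(prompt)


router = Router(
    routes={"codegen": EchoClient("provider-x"), "review": EchoClient("provider-y")},
    default=EchoClient("provider-z"),
)
print(router.run("review", "check diff"))  # routed to provider-y
print(router.run("docs", "summarize"))     # falls back to provider-z
```

Because callers depend only on `complete`, swapping one provider for another is a configuration change in the routing table rather than a rewrite of the workflow.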

The unique competitive edge for these platforms lies in their cross-provider verification capability. While a lab like OpenAI will naturally optimize its tools for its own models, it is highly unlikely to build a system that uses a direct competitor’s model, such as Claude, as a primary verifier. This creates a rare opening in the market for a neutral third party to provide this essential quality assurance function. Furthermore, these companies are heavily emphasizing their enterprise-readiness, pursuing and obtaining critical certifications such as SOC 2 Type II, ISO 27001, and ISO 42001, while also ensuring compliance with data privacy regulations like GDPR. This dedicated focus on security and compliance is crucial for attracting and retaining clients in highly regulated sectors such as finance and healthcare, differentiating them from more consumer-focused tools.

The Future is Orchestration

The emergence of sophisticated orchestration tools signals a pivotal shift in the AI coding industry. Engineering leaders, under intense pressure to deliver tangible productivity gains, can no longer afford a "wait-and-see" approach while tools that could potentially double their teams' velocity are becoming available. A natural division of labor is crystallizing within the ecosystem: the frontier labs focus their resources on building the most capable foundational models, while a new class of companies, such as Zencoder and Cursor, concentrates on building the application and user experience layers that make those models truly effective for professional developers.

This movement marks the end of the initial era dominated by simplistic, chat-based interfaces. Orchestration is becoming the prevailing theme as the industry acknowledges the limitations of unstructured interaction for complex engineering work. The evolution parallels the early days of digital work, when general-purpose tools like email and spreadsheets were used for every conceivable task, from communication to project planning. Just as specialized project management platforms emerged to bring structure and scalability to that chaos, orchestration platforms are positioned to bring discipline to "vibe coding." The ultimate bet is placed not just on the raw power of AI models, but on the belief that the software enabling developers to use those models effectively will be just as critical, if not more so, to the future of software development.