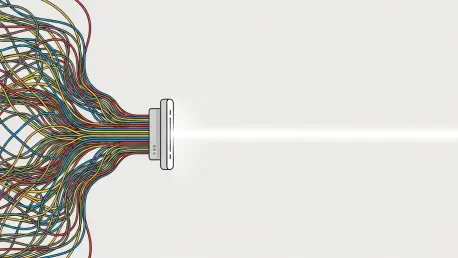

An artificial intelligence system capable of flawlessly summarizing a year’s worth of complex legal precedents or a corporation’s entire financial history represents a monumental leap in analytical power, yet this capability has remained tethered to an equally monumental and often prohibitive computational cost. As organizations seek to deploy AI for increasingly sophisticated tasks, they confront a billion-dollar question: how can models digest and reason over vast oceans of information without drowning in the expense of processing it all? A groundbreaking research initiative from Stanford University and Nvidia, centered on a method called “End-to-End Test-Time Training” (TTT-E2E), proposes a novel and elegant solution that could redefine the economics of long-term AI memory. This approach suggests a future where AI does not just recall information but continuously learns from it, achieving long-context mastery at a sustainable price.

When an AI’s Perfect Memory Comes With an Impossible Price Tag

The fundamental challenge in processing extensive documents, from lengthy technical manuals to sprawling codebases, stems from a deeply ingrained trade-off between an AI’s memory and its efficiency. For any enterprise application that relies on understanding context that stretches across thousands or even millions of words, this dilemma marks the boundary between what is possible and what is practical. The ability to connect a detail on page one with a concept on page five hundred is critical for tasks like legal discovery, compliance auditing, and advanced customer support, yet the technology to do so perfectly has been notoriously resource-intensive.

This high cost of memory has created a significant bottleneck for innovation, forcing developers into a difficult compromise. Deploying a model that can handle long-form content with perfect fidelity often means accepting slower response times and incurring substantial operational expenses, which limits its use to the highest-value applications. The research into TTT-E2E was born from this exact pain point, aiming to dismantle the financial barrier that has long prevented AI from achieving true long-term contextual understanding. By rethinking how a model stores and accesses information, the work offers a pathway to democratize long-context capabilities for a wider range of enterprise uses.

The Unwinnable War: A Flawless Memory vs. Sustainable Speed

At the heart of the long-context dilemma lies a technical conflict between two dominant classes of AI architecture. On one side are Full Self-Attention Transformers, the undisputed champions of accuracy. In these models, every new token is compared against every token that came before it. This exhaustive review grants them a form of lossless recall, enabling them to pinpoint and use details from anywhere in the input. However, this perfection comes at a steep, quadratic computational cost: when the document length doubles, the attention computation roughly quadruples, making this approach impractical and financially unviable for truly massive texts.
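
To make the quadratic growth concrete, the short Python sketch below simply counts the query-key scores a causal attention layer computes, and compares that with a sliding window in which each token only looks back a fixed distance. It ignores attention heads, hidden size, and hardware effects, and the 8,000-token window is an arbitrary illustrative value, not a setting taken from the research.

```python
def full_attention_pairs(n_tokens: int) -> int:
    """Query-key scores computed by causal full attention over n_tokens.

    Token i (1-indexed) attends to itself and the i - 1 tokens before it,
    so the total is 1 + 2 + ... + n = n * (n + 1) / 2.
    """
    return n_tokens * (n_tokens + 1) // 2


def sliding_window_pairs(n_tokens: int, window: int) -> int:
    """Query-key scores when each token attends only to the last `window` tokens."""
    if n_tokens <= window:
        return full_attention_pairs(n_tokens)
    # The first `window` tokens behave like full attention; every later token sees exactly `window`.
    return full_attention_pairs(window) + (n_tokens - window) * window


if __name__ == "__main__":
    WINDOW = 8_000  # illustrative window size, not a value taken from the research
    for n in (32_000, 64_000, 128_000):
        print(f"{n:>7} tokens  full: {full_attention_pairs(n):>14,}  window: {sliding_window_pairs(n, WINDOW):>13,}")
```

Going from 64,000 to 128,000 tokens roughly quadruples the full-attention count, while the windowed count only roughly doubles, which is the gap the rest of this article is about.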

On the other side of this conflict are linear-time models, such as Recurrent Neural Networks (RNNs). These architectures are lauded for their efficiency: they fold everything they have read into a fixed-size memory state, so the cost of processing each new token stays constant no matter how much information is fed into them. Their drawback is a tendency to “forget” crucial details over long sequences, as older information gets diluted or overwritten in that fixed-size state. For enterprises analyzing streams of system logs, complex patient histories, or multi-year support ticket threads, this propensity for forgetting is an unacceptable risk. The industry has long sought a model that breaks this zero-sum game, delivering the sustained accuracy of a Transformer with the sustainable speed of an RNN.
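
A toy scalar recurrence shows both sides of that trade-off. The sketch below assumes the simplest possible linear RNN, h_t = decay * h_{t-1} + x_t, with a hypothetical decay of 0.99; real RNNs and state space models such as Mamba 2 use learned, input-dependent gating, so this illustrates only the general mechanism by which a fixed-size state dilutes old inputs.

```python
def run_linear_rnn(inputs, decay):
    """Toy scalar recurrence h_t = decay * h_{t-1} + x_t.

    The state h is a single number no matter how long `inputs` is, so each
    step costs the same; the price is that old inputs are scaled down by
    `decay` at every step.
    """
    h = 0.0
    for x in inputs:
        h = decay * h + x
    return h


def first_token_influence(sequence_length, decay):
    """How much a 1.0 at position 0 still contributes to the final state."""
    impulse = [1.0] + [0.0] * (sequence_length - 1)
    return run_linear_rnn(impulse, decay)


if __name__ == "__main__":
    for length in (10, 1_000, 10_000):
        print(length, first_token_influence(length, decay=0.99))
```

The first token's contribution shrinks geometrically, as decay ** (length - 1), so after a few thousand steps it is effectively gone even though the per-step cost never changed.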

The Breakthrough: Teaching AI How to Learn on the Job

The innovation proposed by the TTT-E2E method represents a paradigm shift away from deploying static, pre-trained models toward using dynamic systems that learn continuously. Traditionally, an AI model is trained exhaustively and then “frozen,” with its internal weights fixed before deployment. The TTT-E2E approach discards this rigidity, reimagining the model as a perpetual student that adapts and updates its understanding in real time as it processes new information during inference. This is not about simply adding new facts to a database but about updating the model’s own weights so that they reflect the new knowledge.

This dynamic capability is achieved by changing the training objective itself. Instead of teaching the model a fixed set of facts, training focuses on meta-learning: teaching the model how to learn effectively on its own. This is accomplished through a two-loop optimization that simulates on-the-job training. In an “inner loop,” the model practices updating its weights as it reads a stream of text. In an “outer loop,” the system evaluates how well the model learned and adjusts the base parameters the inner loop starts from, making future learning cycles faster and more stable. The result is a model that arrives not as a finished product but as an optimized learner, ready to absorb the specifics of any document it encounters.
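
The two loops can be sketched at toy scale. This is a minimal illustration under heavy simplifying assumptions, not the authors’ code: a one-parameter linear model with squared error stands in for a language model and next-token prediction, each “document” is a hypothetical stream of (x, y) chunks generated by its own slope, and every function name is invented for the example. The inner loop takes one gradient step per chunk; the outer loop differentiates the loss measured after adaptation back through the entire inner loop (done analytically here) to improve the starting weight.

```python
import random


def inner_loop(w0, chunks, lr):
    """Inner loop: adapt a scalar weight with one gradient step per chunk of the stream.

    Returns the adapted weight and d(adapted weight)/d(w0), which the outer loop
    needs in order to differentiate through the whole inner loop (end to end).
    """
    w, dw_dw0 = w0, 1.0
    for xs, ys in chunks:
        n = len(xs)
        mean_xx = sum(x * x for x in xs) / n
        mean_xy = sum(x * y for x, y in zip(xs, ys)) / n
        grad = 2.0 * (w * mean_xx - mean_xy)  # d/dw of mean((w*x - y)^2)
        w = w - lr * grad
        # The update is linear in w, so each step scales the derivative by a constant.
        dw_dw0 *= 1.0 - 2.0 * lr * mean_xx
    return w, dw_dw0


def make_task(rng, slope, n_chunks=4, chunk_size=8):
    """Hypothetical 'document': chunks of (x, y) pairs generated by y = slope * x."""
    chunks = []
    for _ in range(n_chunks + 1):
        xs = [rng.uniform(-1.0, 1.0) for _ in range(chunk_size)]
        chunks.append((xs, [slope * x for x in xs]))
    return chunks[:-1], chunks[-1]  # stream to learn from, held-out chunk to evaluate on


def meta_train(steps=500, inner_lr=0.1, outer_lr=0.05, seed=0):
    """Outer loop: tune the starting weight w0 so the inner loop ends with low loss."""
    rng = random.Random(seed)
    w0 = 0.0
    for _ in range(steps):
        slope = rng.uniform(2.0, 4.0)  # every task has a different true slope
        stream, (xs, ys) = make_task(rng, slope)
        w, dw_dw0 = inner_loop(w0, stream, inner_lr)
        n = len(xs)
        # Gradient of the held-out loss with respect to the adapted weight ...
        dloss_dw = 2.0 * sum(x * (w * x - y) for x, y in zip(xs, ys)) / n
        # ... chained back through the inner loop to the starting weight w0.
        w0 -= outer_lr * dloss_dw * dw_dw0
    return w0


if __name__ == "__main__":
    print(meta_train())
```

With these settings the learned starting weight should settle near 3.0, the average slope across tasks, because that is the starting point from which a few inner steps get closest to any individual task.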

The TTT-E2E architecture facilitates this on-the-fly learning through an ingenious dual-memory system. For its short-term “working memory,” it uses Sliding Window Attention (SWA), in which each token attends only to a fixed-size window of the most recent text, keeping per-token inference cost low and constant. The true breakthrough is its long-term “compressed memory.” Information that leaves the SWA window is not simply discarded. Instead, its essential meaning is compressed and integrated into a set of dedicated dynamic layers whose weights are updated as the model reads. This mechanism allows the model to build a rich, evolving summary of the entire document’s history without having to store every word, mitigating the wholesale forgetting that plagues other efficient models.
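
The shape of a two-tier memory can be sketched with a deliberately simplified stand-in. In TTT-E2E the compressed memory lives in model layers updated by gradient steps during reading; in the hypothetical `DualMemory` class below, a single matrix updated by the delta rule (one gradient step on a squared-error reconstruction loss) plays that role, and exact (key, value) pairs play the role of the sliding window. The class name, its parameters, and the key-value framing are all assumptions made for illustration.

```python
from collections import deque


class DualMemory:
    """Toy two-tier memory (hypothetical): exact recent items plus a fixed-size compressed store."""

    def __init__(self, dim, window, lr=0.5):
        self.window = deque(maxlen=window)          # working memory: last `window` (key, value) pairs
        self.W = [[0.0] * dim for _ in range(dim)]  # compressed memory: dim x dim "fast weights"
        self.lr = lr

    def _predict(self, key):
        """Approximate recall from the compressed store: W @ key."""
        return [sum(w * k for w, k in zip(row, key)) for row in self.W]

    def _compress(self, key, value):
        """One gradient step on 0.5 * ||W key - value||^2 (the delta rule)."""
        error = [v - p for v, p in zip(value, self._predict(key))]
        for i, e in enumerate(error):
            for j, k in enumerate(key):
                self.W[i][j] += self.lr * e * k

    def write(self, key, value):
        """Add a pair; the oldest pair is folded into W just before it leaves the window."""
        if len(self.window) == self.window.maxlen:
            self._compress(*self.window[0])
        self.window.append((key, value))  # deque drops the oldest entry automatically

    def read(self, key):
        """Exact lookup in the window first, otherwise fall back to the compressed store."""
        for k, v in reversed(self.window):
            if k == key:
                return v
        return self._predict(key)


if __name__ == "__main__":
    mem = DualMemory(dim=3, window=2, lr=1.0)
    keys = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    values = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
    for k, v in zip(keys, values):
        mem.write(k, v)
    print(mem.read(keys[0]))  # evicted from the window, recovered from W
    print(mem.read(keys[2]))  # still in the window, returned verbatim
```

With orthogonal keys and a learning rate of 1.0 the evicted value comes back exactly; with a smaller learning rate or overlapping keys it comes back only approximately, because the compressed tier trades exactness for a size that never grows.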

Putting the New Memory to the Test: From Theory to Performance

To validate this new approach, researchers conducted extensive experiments, pitting TTT-E2E models against powerful baselines across contexts scaling up to 128,000 tokens, roughly the length of a long novel. The results were compelling. While established efficient models like Mamba 2 saw their performance plateau or even degrade with more context, TTT-E2E demonstrated the same desirable scaling behavior as a Full Attention Transformer. Its perplexity (a measure of how well a model predicts the next token, where lower is better) kept improving as it was fed more information, demonstrating that it can make effective use of its long-term memory.
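
Perplexity is the exponential of the average negative log-likelihood a model assigns to the true next tokens. The sketch below computes it from per-token log-probabilities and, as an assumed way of checking context scaling, also per bucket of token positions, so one can see whether tokens deep in a document become easier to predict. The log-probabilities in the usage example are made up for illustration, not results from the research.

```python
import math


def perplexity(token_log_probs):
    """exp of the mean negative log-likelihood; inputs are natural-log probabilities of the true tokens."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


def perplexity_by_position(token_log_probs, bucket_size):
    """Perplexity of consecutive position buckets, to check whether later tokens get easier to predict."""
    return [
        perplexity(token_log_probs[start:start + bucket_size])
        for start in range(0, len(token_log_probs), bucket_size)
    ]


if __name__ == "__main__":
    # Made-up log-probabilities: the model grows more confident deeper into the document.
    log_probs = [math.log(0.25)] * 4 + [math.log(0.5)] * 4
    print(perplexity_by_position(log_probs, bucket_size=4))  # roughly [4.0, 2.0]
```

A perplexity of 4 means the model is, on average, as uncertain as if it were choosing uniformly among four tokens; a model that uses long context well shows this number falling in later position buckets.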

The performance metrics revealed a model that achieved the best of both worlds. In terms of accuracy, the 3-billion-parameter TTT-E2E model outperformed its Full Attention counterpart on the long-context benchmarks. At the same time, it was far more efficient: when processing a 128,000-token document on cutting-edge Nvidia GPUs, the TTT-E2E model was 2.7 times faster than a Full Attention Transformer. Addressing potential enterprise concerns about a model that modifies itself during operation, co-author Yu Sun noted that its stability is mathematically sound and comparable to that of standard RNNs, which are already trusted in production environments.

Redefining the Enterprise Playbook: A New Role for RAG

Despite its remarkable capabilities, the compression-based memory of TTT-E2E is not without its limitations. This became evident during the “Needle in a Haystack” test, an evaluation designed to see whether a model can retrieve a single, randomly placed fact hidden within a massive volume of text. In this scenario, the lossless cache of a Full Attention Transformer, which keeps every earlier token available for exact lookup, allowed it to excel, while TTT-E2E, which prioritizes semantic meaning over verbatim recall, struggled to find the specific detail. This highlights a key distinction: TTT-E2E is designed to understand the gist, not to memorize every word.
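
A Needle in a Haystack test is easy to assemble. The sketch below assumes a common version of the setup (the exact protocol used in the research is not described here): a verbatim “needle” sentence is buried at a chosen relative depth inside filler text, the model is asked for it, and the answer passes only if the exact fact appears. The helper names and filler sentences are hypothetical, and the model call itself is left out.

```python
import random


def build_haystack(needle, filler_sentences, n_sentences, depth, seed=0):
    """Bury `needle` at relative `depth` (0.0 = start, 1.0 = end) inside random filler text.

    Returns the assembled text and the sentence index where the needle landed.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    rng = random.Random(seed)
    sentences = [rng.choice(filler_sentences) for _ in range(n_sentences)]
    position = round(depth * n_sentences)
    sentences.insert(position, needle)
    return " ".join(sentences), position


def score_retrieval(model_answer, expected_fact):
    """Exact-match scoring: pass only if the verbatim fact appears in the answer."""
    return expected_fact.lower() in model_answer.lower()


if __name__ == "__main__":
    text, pos = build_haystack(
        needle="The vault code is 7481.",
        filler_sentences=["The meeting ran long.", "Sales were flat.", "The report was filed."],
        n_sentences=1_000,
        depth=0.35,
    )
    prompt = text + "\n\nQuestion: What is the vault code?"
    # `prompt` would be sent to the model under test; its reply is then scored, for example:
    print(pos, score_retrieval("The code is 7481.", "7481"))
```

Because scoring is verbatim, a model that remembers only that “a code was mentioned” fails the test, which is exactly where a compressed, gist-oriented memory is at a disadvantage.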

This limitation positions TTT-E2E not as a replacement for technologies like Retrieval-Augmented Generation (RAG) but as a powerful collaborator. RAG systems, which pull exact information from an external database, remain essential for tasks requiring perfect factual recall. The relationship can be understood through an analogy: TTT-E2E functions like the human brain, absorbing the general knowledge, themes, and connections within a document. RAG, in contrast, acts as the “notepad” one uses to jot down a specific password or address that must be remembered perfectly.
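
The “notepad” side of that pairing can be sketched in a few lines. In this hypothetical example, a tiny retrieval store keeps document chunks verbatim and returns the best match for a question, and the retrieved text is placed in front of the question so a long-context model can combine exact notes with its own compressed understanding. Keyword overlap stands in for the embedding search most production RAG systems use, and all names are invented for illustration.

```python
import re


def tokenize(text):
    """Lowercase word set; a crude stand-in for real retrieval features."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class Notepad:
    """Toy retrieval store that keeps every chunk verbatim (hypothetical names)."""

    def __init__(self, chunks):
        self.chunks = list(chunks)
        self.index = [tokenize(chunk) for chunk in self.chunks]

    def retrieve(self, query, k=1):
        """Return the k chunks sharing the most words with the query (ties keep original order)."""
        query_terms = tokenize(query)
        ranked = sorted(
            range(len(self.chunks)),
            key=lambda i: len(query_terms & self.index[i]),
            reverse=True,
        )
        return [self.chunks[i] for i in ranked[:k]]


def build_prompt(question, notepad, k=1):
    """Pair exact, retrieved notes with the question; the long-context model supplies the gist."""
    notes = "\n".join(f"- {chunk}" for chunk in notepad.retrieve(question, k))
    return f"Exact notes:\n{notes}\n\nQuestion: {question}"


if __name__ == "__main__":
    pad = Notepad([
        "The quarterly review covered hiring plans.",
        "The server password is hunter2.",
        "Revenue grew in the third quarter.",
    ])
    print(build_prompt("What is the server password?", pad))
```

The design choice mirrors the analogy above: the notepad never paraphrases, so exact strings such as passwords survive, while questions about themes or connections are left to the model’s learned memory.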

This symbiotic relationship points toward a new strategic playbook for enterprise AI. Future systems may leverage a dual-memory approach on a larger scale, using a moderately sized perfect-recall window for immediate tasks while relying on a vast, compressed memory built with TTT-E2E to maintain long-term context over billions of tokens. This hybrid model could handle everything from real-time customer conversations to in-depth analysis of an organization’s entire documented history, finally breaking the long-standing compromise between memory, speed, and cost.

This research signals a crucial evolution in the pursuit of artificial intelligence with human-like memory. The focus is shifting from building ever-larger, static archives of information toward creating dynamic systems capable of intelligent and efficient compression. By teaching models how to learn on the job, the Stanford and Nvidia teams have provided a tangible blueprint for AI that can not only read a book but also remember its story. The work represents a significant step toward a future in which AI’s long-term memory is no longer a costly luxury but a practical and accessible reality for a new generation of enterprise applications.