The Seismic Shift That Redefined Voice Interaction Overnight

Until recently, the hallmark of interacting with a voice AI was the awkward, multi-second pause, a digital breath that shattered any illusion of natural conversation. Businesses built their strategies around these limitations, accepting stilted, “request-response” interactions as the cost of innovation. That era is ending. In a rapid succession of releases from a handful of leading AI labs, the foundational pillars of voice technology have been rebuilt. The once “impossible” challenges of latency, conversational fluidity, data efficiency, and emotional intelligence are now being addressed not just in theory but in commercially available models. This article explores the shift, dissecting how the technical landscape has changed and offering a new strategic playbook for enterprises that want to stay relevant in an industry where yesterday’s cutting-edge technology is today’s relic.

From Clunky Chatbots to Conversational Partners: A Brief History of Voice AI’s Limitations

To understand the magnitude of the current revolution, one must first appreciate the constraints that defined the previous generation of voice AI. For years, the user experience was dictated by a slow, linear process: a user would speak, the audio was sent to a server, transcribed into text, processed by a language model, and then synthesized back into audio. This multi-step journey was the source of the infamous 2-5 second delay that made conversations feel frustratingly robotic. This “half-duplex” model, functioning like a walkie-talkie where only one party can speak at a time, prevented users from interrupting or correcting the AI, forcing them into a rigid, unnatural conversational pattern. These fundamental technical hurdles confined voice AI to simple command-and-control functions, making it a functional tool but a poor conversationalist, incapable of grasping the nuance, speed, and emotional texture of human interaction.
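To make the source of that delay concrete, here is a rough Python sketch that adds up the stages of the linear pipeline described above. The stage names follow the paragraph; the millisecond figures are illustrative assumptions, not measurements of any real system.

```python
from typing import Mapping

# Hypothetical per-stage latencies in milliseconds for a cascaded pipeline.
# Illustrative assumptions only, not measurements of any real system.
CASCADE_STAGES_MS = {
    "upload_audio": 300,
    "speech_to_text": 800,
    "language_model": 1200,
    "text_to_speech": 700,
}


def total_latency_ms(stages: Mapping[str, int]) -> int:
    """Stages run one after another, so their delays simply add up."""
    return sum(stages.values())


if __name__ == "__main__":
    print(total_latency_ms(CASCADE_STAGES_MS))  # 3000
```

Because every stage waits for the one before it, shaving time off a single step helps only marginally; the structure itself is what produces a multi-second pause.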

Deconstructing the Revolution: How Four Impossible Problems Were Solved

From Awkward Pauses to Instantaneous Dialogue: The End of Latency

The most immediate and noticeable failure of past voice AI was its inability to respond in real time. The natural rhythm of human conversation involves response gaps of around 200 milliseconds; anything much longer starts to feel unnatural. The new generation of models has largely closed this gap. Inworld AI’s TTS 1.5, for example, reports a latency of under 120 milliseconds, which is shorter than the typical gap between turns in human conversation. Similarly, FlashLabs’ Chroma 1.0 employs a novel “streaming architecture” that begins generating an audio response while still processing the user’s input, bypassing the traditional, sequential workflow. The combined impact of these innovations is profound: the “thinking pause” all but disappears. For customer service bots, interactive training avatars, and in-car assistants, this leap transforms the interaction from a clunky transaction into a believable dialogue, and makes any system with a perceptible delay look dated.
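The difference between the sequential workflow and a streaming one can be sketched with plain Python generators. This is a toy illustration of the general idea only, not a description of how Chroma 1.0 or any other product is implemented; the function names and the sample utterance are hypothetical.

```python
from typing import Callable, Iterable, Iterator, List


def batch_respond(chunks: Iterable[str]) -> Iterator[str]:
    """Sequential flow: wait for the whole utterance, then answer once."""
    heard = list(chunks)  # blocks until the user has finished speaking
    yield "reply to: " + " ".join(heard)


def streaming_respond(chunks: Iterable[str]) -> Iterator[str]:
    """Streaming flow: emit a partial response as each chunk arrives."""
    heard: List[str] = []
    for chunk in chunks:
        heard.append(chunk)
        yield "partial reply after: " + " ".join(heard)


def chunks_heard_before_first_output(
    respond: Callable[[Iterable[str]], Iterator[str]]
) -> int:
    """Count how many input chunks are consumed before any output appears."""
    consumed = 0

    def microphone() -> Iterator[str]:
        nonlocal consumed
        for chunk in ["what", "is", "my", "balance"]:
            consumed += 1
            yield chunk

    next(respond(microphone()))  # pull only the first piece of output
    return consumed


if __name__ == "__main__":
    print(chunks_heard_before_first_output(batch_respond))      # 4
    print(chunks_heard_before_first_output(streaming_respond))  # 1
```

The batch version cannot say anything until it has heard all four chunks, while the streaming version starts producing output after the first one, which is where the reduction in perceived latency comes from.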

Beyond Your Turn: Achieving True Conversational Fluidity

Solving for speed was only the first step. True human conversation is a dynamic, overlapping exchange, not a turn-based game. This is where Nvidia’s PersonaPlex model introduces a crucial breakthrough with its “full-duplex” capability. Its dual-stream architecture allows it to listen and speak simultaneously, enabling it to process user interruptions in real time. If a user starts speaking while the AI is in the middle of a sentence, the model can stop, listen, and pivot its response instantly. Critically, it also understands “backchanneling”: the subtle verbal cues like “uh-huh” and “got it” that humans use to signal engagement, and that should not be mistaken for an attempt to take the floor. Telling the two apart makes conversations more efficient and natural; the AI can keep talking through an “uh-huh,” yet a user can cut short a lengthy legal disclaimer with a simple “Okay, move on,” and the AI will understand and comply. This move from a rigid, turn-based exchange to a fluid dialogue is a monumental step toward creating truly helpful and less frustrating automated assistants.
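The decision a full-duplex system has to make while it is speaking can be sketched as a small rule in Python. A model like the one described above would make this judgment from the audio itself; the keyword list and the function name here are hypothetical stand-ins used only to show the logic.

```python
# Short acknowledgements treated as backchannels (an illustrative list).
BACKCHANNELS = {"uh-huh", "mm-hmm", "got it", "right", "okay"}


def react_to_user_audio(agent_is_speaking: bool, utterance: str) -> str:
    """Decide what the agent does when the user says something."""
    text = utterance.strip().lower().rstrip(".!?,")
    if not agent_is_speaking:
        return "respond"          # normal turn: the floor is free
    if text in BACKCHANNELS:
        return "keep_speaking"    # listener is only signalling engagement
    return "stop_and_listen"      # genuine interruption: yield the floor


if __name__ == "__main__":
    print(react_to_user_audio(True, "Uh-huh."))              # keep_speaking
    print(react_to_user_audio(True, "Okay, move on."))       # stop_and_listen
    print(react_to_user_audio(False, "What's my balance?"))  # respond
```

A half-duplex system has no equivalent of the second and third branches, because it is not listening at all while it talks.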

Democratizing Voice AI: Efficiency, Accessibility, and Emotional Intelligence

Beyond speed and fluidity, two other barriers have fallen: data inefficiency and a lack of emotional awareness. Alibaba’s Qwen3-TTS model addresses the first with a groundbreaking tokenizer that can represent high-fidelity speech using just 12 tokens per second. This radical compression makes high-quality voice AI lightweight and affordable, enabling deployment on edge devices or in low-bandwidth environments. The second and perhaps most significant breakthrough is the integration of emotional intelligence. Previous AI models were “sociopaths by design,” capable of processing words but blind to the user’s emotional state. Hume AI, now strategically licensed and integrated by Google DeepMind, is changing this by creating an “emotional layer” for AI. It moves beyond mere text analysis to understand tone, dialect, and emotional modulation, allowing an AI to “read the room” and respond with appropriate empathy—a critical function for applications in healthcare, finance, and customer relations.
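To put the tokenizer figure quoted above in perspective, a short Python calculation shows how many tokens a minute of speech would need at 12 tokens per second. The 50 tokens-per-second comparison rate is a hypothetical baseline chosen for illustration, not a figure from any specific codec.

```python
QUOTED_TOKENS_PER_SECOND = 12       # rate quoted in the text above
HYPOTHETICAL_BASELINE_RATE = 50     # assumed comparison rate, for illustration


def tokens_for_speech(duration_s: float, tokens_per_second: float) -> int:
    """Number of tokens needed to represent `duration_s` seconds of audio."""
    return round(duration_s * tokens_per_second)


if __name__ == "__main__":
    print(tokens_for_speech(60, QUOTED_TOKENS_PER_SECOND))    # 720
    print(tokens_for_speech(60, HYPOTHETICAL_BASELINE_RATE))  # 3000
```

Fewer tokens per second means fewer generation steps and less data to move for the same stretch of audio, which is what makes edge and low-bandwidth deployment plausible.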

The New Competitive Frontier: Building the Modern Voice Stack

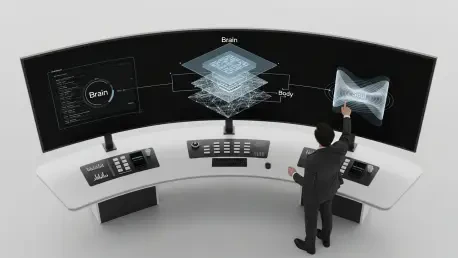

With the core technical challenges of voice AI largely solved and increasingly commoditized, the competitive landscape has shifted. Speed, responsiveness, and interruptibility are no longer differentiators; they are the new baseline. The future of voice AI will be defined less by raw technical performance than by the quality of the user experience, with emotional resonance as the ultimate goal. To achieve this, enterprise builders must adopt a new, three-part “Voice Stack.” The first layer is the “Brain,” a powerful Large Language Model like GPT-4o or Gemini that provides core reasoning. The second is the “Body,” built from the new generation of efficient, responsive open-weight models such as PersonaPlex and Chroma that handle the mechanics of conversation. The final and most crucial layer is the “Soul,” a specialized emotional intelligence engine like Hume AI that lets the AI understand and respond to human feeling, creating interactions that are not just functional but empathetic.
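One way to picture the three layers is as a simple configuration object. The Python sketch below is a hypothetical illustration: the `VoiceStack` class and its field names are invented here to mirror the “Brain,” “Body,” and “Soul” labels, and the component values are just names taken from the text, not calls to any real API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class VoiceStack:
    brain: Optional[str] = None  # reasoning LLM
    body: Optional[str] = None   # speech model(s) handling conversation mechanics
    soul: Optional[str] = None   # emotional intelligence layer

    def missing_layers(self) -> List[str]:
        """Return the names of layers that have no component assigned."""
        layers = {"brain": self.brain, "body": self.body, "soul": self.soul}
        return [name for name, component in layers.items() if component is None]


if __name__ == "__main__":
    stack = VoiceStack(brain="GPT-4o", body="PersonaPlex")
    print(stack.missing_layers())  # ['soul']
```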

Actionable Strategies for the New Era of Voice AI

The rapid obsolescence of old voice technology demands immediate action. The first step for any organization is to conduct a critical audit of its existing voice AI strategy. Does your system respond in under a second? Can users interrupt it naturally? Does it operate with an awareness of the user’s emotional context? If the answer to any of these questions is no, your strategy is already behind the curve. The path forward involves a strategic upgrade to the modern voice stack. For many, this will mean leveraging the new, commercially permissive open-weight models to rebuild the “Body” of their systems for superior performance and efficiency. The most forward-thinking organizations will then integrate an emotional intelligence layer—the “Soul”—to create truly next-generation, empathetic experiences that build trust and drive engagement.
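The three audit questions above translate directly into a small checklist. The following Python sketch is one possible encoding; the `VoiceSystemProfile` class, its field names, and the example values are hypothetical, and the one-second threshold comes from the first question.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VoiceSystemProfile:
    response_latency_ms: float
    supports_interruption: bool
    tracks_emotional_context: bool


def audit_gaps(profile: VoiceSystemProfile) -> List[str]:
    """Return one entry for each audit question answered 'no'."""
    gaps = []
    if profile.response_latency_ms >= 1000:
        gaps.append("does not respond in under a second")
    if not profile.supports_interruption:
        gaps.append("users cannot interrupt naturally")
    if not profile.tracks_emotional_context:
        gaps.append("no awareness of emotional context")
    return gaps


if __name__ == "__main__":
    legacy = VoiceSystemProfile(3000, False, False)
    for gap in audit_gaps(legacy):
        print("-", gap)
```

An empty list means the system passes all three questions; anything else is a concrete starting point for the upgrade.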

Conclusion: Adapt or Be Left Behind in the Empathetic AI Revolution

The world of voice AI has undergone a fundamental transformation. The clumsy, frustrating bots of the past are being superseded by a new class of technology capable of interacting with near-human speed, fluidity, and emotional awareness. This is not a distant future; it is the new standard for the current market. The shift from a purely functional to an emotionally intelligent interface removes one of the last major barriers between humans and machines. For enterprises, the message is clear: the strategies and technologies that were sufficient yesterday are now a liability. The time has come to move beyond chatbots that can speak and begin building empathetic partners that can listen, understand, and connect. Those who fail to adapt will find their voice echoing in an empty room.