An artificial intelligence model achieved in four days of training what took a highly skilled human expert two years of dedicated practice to learn. It is a striking display of accelerated progress, yet it also exposes a looming problem at the heart of the AI revolution. This paradox defines the current state of artificial intelligence: headline-grabbing advances mask a hidden and unsustainable cost, an insatiable appetite for data. As AI models grow in power and complexity, they are rapidly consuming the world’s finite supply of high-quality training data, creating a critical bottleneck that threatens the pace of future innovation. This is not a distant theoretical problem but a present-day reality, already visible in the development of cutting-edge systems. This analysis examines the trend through a state-of-the-art AI coding model, covering the concrete evidence, the implications for the industry, and the solutions that researchers are now racing to develop.

Evidence of the Trend: The Voracious Appetite of Modern AI

The rapid acceleration of AI capabilities is no longer a matter of speculation; it is a measurable phenomenon with clear benchmarks. Yet this speed comes at a cost that is often overlooked in the race for performance. A closer look at how these advanced models are trained reveals a dependency on data that can exceed human learning requirements by more than an order of magnitude. This disparity highlights a fundamental inefficiency in current AI methods, one that is pushing the industry toward a hard resource limit. The trend is clear: progress is becoming synonymous with unprecedented data consumption, and that path is proving to be unsustainable.

Benchmarking a New Era of AI Performance

Recent results show how quickly AI development is moving. Nous Research’s NousCoder-14B, a model specialized for competitive programming, recently reported 67.87 percent accuracy on the rigorous LiveCodeBench v6 benchmark. The benchmark evaluates models on competitive programming problems published between August 2024 and May 2025; because the problems are recent, it lowers the risk that a model has already seen them during training. For a 14-billion-parameter model, that score is a strong showing in sophisticated reasoning and code generation.

What makes this result particularly remarkable is the timeline. Starting from an existing base model, the training run took just four days on a cluster of 48 Nvidia B200 GPUs. This short development cycle reflects the efficiency of modern hardware and training frameworks and lets researchers iterate at a pace that was previously impractical. Going from a base model to a top-tier specialist in under a week represents a major shift in how AI research and development is done.
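For a sense of scale, the compute described above can be expressed in GPU-hours. This is a back-of-the-envelope figure that assumes all 48 GPUs were busy for the full four days; actual utilization is not stated here.

```latex
\[
48~\text{GPUs} \times 4~\text{days} \times 24~\tfrac{\text{hours}}{\text{day}}
  = 48 \times 96~\text{GPU-hours} = 4{,}608~\text{GPU-hours}
\]
```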

This accelerated learning stands in stark contrast to the human equivalent. Joe Li, a researcher on the project and a former competitive programmer, noted that achieving a similar jump in skill took him nearly two years of consistent practice as a teenager. The model made its jump in 96 hours of training, while the human needed roughly two years of study and problem-solving. The model did not start from scratch, however: its base model had already been pretrained on large amounts of text and code. This dramatic compression of the learning timeline underscores the raw power of contemporary AI, but it also raises the question of what resources are required to fuel such rapid advancement.

A Case Study in Sample Inefficiency

The true nature of AI’s data dependency becomes clear when examining the training process of NousCoder-14B more closely. To reach its benchmark score, the model was trained on a dataset of 24,000 distinct competitive programming problems. Each problem required the model to understand complex constraints, devise an algorithm, and generate code that is both correct and efficient enough to pass the problem’s tests, making every problem a substantial unit of learning. This large collection of examples formed the bedrock of its accelerated skill acquisition.

This data consumption starkly contrasts with the human learning process. The human expert, Joe Li, estimated that he solved approximately 1,000 problems to achieve a comparable level of proficiency. While the human brain can generalize abstract principles and apply them to new scenarios from a relatively small set of examples, the AI model required a much larger volume of data to build its understanding. This reveals a fundamental difference in learning efficiency between biological and artificial intelligence.

The resulting 24-to-1 ratio of problems required by the AI compared to the human expert serves as a clear and quantifiable demonstration of modern AI’s sample inefficiency. While AI learns faster in terms of absolute time, it is vastly less efficient in terms of the data it needs to consume. This dependence on massive datasets is not merely a technical detail; it is the central pillar of the current development paradigm. It is also the trend’s most significant vulnerability, as the finite nature of high-quality data begins to cast a shadow over future progress.
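The two ratios behind this comparison can be checked with a few lines of Python. The 24,000 and 1,000 problem counts and the four-day training time come from the text; treating “nearly two years” as exactly 730 days is an assumption made purely for illustration.

```python
from datetime import timedelta

# Figures quoted in the article. "Nearly two years" is approximate, so we
# assume 730 calendar days purely for illustration.
MODEL_PROBLEMS = 24_000
HUMAN_PROBLEMS = 1_000
MODEL_TIME = timedelta(days=4)
HUMAN_TIME = timedelta(days=730)

# Data ratio: problems the model trained on per problem the human solved.
data_ratio = MODEL_PROBLEMS / HUMAN_PROBLEMS

# Time ratio: dividing one timedelta by another yields a plain float.
time_ratio = HUMAN_TIME / MODEL_TIME

print(f"Data: the model used {data_ratio:.0f}x as many problems")  # 24x
print(f"Time: the model finished {time_ratio:.1f}x sooner")        # 182.5x
```

The two numbers point in opposite directions, which is the paradox at the center of this trend: roughly 180 times faster in wall-clock terms, yet 24 times hungrier for examples. The time ratio also flatters the model, since the human’s two years were not spent practising around the clock and the model began from a pretrained base model.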

Expert Insight: Reaching the Limits of Available Data

The challenge of data inefficiency is now colliding with the practical limits of data availability. Researchers at the forefront of AI development are no longer just theorizing about a future data shortage; they are actively encountering it. Projects like NousCoder-14B offer a sobering look at a resource that was once treated as nearly infinite. This realization marks a critical inflection point, forcing the industry to confront a reality in which the next leap in performance cannot be achieved simply by ingesting more information. Scaling by brute force is running out of room.

Within the technical report for NousCoder-14B lies a startling observation with broad implications for the field. The Nous Research team concluded that their curated dataset of 24,000 problems may represent nearly the entire corpus of high-quality, verifiable competitive programming problems publicly available on the internet, where “verifiable” means each problem comes with test cases that can check a submitted solution automatically. If so, this was not just a large dataset but a nearly complete one. In this specialized, high-value domain, researchers are no longer scraping the surface of available data; they are hitting the bottom of the barrel.

This finding serves as an urgent warning from experts on the front lines: in many specialized fields, the industry is rapidly approaching the practical limits of available training data. What is happening in competitive programming today may preview what will occur in other domains, from legal analysis to medical diagnostics, where high-quality, labeled data is scarce and difficult to produce. The era of assuming an endless ocean of data is over. Developers are instead navigating a world of finite and, in some cases, nearly exhausted data reservoirs.

The significance of this “data finitude” cannot be overstated. Compute can be expanded by manufacturing and buying more and better hardware, but high-quality data does not scale the same way. It is generated by human activity, curated by experts, and often bound by real-world constraints. This difference directly challenges the prevailing AI development paradigm, which has largely relied on the premise that larger models fed with larger datasets will reliably yield better results. With one of those key variables hitting a hard ceiling, the entire equation for AI progress must be reconsidered.

Future Outlook: Pioneering Solutions to Data Scarcity

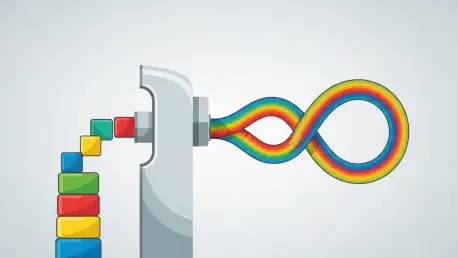

The recognition of a data bottleneck is catalyzing a fundamental shift in AI research. As the strategy of simply scaling up datasets becomes untenable, the focus is pivoting toward ingenuity and efficiency. The new frontier of AI is not about finding more data but about doing more with the data that already exists. This imperative is driving the development of sophisticated new techniques designed to make models learn more like humans—iteratively, intelligently, and efficiently. Beyond efficiency, researchers are pursuing a more radical solution: teaching AI to become a creator of its own knowledge, effectively breaking free from the constraints of human-generated data altogether.

The Next Evolution: From Data Scaling to Data Efficiency

The future of AI progress will likely be defined by a transition away from brute-force data consumption and toward smarter, more efficient learning methodologies. The core objective is to maximize the knowledge extracted from every single data point, a stark departure from the current model of relying on massive statistical correlations across vast datasets. This evolution requires building systems that can reason, experiment, and learn from their mistakes in a more nuanced and interactive manner.

One of the most promising techniques on the horizon is multi-turn reinforcement learning. Current training pipelines often rely on a simple, binary reward signal at the end of a task: the code either passes all test cases or it fails. Multi-turn reinforcement learning would instead let a model learn from intermediate feedback. For example, the AI could learn to treat a compilation error, a “time limit exceeded” verdict, or a failure on a specific public test case as information to guide its next attempt. This iterative refinement more closely mimics how a human programmer debugs code, and it lets the model extract several learning signals from a single problem instead of one pass/fail bit.
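The contrast between the two reward signals can be sketched in Python. This is an illustrative toy, not NousCoder-14B’s training code: the squaring problem, the `Feedback` format, and the `propose` callback (standing in for the model) are all hypothetical, and real judges would also enforce time and memory limits, which are omitted here.

```python
from dataclasses import dataclass
from typing import Callable

Solution = Callable[[int], int]
Tests = list[tuple[int, int]]  # (input, expected output) pairs


@dataclass
class Feedback:
    passed: bool
    message: str  # first failing test or exception, not just pass/fail


def run_tests(solution: Solution, tests: Tests) -> Feedback:
    """Judge a candidate, reporting why it failed when it does."""
    for arg, expected in tests:
        try:
            got = solution(arg)
        except Exception as exc:  # a crash is useful feedback too
            return Feedback(False, f"input {arg}: raised {exc!r}")
        if got != expected:
            return Feedback(False, f"input {arg}: expected {expected}, got {got}")
    return Feedback(True, "all tests passed")


def binary_reward(solution: Solution, tests: Tests) -> float:
    """Single-turn signal: 1.0 only if every test passes, else 0.0."""
    return 1.0 if run_tests(solution, tests).passed else 0.0


def multi_turn_episode(
    propose: Callable[[list[Feedback]], Solution], tests: Tests, max_turns: int = 3
) -> list[Feedback]:
    """Let the solver revise its answer after seeing feedback on earlier tries.

    `propose` is a hypothetical stand-in for the model. The returned per-turn
    feedback is what a trainer could use to assign intermediate credit.
    """
    history: list[Feedback] = []
    for _ in range(max_turns):
        feedback = run_tests(propose(history), tests)
        history.append(feedback)
        if feedback.passed:
            break
    return history


# Toy problem: square an integer. The canned attempts stand in for model outputs.
tests: Tests = [(2, 4), (3, 9)]
attempts: list[Solution] = [lambda x: x * 2, lambda x: x ** 2]


def toy_propose(history: list[Feedback]) -> Solution:
    # Hypothetical solver: returns the next canned attempt on each turn.
    return attempts[min(len(history), len(attempts) - 1)]


print(binary_reward(attempts[0], tests))  # 0.0: the only signal single-turn RL gets
for turn, fb in enumerate(multi_turn_episode(toy_propose, tests), start=1):
    print(turn, fb.message)
```

The first attempt passes one of the two tests, yet the binary reward collapses that into a bare 0.0. The multi-turn episode instead records which input failed and what was produced, the kind of intermediate signal a trainer could reward.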

This shift toward data efficiency has major implications for industries that rely on AI. In fields like autonomous driving, medical imaging, and financial modeling, where high-quality data is inherently limited, expensive to acquire, or bound by privacy regulations, data efficiency is likely to become a decisive competitive advantage. Companies that master efficient learning techniques will be able to build more capable and robust AI systems with fewer resources, outpacing competitors that remain dependent on massive, curated datasets, an increasingly scarce resource.

The Infinite Frontier: Synthetic Data and Self Play

While data efficiency can extend the utility of existing datasets, the most ambitious long-term solution to the data bottleneck is to remove the dependency on human-generated data entirely. The ultimate goal is to train AI models to generate novel, high-quality problems for themselves, creating a virtuous cycle of continuous learning and improvement. In principle, this would turn data from a finite resource to be consumed into an effectively unlimited resource to be created.

This concept is often described as a “self-play” loop, a strategy famously used by DeepMind’s AlphaGo to reach superhuman performance in the game of Go; its successor, AlphaGo Zero, learned entirely through self-play, without any human game records. In the context of coding, one AI model could act as a “problem generator,” creating new and challenging programming tasks, while another acts as a “problem solver.” The solver’s attempts would provide feedback that refines both its own abilities and the generator’s capacity to create meaningful, diverse challenges. In theory, this dynamic could produce a perpetually expanding curriculum of training data, pushing the models’ capabilities beyond what any static, human-curated dataset allows.
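A toy version of the generator/solver loop described above can be sketched in Python. Everything here is a hypothetical simplification, not how AlphaGo or any production system works: the “problems” are sums of random integers, `Generator` and `Solver` are stand-ins for models, and the 0.8/0.2 solve-rate thresholds are arbitrary. What it does show is the feedback structure: the generator knows each reference answer because it built the task, the solver learns from its failures, and the generator adjusts difficulty to the solver’s current success rate.

```python
import random
from dataclasses import dataclass


@dataclass
class Problem:
    numbers: list[int]  # task: return the sum of these numbers
    answer: int  # known exactly, because the generator built the task


class Generator:
    """Hypothetical generator; difficulty = how many numbers must be added."""

    def __init__(self, rng: random.Random) -> None:
        self.rng = rng
        self.difficulty = 2

    def make(self) -> Problem:
        nums = [self.rng.randint(1, 99) for _ in range(self.difficulty)]
        return Problem(nums, sum(nums))

    def update(self, solve_rate: float) -> None:
        # Aim for the edge of the solver's ability: harder when it is
        # comfortable, easier when it is failing almost everything.
        if solve_rate > 0.8:
            self.difficulty += 1
        elif solve_rate < 0.2 and self.difficulty > 1:
            self.difficulty -= 1


class Solver:
    """Hypothetical solver that can reliably add up to `skill` numbers."""

    def __init__(self) -> None:
        self.skill = 2

    def attempt(self, p: Problem) -> int:
        # Past its skill level it only sums a prefix, so the answer is wrong.
        return sum(p.numbers[: self.skill])

    def learn(self, p: Problem, correct: bool) -> None:
        # Toy "training step": failing a task one step beyond its skill,
        # with the reference answer available, teaches it that step.
        if not correct and len(p.numbers) == self.skill + 1:
            self.skill += 1


def self_play(rounds: int, batch: int = 10, seed: int = 0) -> tuple[int, int]:
    rng = random.Random(seed)
    gen, solver = Generator(rng), Solver()
    for _ in range(rounds):
        problems = [gen.make() for _ in range(batch)]
        results = [solver.attempt(p) == p.answer for p in problems]
        for p, ok in zip(problems, results):
            solver.learn(p, ok)  # solver improves from feedback
        gen.update(sum(results) / batch)  # generator adapts to the solver
    return gen.difficulty, solver.skill


print(self_play(rounds=9))  # (5, 5)
```

Over nine rounds the curriculum ratchets upward: each time the solver masters a level, the generator raises the bar, and a failure followed by learning lets both move on. Real systems would replace every piece with a learned model and need far stronger checks on task quality and diversity.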

However, this approach faces significant challenges. The primary hurdle is ensuring the quality and diversity of the synthetically generated data. A poorly designed self-play system could fall into a feedback loop in which the AI generates trivial or repetitive problems, causing its capabilities to stagnate or even degrade. A related failure mode, known as model collapse, occurs when models trained repeatedly on their own outputs progressively lose diversity and accuracy. Developing robust methods to guide the generation process, so that synthetic data remains novel, challenging, and grounded in reality, is one of the most important open research questions in the field today.
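One way to guard against the degenerate loop described above is to filter generated problems before training on them. The Python sketch below is an assumption-laden illustration, not a method the article attributes to Nous Research: it presumes each candidate’s `solve_rate` (fraction of solver attempts that passed) and `verified` flag have already been measured, and it catches only exact duplicates after normalizing case and whitespace, whereas practical pipelines typically also use near-duplicate detection such as MinHash. The 0.1 to 0.9 band is arbitrary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    statement: str
    solve_rate: float  # fraction of solver attempts that passed (measured upstream)
    verified: bool  # a reference solution passed the generated tests (assumed check)


def normalize(text: str) -> str:
    """Crude canonical form for exact-duplicate detection."""
    return " ".join(text.lower().split())


def filter_synthetic(
    candidates: list[Candidate], low: float = 0.1, high: float = 0.9
) -> list[Candidate]:
    """Keep verified, non-duplicate problems that are neither trivial nor hopeless."""
    seen: set[str] = set()
    kept: list[Candidate] = []
    for c in candidates:
        key = normalize(c.statement)
        if key in seen:
            continue  # repetitive problems add no new signal
        seen.add(key)
        if not c.verified:
            continue  # cannot be checked, so it cannot be rewarded reliably
        if not low <= c.solve_rate <= high:
            continue  # always solved = trivial; never solved = too hard or broken
        kept.append(c)
    return kept


batch = [
    Candidate("Sum two integers.", 1.0, True),
    Candidate("Find the shortest path in a weighted graph.", 0.4, True),
    Candidate("find the  shortest path in a weighted  graph.", 0.5, True),
    Candidate("Count inversions in an array.", 0.3, False),
    Candidate("Tile the grid under these constraints.", 0.0, True),
]
print([c.statement for c in filter_synthetic(batch)])
```

Only the shortest-path problem survives: the first is trivial, the third is a reworded duplicate, the fourth cannot be verified, and the last was never solved. Filtering alone does not guarantee that synthetic data stays grounded in reality, but it removes some of the most obvious sources of degeneration.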

A Paradigm Shift from Consumption to Creation

The journey of models like NousCoder-14B offers a powerful demonstration of modern AI’s unprecedented speed, but their immense data requirements also reveal a critical bottleneck that threatens the future pace of innovation. The reliance on vast and, as it turns out, finite datasets exposes a fundamental vulnerability in the prevailing development strategy, signaling that the path of ever-increasing scale is approaching a natural limit.

The central argument that emerges from this trend is that the era of brute-force data scaling is drawing to a close. Continued progress in artificial intelligence depends not on acquiring more data but on solving the challenge of data scarcity. The industry’s focus has to pivot from mere consumption to intelligent use and, ultimately, to creation.

The next wave of major AI breakthroughs is therefore likely to come not from the organizations that can amass the largest datasets, but from those that pioneer methods for data-efficient learning and synthetic data generation. The crucial innovation may lie not just in teaching machines how to code or reason, but in developing techniques that allow machines to effectively teach themselves, marking a shift from an age of data consumption to an age of automated knowledge creation.