Beneath the surface of the generative AI revolution lies a staggering inefficiency: a silent tax, paid in wasted GPU cycles, on tasks far below a model’s pay grade. As enterprises move from experimental enthusiasm to the pragmatic realities of large-scale deployment, this hidden cost is coming into focus. The conversation is shifting from sheer model size to architectural intelligence, with cost-performance becoming the dominant measure of success. This analysis examines an emerging approach called conditional memory, which aims to eliminate much of this waste. Using DeepSeek’s research as a central case study, it explores the mechanics, impact, and longer-term implications of building models that are not just bigger but fundamentally smarter.

Analyzing the Shift Toward Architectural Efficiency

The Data Story: Quantifying Inefficiency in Transformer Models

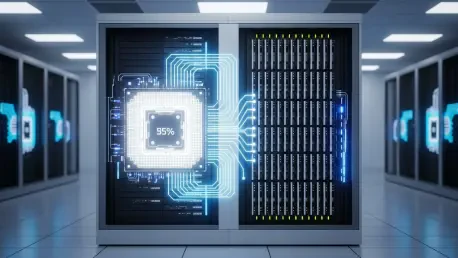

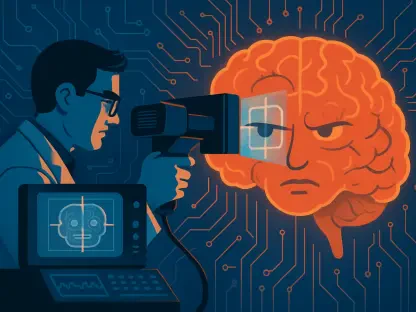

The core issue driving this trend can be summed up as “silent LLM waste.” Large language models spend a disproportionate share of their computational resources simulating simple, static knowledge lookups. Every time a model recalls a common phrase, a name, or a piece of boilerplate text, it routes that recall through the same layers it uses for reasoning, reconstructing the information from its weights. This happens because the standard Transformer architecture lacks a “native knowledge lookup primitive”: a direct, efficient way to retrieve stored facts.

This architectural limitation is akin to using a supercomputer for basic arithmetic or asking a brilliant mathematician to memorize a phone book. The tool is mismatched to the job, and the result is deep inefficiency. For enterprises scaling their AI operations, this waste is no longer trivial. The cumulative cost of large GPU fleets spending cycles on rote recall instead of complex problem-solving is a significant and growing drain on infrastructure budgets, and it calls for a more intelligent approach to model design.

A Real-World Solution: DeepSeek’s Engram Module

A concrete example of this trend in action is DeepSeek’s “Engram” module, an implementation of conditional memory designed to separate knowledge retrieval from reasoning. The system hashes short sequences of input tokens (N-grams) to index a large, dedicated memory table. Each lookup is a constant-time, O(1) operation regardless of the table’s size, which is far cheaper than the multi-layer computational simulation it replaces.
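To make the lookup concrete, here is a minimal sketch of a hashed N-gram memory, assuming a fixed-size table of vectors indexed by a hash of the last few token IDs. The class name `NGramMemory`, the table size, the N-gram length, and the use of `hashlib.blake2b` as the hash are illustrative assumptions, not details of DeepSeek’s implementation. The point it shows is that the cost of a lookup does not grow with the size of the table.

```python
from __future__ import annotations

import hashlib
import random


class NGramMemory:
    """Hypothetical hashed N-gram memory: maps the last n token IDs to one
    row of a fixed-size table in O(1), independent of how large the table is.
    Distinct N-grams can collide on the same slot; this sketch does not
    mitigate that (real systems might, e.g., use several hash functions)."""

    def __init__(self, num_slots: int, dim: int, n: int = 2, seed: int = 0):
        self.num_slots = num_slots
        self.n = n
        rng = random.Random(seed)
        # Stand-in for learned embeddings: one random vector per slot.
        self.table = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_slots)]

    def slot(self, ngram: tuple[int, ...]) -> int:
        # Deterministic hash of the token IDs, reduced modulo the table size.
        key = ",".join(map(str, ngram)).encode()
        digest = hashlib.blake2b(key, digest_size=8).digest()
        return int.from_bytes(digest, "little") % self.num_slots

    def lookup(self, tokens: list[int], position: int) -> list[float] | None:
        # Use the n tokens ending at `position`; return None if there are too few.
        if position + 1 < self.n:
            return None
        ngram = tuple(tokens[position + 1 - self.n : position + 1])
        return self.table[self.slot(ngram)]


if __name__ == "__main__":
    memory = NGramMemory(num_slots=1024, dim=4, n=2)
    tokens = [17, 42, 99, 42, 99]
    # Positions 2 and 4 both end with the bigram (42, 99), so they share a slot.
    print(memory.lookup(tokens, 2) is memory.lookup(tokens, 4))
```

Because the slot depends only on the tokens themselves, a raw hit says nothing about whether the stored vector fits the current context; that is the gap the gating mechanism described next is meant to close.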

However, a plain lookup is prone to ambiguity and errors: the same token sequence can mean different things in different contexts, and distinct sequences can collide in a hash table. Engram’s innovation lies in its “gating mechanism.” The gate uses the contextual representation built by the preceding Transformer layers to validate the retrieved memory. If the information is relevant (for instance, data about “Apple” the company in a business context), the gate integrates it. If it is irrelevant, the gate suppresses it. The results of this hybrid approach are telling: knowledge-focused benchmarks improved from 57% to 61%, and complex reasoning scores rose by the same four points, from 70% to 74%. That reasoning improved as much as knowledge recall suggests that offloading memory tasks does more than speed up lookups; it frees the model’s core computational pathways to do what they do best, which is reason.
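The gating idea can be sketched as a scalar gate computed from how well the retrieved memory agrees with the hidden state produced by earlier layers. The sketch below assumes a sigmoid over a scaled dot product between the hidden state and a memory key, followed by a residual add; the function name `gated_memory_update` and this exact formulation are illustrative assumptions, not DeepSeek’s published equations.

```python
import math


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def gated_memory_update(hidden, mem_key, mem_value):
    """Hypothetical gate: blend a retrieved memory into the hidden state
    only to the extent that its key agrees with the current context."""
    d = len(hidden)
    # Scaled dot-product similarity between the context and the memory key.
    score = sum(h * k for h, k in zip(hidden, mem_key)) / math.sqrt(d)
    # Gate in (0, 1): near 1 for agreeing memories, near 0 for conflicting ones.
    gate = sigmoid(score)
    # Residual update: the memory value is scaled by the gate before being added.
    updated = [h + gate * v for h, v in zip(hidden, mem_value)]
    return updated, gate


if __name__ == "__main__":
    context = [2.0, 0.0, 2.0, 0.0]          # e.g. a "business" context
    value = [1.0, 1.0, 1.0, 1.0]
    _, g_relevant = gated_memory_update(context, [2.0, 0.0, 2.0, 0.0], value)
    _, g_irrelevant = gated_memory_update(context, [-2.0, 0.0, -2.0, 0.0], value)
    print(f"relevant gate: {g_relevant:.3f}, irrelevant gate: {g_irrelevant:.3f}")
```

A soft gate like this keeps the whole path differentiable, so during training the model can learn when to trust a lookup rather than relying on a hard accept/reject rule.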

Expert Insights: Differentiating Internal and External Memory

Some practitioners view this development as a fundamental redesign of the model itself. As Chris Latimer, CEO of Vectorize, notes, conditional memory is a method for “squeezing performance out of smaller models” by re-architecting the internal forward pass. It is not an add-on but an intrinsic optimization that changes how a model processes information from the ground up.

This internal focus clearly distinguishes conditional memory from other popular approaches like Retrieval-Augmented Generation (RAG) or agentic memory systems. RAG, for instance, connects a model to external, dynamic knowledge bases to provide up-to-date information. In contrast, conditional memory is an internal architectural fix. It optimizes how the model handles the static linguistic and factual patterns already encoded within its own parameters, making the entire system more efficient at leveraging its existing knowledge.

Future Outlook: The Rise of Hybrid AI Architectures

DeepSeek’s research uncovered what may become a foundational principle for next-generation model design: the “75/25 allocation law.” Their experiments indicated that, when a sparse model’s capacity is split between the two, performance peaks with roughly 75% devoted to dynamic computation and 25% to static memory. This finding suggests that future state-of-the-art models will likely be hybrid systems: purely computational architectures waste capacity on rote recall, while overly memory-heavy models sacrifice reasoning power.
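As a worked illustration of the ratio, the sketch below splits a hypothetical sparse-parameter budget between computation (for example, expert weights in a mixture-of-experts layer) and static memory. The 40-billion-parameter budget and the function name `split_sparse_budget` are invented for this example; only the approximate 75/25 ratio comes from the research described above.

```python
from __future__ import annotations


def split_sparse_budget(total_params: int, compute_fraction: float = 0.75) -> tuple[int, int]:
    """Split a sparse-parameter budget into (compute, memory) parts.
    The 0.75 default mirrors the approximate 75/25 ratio; treat it as a
    starting point for tuning, not an exact constant."""
    if not 0.0 <= compute_fraction <= 1.0:
        raise ValueError("compute_fraction must be between 0 and 1")
    compute = round(total_params * compute_fraction)
    memory = total_params - compute  # remainder goes to memory, so parts sum exactly
    return compute, memory


if __name__ == "__main__":
    compute, memory = split_sparse_budget(40_000_000_000)
    print(f"compute: {compute / 1e9:.0f}B, memory: {memory / 1e9:.0f}B")
    # prints "compute: 30B, memory: 10B"
```

Computing the memory share as the remainder, rather than multiplying by 0.25 separately, guarantees the two parts always add back up to the original budget.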

This trend has significant implications for enterprise AI infrastructure. The Engram module’s design enables a “prefetch-and-overlap” strategy, in which its large memory tables are offloaded to cheaper, more abundant host CPU DRAM. Because the lookup indices depend only on the input tokens, the system knows which rows it will need before the memory layer runs, so it can prefetch them while the GPU is busy with earlier computations, effectively hiding the transfer latency. This allows models with hundreds of billions of memory parameters to run with negligible performance loss, decoupling expensive GPU memory from massive knowledge storage.
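The overlap can be simulated in a few lines. In the sketch below, a background thread stands in for the host-to-GPU transfer and `time.sleep` stands in for both transfer latency and accelerator work. `host_fetch`, `early_layers`, `memory_layer`, and the 50 ms delays are hypothetical stand-ins for illustration, not part of any real runtime. The key move is that the fetch is issued before the earlier layers run, which is only possible because the indices are computed from the tokens alone.

```python
import time
from concurrent.futures import ThreadPoolExecutor

TABLE_SIZE = 1000
HOST_TABLE = [float(i) for i in range(TABLE_SIZE)]  # stand-in for a DRAM-resident memory table


def memory_indices(tokens):
    # Depends only on the input tokens, so it can be computed before any layer runs.
    return [t % TABLE_SIZE for t in tokens]


def host_fetch(indices):
    time.sleep(0.05)  # simulated host-to-device transfer latency
    return [HOST_TABLE[i] for i in indices]


def early_layers(tokens):
    time.sleep(0.05)  # simulated accelerator work in the layers before the memory layer
    return [float(t) for t in tokens]


def memory_layer(hidden, rows):
    return [h + r for h, r in zip(hidden, rows)]


def forward_sequential(tokens):
    hidden = early_layers(tokens)
    rows = host_fetch(memory_indices(tokens))  # fetch starts after compute: latency exposed
    return memory_layer(hidden, rows)


def forward_prefetch(tokens, pool):
    future = pool.submit(host_fetch, memory_indices(tokens))  # issue the fetch first
    hidden = early_layers(tokens)  # compute overlaps with the in-flight fetch
    rows = future.result()  # often already complete, so the wait is short
    return memory_layer(hidden, rows)


if __name__ == "__main__":
    tokens = [3, 1004, 7]
    with ThreadPoolExecutor(max_workers=1) as pool:
        for name, run in [("sequential", lambda: forward_sequential(tokens)),
                          ("prefetch", lambda: forward_prefetch(tokens, pool))]:
            start = time.perf_counter()
            out = run()
            print(f"{name}: {out} in {time.perf_counter() - start:.3f}s")
```

Both paths produce the same output; the prefetching version simply takes roughly the longer of the two delays instead of their sum.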

Consequently, a major shift in infrastructure spending may be on the horizon. Organizations could increasingly favor memory-rich server configurations over simply adding more high-end GPUs, optimizing for performance-per-dollar. The broader industry focus is tilting away from a brute-force race for size and toward a more nuanced pursuit of “smarter, not just bigger” models. Architectures that intelligently balance computation and memory are poised to define the next era of AI, delivering superior capabilities at a more sustainable cost.

Conclusion: Embracing a New Paradigm of Efficiency

The analysis of computational waste in LLMs reveals a critical bottleneck to scalable deployment. Conditional memory, exemplified by systems like Engram, offers an architectural solution that, in DeepSeek’s experiments, improved both reasoning performance and cost-efficiency. Its central lesson is that the main value of offloading memorization lies not merely in faster lookups but in freeing computational resources for more complex tasks.

This shift reinforces the argument that the next frontier of AI advancement will be defined by intelligent design rather than sheer scale. Principles like the 75/25 allocation law and infrastructure-aware strategies like prefetching from CPU DRAM sketch a new blueprint for model development. For developers and enterprises, the path forward is becoming clearer: to unlock sustainable, powerful, and cost-effective AI, they need to look beyond parameter counts and embrace the architectural innovation that makes models smarter from the inside out.