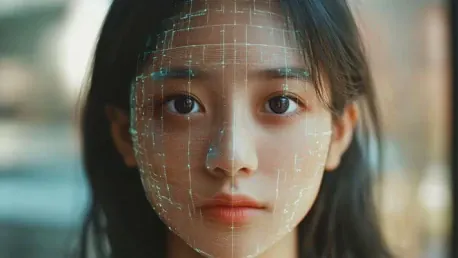

The rise of deepfakes, AI-generated media that distorts reality, marks a turning point for digital security. Like the “white walkers” in HBO’s “Game of Thrones,” the threat deepfakes pose is pressing and pervasive. Increasingly sophisticated and often nearly undetectable, deepfakes can create entirely fictitious personas or alter existing media to mislead and manipulate.

The Growing Threat of Deepfakes

Alarming Statistics and Real-World Impact

Deepfakes have quickly become a formidable threat to organizations and governments alike, alarming cybersecurity experts. Ajay Amlani, president of biometric authentication company iProov, notes that nearly half of all organizations have already encountered deepfakes. Market surveys show that 70% of respondents believe generative AI-created deepfakes will significantly affect their operations. Yet only a small fraction of companies are proactively addressing the threat, which underscores the need for action.

In one case, a finance worker at a multinational company was deceived into transferring $25 million after joining a video call whose other participants were deepfake recreations of colleagues. In another, a North Korean hacker used deepfake technology to secure employment at a cybersecurity firm. These cases show the substantial financial and operational damage deepfakes can inflict, and as the technology spreads, the need for robust defenses grows more urgent.

Technological Advancements Facilitating Deepfakes

Rapid advances in processing speed, bandwidth, and generative AI have made deepfakes more sophisticated and harder to detect. These advances have also lowered the barrier to entry, letting malicious actors create and distribute convincing deceptive content with little effort. Amlani points out that improvements in hardware, software, and information sharing have together accelerated both the production and the spread of these forgeries.

Regional differences are also notable. Organizations in Asia Pacific, Europe, and Latin America report encountering deepfakes more often than those in North America. This is attributed to local targeting by malicious actors, who often exploit regional vulnerabilities before expanding their reach globally. These disparities suggest that defenses should be tailored to the specific challenges each region faces.

Deepfakes Among Top Cybersecurity Threats

A Comparative Analysis of Cyber Threats

Deepfakes now rank alongside other major cybersecurity threats. In recent surveys, password breaches top the list, cited as a primary concern by 64% of organizations, followed by ransomware at 63% and phishing and social engineering attacks at 61%. That deepfakes appear on this list underscores the gravity of the threat. Unlike attacks that exploit software vulnerabilities, deepfakes, much like social engineering, target human perception, making it increasingly difficult to take any digital content at face value.

Because genuine digital personas and content are so hard to distinguish from sophisticated fakes, organizations need stronger defenses and must continually reassess their strategies for safeguarding digital integrity. As deepfakes grow more common and more convincing, robust verification techniques and proactive defenses become essential.

The Urgency for Enhanced Defenses

The challenges posed by deepfakes underline the need for better defenses. Traditional solutions, such as embedded software designed to flag AI-altered content, often fail to detect these advanced deceptions. CAPTCHAs, meant to distinguish human users from automated bots, are also becoming less effective, and their growing complexity creates usability problems, especially for people with cognitive or physical impairments, making them impractical for widespread use.

Amlani stresses that overcoming the deepfake threat requires more than incremental improvements to existing security measures. Because digital personas can no longer be trusted at face value, organizations need authentication methods that are both more sophisticated and easier to use, capable of verifying the authenticity of digital identities and content and protecting individuals and organizations as the threat evolves.

Leveraging Biometrics for Defense

Popular Biometric Solutions

In the battle against deepfakes, facial biometrics have emerged as a primary line of defense, with three-quarters of organizations adopting the technology. Multifactor authentication and device-based biometric tools are also gaining ground: 67% of respondents in iProov’s survey have implemented them. Together, these measures form a layered security approach that makes it harder for attackers to break through.
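As a rough illustration of the layered approach, the Python sketch below encodes a common multifactor rule: access requires passing factors from at least two distinct categories (something you know, have, or are). The names `FactorCategory`, `FactorResult`, and `layered_auth`, and the extra rule that a biometric match only counts if a liveness check also passed, are hypothetical choices for this example, not iProov’s or any vendor’s API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class FactorCategory(Enum):
    KNOWLEDGE = "something you know"   # e.g. password or PIN
    POSSESSION = "something you have"  # e.g. enrolled phone or security key
    INHERENCE = "something you are"    # e.g. face or fingerprint match


@dataclass(frozen=True)
class FactorResult:
    category: FactorCategory
    passed: bool
    liveness_checked: bool = False  # only meaningful for INHERENCE factors (hypothetical rule)


def layered_auth(results: List[FactorResult], min_categories: int = 2) -> bool:
    """Grant access only if no presented factor failed and at least
    `min_categories` distinct factor categories passed."""
    if any(not r.passed for r in results):
        return False  # a single failed factor denies the attempt
    categories = set()
    for r in results:
        if r.category is FactorCategory.INHERENCE and not r.liveness_checked:
            # A face match without a liveness check could come from a photo or
            # deepfake, so in this sketch it does not count toward the total.
            continue
        categories.add(r.category)
    return len(categories) >= min_categories
```

Counting distinct categories rather than raw factors means two passwords still add up to a single layer, which is what makes the defense layered in the first place.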

Apart from technical solutions, organizations are also focusing on educating their employees about the risks associated with deepfakes. Training programs and awareness campaigns are being rolled out to ensure that staff are vigilant and capable of recognizing potential threats. Regular security audits and system updates are further steps being taken to counter deepfake risks. By combining advanced technological tools with ongoing education and system evaluations, organizations are building a more resilient defense against this sophisticated menace.

Evaluating Biometric Methods

The effectiveness of biometric tools against deepfakes varies significantly. Fingerprint recognition is considered the most reliable, with an efficacy rating of 81%, followed by iris recognition at 68% and facial recognition at 67%. Basic behavioral analysis and voice recognition rate lower, at 50% and 48%, respectively. Biometric tools are promising, but how well they work depends on the specific technology used.

Amlani criticizes some biometric methods as cumbersome and easily bypassed by advanced threat actors. He argues for solutions that are both sophisticated and user-friendly: hard for attackers to exploit, yet seamless for legitimate users. Striking that balance is crucial if biometric defenses are to be widely adopted and effective against deepfakes.

Innovative Biometric Technology

iProov’s Unique Approach

iProov’s proprietary biometric technology works by shining a randomized sequence of colors from the device screen onto the user’s face. The system then analyzes how features such as skin texture, lips, eyes, nose, pores, sweat glands, and follicles reflect that light, distinguishing genuine human characteristics from forgeries. Static images, masks, and other spoofs fail to reproduce the way real skin interacts with light, and because the color sequence is randomized, a pre-recorded or pre-generated video is unlikely to show the matching reflections.
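To make the challenge-response idea concrete, here is a minimal Python sketch. It is not iProov’s algorithm, which is proprietary; it only illustrates the general principle under a deliberately simplified assumption: that the average color reflected from a live face rises and falls with the color flashed by the screen. The function names (`random_challenge`, `reflections_match`, `simulate_live_face`), the linear skin model, and the 0.8 correlation threshold are all hypothetical.

```python
import random
from math import sqrt
from typing import List, Tuple

# An RGB color with each channel in [0, 1].
RGB = Tuple[float, float, float]


def random_challenge(n_frames: int, rng: random.Random) -> List[RGB]:
    """Draw a fresh, unpredictable screen color for every frame of one session."""
    return [(rng.random(), rng.random(), rng.random()) for _ in range(n_frames)]


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation; returns 0.0 if either series is constant."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / sqrt(vx * vy)


def reflections_match(challenge: List[RGB], observed: List[RGB],
                      threshold: float = 0.8) -> bool:
    """Accept only if every color channel of the face tracks the emitted sequence."""
    if len(challenge) != len(observed):
        return False
    for ch in range(3):
        emitted = [c[ch] for c in challenge]
        seen = [o[ch] for o in observed]
        if pearson(emitted, seen) < threshold:
            return False
    return True


def simulate_live_face(challenge: List[RGB], rng: random.Random,
                       albedo: RGB = (0.6, 0.45, 0.4), ambient: float = 0.2,
                       noise: float = 0.02) -> List[RGB]:
    """Hypothetical toy skin model: ambient light + albedo * screen color + sensor noise."""
    return [
        tuple(ambient + a * c + rng.gauss(0.0, noise) for a, c in zip(albedo, color))
        for color in challenge
    ]


rng = random.Random(7)
session = random_challenge(16, rng)
live = simulate_live_face(session, rng)
replay = simulate_live_face(random_challenge(16, rng), rng)  # captured in an earlier session
photo = [(0.5, 0.4, 0.35)] * 16                               # a static image does not react
print(reflections_match(session, live), reflections_match(session, replay),
      reflections_match(session, photo))
```

Drawing a new sequence for every session is the key design choice: a replayed capture correlates with the colors of the session it came from, not the current one, and a static photo shows no variation at all. A real system analyzes far richer signals than three average color channels.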

The technology achieves a pass rate above 98%, which has led to its deployment across commercial and government sectors where reliable authentication is paramount. Its ease of use and accuracy make it a valuable tool against deepfakes, offering a robust way to verify the authenticity of digital personas.
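The 98% figure is described as a pass rate. In biometric verification, a pass rate generally means the share of genuine users who are successfully verified, which is a different quantity from the share of attacks that are stopped. ISO/IEC 30107-3, the standard for testing presentation attack detection, reports attack errors as APCER (attack presentation classification error rate) and genuine-user rejections as BPCER (bona fide presentation classification error rate). Treating the pass rate as roughly 1 − BPCER is a simplifying assumption for this worked example:

```latex
\begin{aligned}
\text{pass rate} &= \frac{\text{genuine attempts accepted}}{\text{genuine attempts}} \approx 1 - \mathrm{BPCER} \\
\text{genuine rejections per 1000} &= 1000 \times (1 - 0.98) = 20 \\
\mathrm{APCER} &= \frac{\text{attack attempts accepted}}{\text{attack attempts}}
\end{aligned}
```

A high pass rate therefore speaks mainly to usability; how well a system resists deepfakes is captured by APCER, which the pass rate alone does not reveal.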

The Need for International Cooperation

Deepfakes do not respect borders. As the regional patterns described earlier show, attackers often target organizations locally before expanding their reach globally, so no single company or country can contain the threat alone.

Deepfakes use powerful machine learning algorithms to blend and alter visual and audio content so seamlessly that even trained experts struggle to tell what is real and what is fake. By undermining trust in digital content, this form of manipulation demands immediate attention.

The implications are wide-ranging. In the political arena, deepfakes could be weaponized to spread disinformation, disrupt elections, or damage reputations. Similarly, in everyday social interactions, they can be used for blackmail, harassment, or creating fake news. For businesses, deepfakes pose a risk to brand reputation and can lead to significant financial loss through fraud.

Addressing this multifaceted threat requires a combination of advanced detection technologies, comprehensive legislation, and public awareness. Only by understanding the full scope of the challenge can we hope to develop effective strategies to combat the rise of deepfakes.