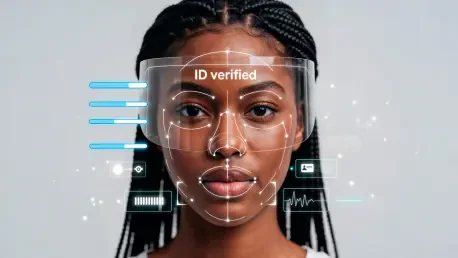

Facial recognition technology has been heralded as a game-changer for security and identification. In controlled testing environments it promises near-perfect accuracy, yet it often stumbles when deployed in the unpredictable conditions of the real world. High-profile benchmark tests, such as those run by leading research institutions, frequently report accuracy rates above 99%, painting an optimistic picture of reliability. Those numbers can mislead once systems face the messy realities of public spaces: from dimly lit streets to crowded city centers, the conditions in which the technology must operate are far from the sterile settings of a lab. This gap between benchmark and field performance raises critical questions about how dependable facial recognition is in practice, especially in high-stakes areas like law enforcement. As adoption grows, understanding why these systems falter outside controlled environments is essential for addressing their limitations and ensuring fair, effective use across diverse contexts.

Unmasking the Gap Between Lab and Reality

The primary challenge with facial recognition lies in the stark contrast between lab-based evaluations and real-world performance. Benchmark tests often rely on high-quality, front-facing images captured under ideal conditions, which do not replicate the complexity of everyday environments. Poor lighting, extreme weather, faces partially covered by masks, and faces captured at oblique angles all significantly degrade accuracy. Academic research has pointed out that these controlled datasets create an inflated sense of reliability because they exclude the very variables that dominate public spaces. A system might, for instance, readily identify a clear, well-lit face in a test yet struggle to do the same in a bustling city square at dusk. As a result, the impressive statistics touted by developers often bear little resemblance to the technology’s effectiveness in actual field conditions. Until testing protocols evolve to mirror these challenges, the true capabilities of facial recognition will remain obscured, leaving policymakers and users with a false sense of security.

Ethical Risks and the Push for Better Testing

Beyond its technical limitations, facial recognition’s real-world failures carry profound ethical implications, particularly misidentification and bias. Documented cases in major cities have revealed individuals wrongfully detained or arrested because of system errors, with a disproportionate impact on demographic groups such as people of color and women. These errors are often traced to datasets that lack diversity: systems built on data that underrepresents certain populations tend to produce higher false positive rates for them, and evaluation sets with the same gaps fail to reveal the problem before deployment. Such incidents erode public trust and highlight the urgent need for testing that reflects real-world diversity and conditions. Despite these concerns, adoption continues to expand, with plans for broader deployment in public safety roles. Researchers advocate independent, field-based assessments to confirm that systems are reliable before they are integrated into critical decision-making. Closing these gaps through realistic evaluations and stricter oversight is vital to mitigating harm and to building a framework in which facial recognition can be both effective and equitable.
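One way to make "higher false positive rates for underrepresented populations" concrete is to report the error rate separately for each group instead of as a single aggregate figure, which can hide a large gap between groups. The Python sketch below is a minimal illustration under stated assumptions: it assumes a hypothetical log of face comparisons, each tagged with a group label, the ground truth (same person or not), and a similarity score, plus a single fixed match threshold. `Comparison` and `false_positive_rate_by_group` are illustrative names, not part of any real benchmark or vendor tool.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Comparison(NamedTuple):
    """One face comparison from a HYPOTHETICAL evaluation log (not a real benchmark schema)."""
    group: str          # demographic label attached to the probe image
    same_person: bool   # ground truth: do the two images show the same person?
    score: float        # similarity score produced by the system


def false_positive_rate_by_group(
    comparisons: Iterable[Comparison], threshold: float
) -> dict[str, float]:
    """Per group, the share of different-person comparisons scored at or above the threshold."""
    impostor_pairs = defaultdict(int)  # different-person comparisons seen per group
    false_matches = defaultdict(int)   # of those, how many the system wrongly declared a match
    for c in comparisons:
        if c.same_person:
            continue  # same-person pairs cannot produce a false positive
        impostor_pairs[c.group] += 1
        if c.score >= threshold:
            false_matches[c.group] += 1
    # Groups with no different-person comparisons are omitted rather than divided by zero.
    return {g: false_matches[g] / n for g, n in impostor_pairs.items()}
```

Reporting the rates side by side, rather than averaged together, is what lets an evaluation show that a system tuned to a single threshold may wrongly match people in one group far more often than in another.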