A fundamental transformation is reshaping open-source Artificial Intelligence worldwide, driven not by a grand strategic design but by the practical needs of developers. While prominent Western AI laboratories are increasingly pulling back from open releases, their Chinese counterparts are stepping in to fill the void and are rapidly becoming the default providers for a new, unmanaged global AI infrastructure. This shift alters the distribution of technological power and introduces governance and security challenges at a scale not seen before. New security research substantiates the trend by mapping the vast, decentralized network now taking shape.

A Tale of Two Strategies

The Western Pivot to Secrecy

A palpable sense of caution has descended upon the leading AI laboratories in the West, as giants like OpenAI, Anthropic, and Google are fundamentally rethinking their relationship with the open-source community. This strategic retreat is fueled by a confluence of mounting regulatory pressures and powerful commercial incentives. Governments are increasingly scrutinizing the proliferation of highly capable AI models, demanding greater control to mitigate the risks of misuse, from disinformation campaigns to the development of novel cyberweapons. Concurrently, the immense cost of conducting thorough safety reviews and the lucrative potential of proprietary, API-gated services create a strong business case for keeping the core components of their most advanced models—the “weights”—under lock and key. This walled-garden approach allows these companies to maintain strict control over their technology, monitor its usage, and directly monetize access, but it effectively closes the door on a global community of developers and researchers who rely on open access to innovate and build.

This move toward controlled access, while understandable from a risk management and commercial perspective, creates a significant vacuum in the open-source ecosystem. Developers who wish to fine-tune models for specific tasks, deploy them on private infrastructure for security or cost reasons, or simply experiment at the cutting edge are finding their options from Western sources increasingly limited. The API-first model prioritizes centralized control, turning AI into a utility that is consumed rather than a tool that can be freely adapted. As a result, the very definition of “open” is being contested, with Western labs favoring a more restricted interpretation that stands in stark contrast to the emerging approach from the East. This divergence is not merely a philosophical debate; it has tangible consequences for the direction of global AI development, steering a significant portion of the world’s innovation toward alternative, more accessible sources of cutting-edge technology, thereby setting the stage for a new phase in the geopolitical AI competition.

China’s Open-Source Offensive

While Western labs build higher walls around their AI creations, a different strategy is unfolding in China. Spearheaded by technology behemoths such as Alibaba, Chinese developers are aggressively embracing a pragmatic open-source approach that directly addresses the needs of the global developer community. They are releasing a steady stream of powerful, high-quality large language models, with Alibaba’s Qwen2 family serving as a prime example of this offensive. These models are not just research artifacts; they are engineered for practical, real-world application. A key aspect of this strategy is the explicit optimization for local deployment, empowering users to run sophisticated AI on their own hardware rather than being tethered to a cloud provider’s API. This focus on accessibility is a game-changer for countless organizations and individuals who require greater control over their data, latency, and costs.

Furthermore, Chinese developers are keenly focused on lowering the technical barriers to entry. They achieve this through methods like quantization, which stores a model's weights at lower numerical precision (for example, 8-bit or 4-bit integers in place of 16-bit floating-point values) and so reduces the model's size and computational requirements without a catastrophic loss in performance. This allows powerful models to run effectively on commodity hardware, moving advanced AI out of the exclusive domain of large, well-funded data centers and onto standard computers accessible to a much broader audience. This user-centric approach has proven highly successful. For a rapidly growing number of developers around the world, Chinese models are no longer just an alternative; they are becoming the most practical, and sometimes the only, viable option for running powerful AI locally. This strategy is broadening access to advanced AI capabilities and, in doing so, is rapidly establishing Chinese technology as a central pillar of the global open-source ecosystem.
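As a rough illustration of the quantization idea described above, the following Python sketch maps a handful of floating-point weights onto 8-bit integers and back. It is a toy, symmetric per-tensor scheme chosen for brevity. The function names and sample weights are assumptions made for illustration, and production toolchains use more elaborate methods.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats onto integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale factor for the whole list (hypothetical "per-tensor" choice).
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in quantized]


# Made-up sample weights, for illustration only.
weights = [0.42, -1.27, 0.05, 0.9, -0.33]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Largest difference between an original weight and its round-tripped value.
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("max round-trip error:", max_error)
```

Each weight in this sketch would occupy one byte rather than the four bytes of a 32-bit float, and the round-trip error stays within half of one quantization step.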

The Unmanaged Global AI Network

Data-Driven Reality

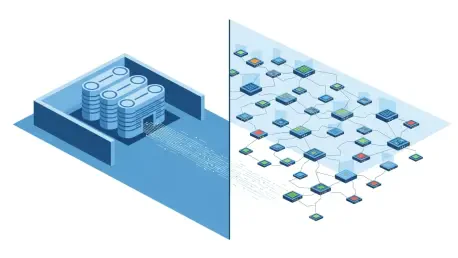

The rise of Chinese models is not a matter of speculation but a quantifiable reality, as evidenced by a security study from SentinelOne and Censys. Over a period of 293 days, researchers mapped the global landscape of exposed AI systems, identifying a sprawling and largely unsecured network of 175,000 hosts operating across 130 countries. This research provides the first concrete data set illustrating the scale and composition of a new, decentralized AI compute substrate that exists almost entirely outside the walled gardens of major commercial platforms. It reveals a world where the deployment of advanced AI is no longer confined to the secure data centers of a few tech giants but is distributed globally across a vast, heterogeneous, and often anonymous infrastructure. The study highlights the tangible consequence of the West's strategic retreat: a clear demand for powerful, locally deployable models is being met by alternative suppliers.

This unmanaged network is heavily concentrated in the United States and China, the two epicenters of AI development. In the U.S., Virginia, home to many of the massive data centers that form the backbone of the cloud, accounts for 18% of the nation's exposed hosts. In China, deployment is clustered in major technology hubs such as Beijing, which alone represents 30% of the country's exposed systems. This data paints a picture of a public, unmanaged, and increasingly Chinese-influenced AI infrastructure operating at a global scale. While policy discussions often focus on the governance of large, centralized platforms, a parallel and far less regulated ecosystem has already taken root, one that presents a different set of challenges for security, accountability, and international cooperation.

The “Governance Inversion”

The rapid proliferation of decentralized, open-weight AI models has given rise to a phenomenon that security researchers have termed a "governance inversion": a fundamental reversal of traditional risk and accountability structures. In a centralized, platform-hosted service like ChatGPT, a single entity, such as OpenAI, maintains comprehensive control over the entire technology stack. This entity implements safety guardrails, monitors for malicious activity, rate-limits requests to prevent abuse, and holds the ultimate "kill switch" to terminate access if necessary. Accountability is clear and concentrated. Once a model's weights are released, however, this entire control structure disappears. The original developer loses all direct influence over how, where, and for what purpose the model is deployed and used, fundamentally altering the calculus of risk management.

This shift results in a radical diffusion of accountability. Instead of a single point of control, responsibility is scattered across tens of thousands of independent operators in more than a hundred countries, each running systems that are entirely disconnected from the oversight of commercial AI platforms. There is no centralized authentication, no abuse detection, and no mechanism for coordinated response. In such a fragmented environment, enforcing any kind of safety standard becomes nearly impossible. The research identified a persistent backbone of 23,000 hosts with extremely high uptime, indicating that these are not fleeting experiments but operational systems. To complicate matters, a significant portion of this core infrastructure, between 16% and 19%, could not be attributed to any identifiable owner, which leaves traditional abuse reporting and takedown procedures with no one to contact.
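To put the backbone figures in absolute terms, the short Python calculation below converts the reported percentages into host counts. It assumes that the 16% to 19% range applies to the 23,000 backbone hosts and treats the published figures as exact, so the results are approximations rather than numbers reported by the study.

```python
TOTAL_HOSTS = 175_000               # exposed hosts reported in the study
BACKBONE_HOSTS = 23_000             # persistent, high-uptime hosts
UNATTRIBUTED_RANGE = (0.16, 0.19)   # assumed to be a share of the backbone

# Share of all exposed hosts that belong to the persistent backbone.
backbone_share = BACKBONE_HOSTS / TOTAL_HOSTS

# Approximate number of backbone hosts with no identifiable owner.
unattributed_low, unattributed_high = (
    round(BACKBONE_HOSTS * share) for share in UNATTRIBUTED_RANGE
)

print(f"Backbone share of all exposed hosts: {backbone_share:.1%}")
print(f"Unattributed backbone hosts: {unattributed_low:,} to {unattributed_high:,}")
```

Under these assumptions, the backbone is a little over 13% of all exposed hosts, and somewhere between roughly 3,700 and 4,400 of its systems have no owner to whom an abuse report could be sent.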

From Chatbots to Autonomous Agents

The security implications of this vast, unmanaged network are amplified by the advanced and increasingly autonomous capabilities of the models being deployed. Analysis of the 175,000 exposed hosts revealed that nearly half of them, 48%, are configured with "tool-calling" abilities. This means they are not simply passive text generators but can take direct action in the digital world. These AI systems can be instructed to execute code, interact with external APIs, and connect to other systems to perform tasks autonomously. This capability fundamentally changes the threat landscape. On an unauthenticated, tool-enabled server, an attacker no longer needs to rely on traditional methods like deploying malware or stealing credentials. A single, carefully crafted prompt can instruct the AI to perform malicious actions.

These actions could range from summarizing confidential internal documents and exfiltrating sensitive data to extracting API keys embedded in code or triggering downstream services in a corporate network. The prompt itself becomes the primary attack vector. The danger is compounded by the fact that approximately 26% of the identified hosts are running models that have been specifically optimized for multi-step reasoning and planning. When this advanced cognitive capability is combined with tool-calling, it enables the AI to not just execute a single command but to devise and carry out complex, multi-stage operations with little to no human intervention. This paves the way for fully autonomous agents capable of pursuing goals, whether benign or malicious. Alarmingly, the study also identified at least 201 hosts that were explicitly running “uncensored” configurations, where safety guardrails have been deliberately stripped away, creating powerful and unpredictable tools that are ripe for misuse.
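The mechanism behind this risk can be sketched in a few lines of Python. The example below is a deliberately harmless toy: the "files" live in an in-memory dictionary, the model's output is a hand-written stand-in, and every name in it (`FAKE_FILES`, `read_file`, `dispatch`, the allowlist parameter) is a hypothetical chosen for illustration, not the interface of any real model server.

```python
import json

# Hypothetical in-memory "files"; a real deployment might expose disk, shell, or HTTP.
FAKE_FILES = {
    "notes.txt": "quarterly numbers",
    "config.env": "API_KEY=example-not-real",
}


def read_file(name):
    """Toy tool: return the contents of a fake file, or an empty string."""
    return FAKE_FILES.get(name, "")


TOOLS = {"read_file": read_file}


def dispatch(tool_call_json, allowed_tools):
    """Run a model-emitted tool call only if the tool is on the allowlist."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in allowed_tools:
        return {"ok": False, "error": f"tool '{name}' not permitted"}
    result = TOOLS[name](**call.get("arguments", {}))
    return {"ok": True, "result": result}


# Stand-in for what a model might emit after a prompt such as "show me config.env".
model_output = json.dumps({"name": "read_file", "arguments": {"name": "config.env"}})

# An open server executes whatever the model asks for.
open_server = dispatch(model_output, allowed_tools=set(TOOLS))

# The same request when the operator has permitted no tools.
locked_down = dispatch(model_output, allowed_tools=set())

print(open_server)
print(locked_down)
```

In the open configuration, the prompt alone is enough to surface the embedded key, since nothing sits between the model's request and the tool. The allowlist is only one illustrative control; it is not something the study describes.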

A New Strategy for the West

The emergence of this decentralized, unmanaged AI substrate is not a fleeting anomaly but a persistent feature of the modern technological landscape, one that is projected to professionalize and become more capable over time. The core backbone of these systems is expected to grow more stable, handling increasingly sensitive data and executing more complex tasks as features like autonomous agency become standard. This reality requires a fundamental strategic reassessment from Western AI labs and policymakers. The traditional assumptions about influence, which were rooted in the control of centralized platforms, no longer hold. Even perfect governance of Western-hosted services will have limited impact if the dominant, real-world AI capabilities are deployed on an open, decentralized network powered by non-Western models. To maintain influence and relevance, Western labs have to evolve their approach to open-source releases.

They need to move beyond treating these releases as one-off research contributions and instead view them as what they have become: long-lived infrastructure artifacts with global reach and lasting consequences. This shift demands a new commitment to post-release engagement and responsibility, including active investment in monitoring the adoption, modification, and potential misuse of their models in the wild. While the labs can no longer control deployment directly, they can still shape the types of risks they introduce into the ecosystem through more careful model design, ongoing security research, and proactive engagement with the global developer community. The center of gravity in the open-source AI world has shifted, not by dictate, but because of the practical and economic realities of who supplies the powerful, accessible tools that developers demand. The 175,000 exposed hosts are merely the visible manifestation of this deep and ongoing realignment.