Advances in technology have dramatically changed how information is exchanged. A pivotal development is generative artificial intelligence (AI), which has changed how information is created, consumed, and manipulated. As the technology matures, it plays a growing role in propagating disinformation, which calls for a close look at its mechanisms, applications, and potential effects on society.

Generative AI and Disinformation: The Intersection

Generative AI automates the creation of text, images, and other digital content, changing how media is generated and distributed. In disinformation, these capabilities are used to craft many slightly varied versions of the same message. This technique, termed “AIPasta,” contrasts with traditional CopyPasta methods, which repeat the same message verbatim. Generative AI’s relevance extends beyond creation: it enables the manipulation of digital narratives at a scale that was not previously possible.

The intersection of generative AI with disinformation campaigns is a critical concern for the digital information environment. Because the technology can fabricate content that appears to come from many different sources, it is well suited to building intricate webs of digital deception. For media manipulation, this makes it harder to judge whether content is authentic and truthful.

Core Mechanisms of Generative AI in Disinformation

Engine of Creation: Text Variation

Generative algorithms are at the core of AI-driven disinformation. These models can produce many variations of a text, making disinformation more effective and harder to counteract. Unlike repetitive CopyPasta techniques, AI-driven disinformation uses text variation to produce semi-unique narratives. Because each subtly altered version of the same message reads as if a different person wrote it, the result is an illusion of widespread consensus, which complicates efforts to dissect and debunk false claims.

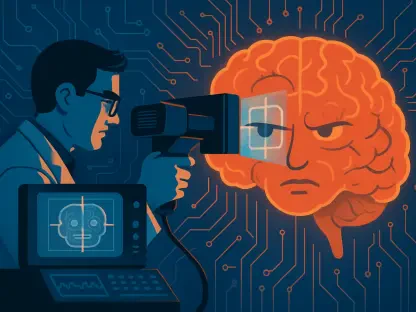

Cloaking Content with Detection Bypass

The ability of generative AI to dodge detection is among its most troubling features. By mimicking human-like variability in the content it produces, AI tools can evade traditional detection systems designed to flag anomalous or propagandistic material. This lets disinformation architects distribute their narratives with greater stealth, hindering content moderation and undermining platform policies that aim to promote factual exchange.
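To make this detection gap concrete, here is a minimal Python sketch. It is not the method of any real platform: it assumes a hypothetical moderation filter that fingerprints normalized text to catch verbatim reposts, and compares that with a simple word-pair (Jaccard) overlap score. The function names (`normalize`, `exact_fingerprint`, `word_shingles`, `jaccard`) and the sample sentences are illustrative assumptions.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9\s]", "", text.lower())
    return " ".join(cleaned.split())


def exact_fingerprint(text: str) -> str:
    """Hash of the normalized text; equal hashes mean a verbatim repost."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def word_shingles(text: str, k: int = 2) -> set:
    """Set of overlapping k-word sequences in the normalized text."""
    words = normalize(text).split()
    if len(words) < k:
        return {" ".join(words)} if words else set()
    return {" ".join(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: str, b: str) -> float:
    """Share of word shingles the two texts have in common (0.0 to 1.0)."""
    sa, sb = word_shingles(a), word_shingles(b)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


# Illustrative sample messages (assumed, not drawn from any real campaign).
original = "The new policy will raise prices for everyone"
copypasta = "The new policy will raise prices for everyone!"
variant = "This new policy is going to raise prices for all of us"

# A verbatim repost matches the fingerprint even with changed punctuation.
print(exact_fingerprint(original) == exact_fingerprint(copypasta))  # True

# A reworded variant does not match, and its lexical overlap is low.
print(exact_fingerprint(original) == exact_fingerprint(variant))  # False
print(round(jaccard(original, variant), 2))  # 0.2
```

In this example the reworded variant shares only 3 of 15 distinct word pairs with the original, so both the exact fingerprint and a lexical-overlap threshold would likely miss it. Catching such variants would require comparing meaning rather than wording, which is outside the scope of this sketch.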

Recent Innovations and Emerging Behavioral Trends

In recent years, AI-driven disinformation has advanced significantly, and emerging trends are extending its potential further. Improvements in content customization and contextual adaptation are making generative AI an increasingly potent instrument for crafting deceptive content. Messages can be aligned more closely with the psychological and ideological predispositions of targeted audiences, which increases their persuasive power while keeping them subtle.

The behavioral side of AI engagement has also evolved. By drawing on interaction patterns, generative AI systems can learn from user responses and adjust their outputs for maximum impact. This behavioral alignment marks a critical advance in disinformation strategies, enabling more sophisticated manipulation of public perception.

Real-World Deployment and Case Studies

Generative AI’s influence reaches many sectors, with the political, healthcare, and social domains at the forefront of real-world applications. Disinformation is evident in narratives surrounding key events such as the 2020 U.S. presidential election and the COVID-19 pandemic. AIPasta built around these narratives illustrates a demographic-specific pattern: such methods proved notably persuasive for particular audiences.

In these politically charged or crisis-related contexts, AI’s role in dissemination has been particularly evident in shaping and reinforcing divisive narratives. This strategic use shows how the technology is being embedded in efforts to blur the line between real and fabricated stories, challenging democratic processes and public trust.

Challenges on the Horizon

Confronting this technology raises complex challenges, foremost among them technical and regulatory hurdles that need urgent attention. Detection and moderation techniques must become more advanced to keep pace with the sophistication of generative AI. Moreover, the use of AI to foster disinformation raises regulatory concerns about privacy, security, and the ethics of AI exploitation.

Efforts to address these challenges include intensified research into more advanced AI moderation systems and international collaboration aimed at establishing robust regulatory frameworks. Yet significant obstacles remain in aligning technological advances with governance policies, which calls for continued vigilance and proactive public discussion.

Looking Ahead: The Future of Generative AI in Disinformation

The trajectory of generative AI in disinformation campaigns points toward greater complexity and new challenges for both technology developers and policymakers. As the technology evolves, breakthroughs in AI-driven content creation tools could push narrative crafting even further, with implications across diverse sectors. The need for strategic countermeasures will grow accordingly, and mitigation efforts will have to evolve alongside the tools they target.

Over the long term, generative AI’s integration into disinformation practices has begun a profound shift in how the public perceives and consumes information. The continuing sophistication of AI capabilities requires a reevaluation of current strategies, so that defenses are as dynamic and adaptive as the threats they aim to counteract.

A Summary of Generative AI’s Role in Disinformation

Taken together, the core attributes, recent developments, and observed use cases of generative AI in disinformation reveal a technologically sophisticated approach with a tangible impact on information ecosystems. Its ability to create varied and evasive narratives, combined with emerging behavioral alignment, enhances its potency. The technology now stands at a crossroads, where its innovative capabilities are matched by a critical need for decisive action to curb its misuse. As generative AI continues to advance, its future deployment in the information space carries a shared responsibility to balance innovative use with ethical obligations.