What if the most advanced technology shaping society today can predict patterns and optimize systems but stumbles when faced with the messy, unpredictable nature of human emotion and culture? This gap in artificial intelligence (AI) capabilities is not a minor glitch—it’s a fundamental challenge that could define whether AI becomes a force for good or a source of unintended harm. As AI permeates every corner of life, from healthcare to climate solutions, the absence of human context in its design raises urgent questions about its future.

This issue matters because AI is no longer just a tool for tech enthusiasts; it’s a cornerstone of global progress, shaping decisions that affect millions of people. Yet without grounding in the humanities (disciplines such as philosophy, sociology, and literature that unpack the nuances of human experience), AI risks amplifying biases and missing critical perspectives. This article explores why integrating human stories and values into AI development is not a luxury but a necessity for building technology that truly serves humanity.

Why AI Must Embrace Human Narratives

At the heart of AI’s current limitations lies a stark reality: raw data and algorithms alone cannot capture the depth of human experience. A system might analyze thousands of medical records in seconds, but without understanding a patient’s cultural background or personal fears, it could miss the bigger picture. The humanities offer a lens to interpret these subtleties, ensuring AI doesn’t reduce people to mere numbers.

This isn’t just about making AI more empathetic—it’s about making it effective. Systems that ignore context often produce solutions that fail in real-world scenarios. For instance, an AI designed for disaster response might prioritize efficiency over local traditions, alienating the very communities it aims to help. Bridging this gap requires a deliberate shift toward embedding human narratives into the core of AI design.

The stakes are high as AI continues to evolve at a breakneck pace. Without a foundation in the humanities, the risk of building tools that are powerful yet tone-deaf grows with every new deployment. This intersection of technology and human insight is where the future of responsible innovation will be forged.

The Hidden Dangers of AI’s Uniform Thinking

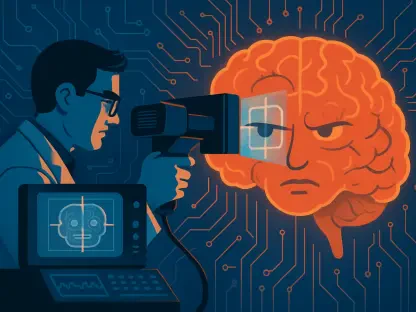

Current AI systems often suffer from what experts call the “homogenization problem.” Most are built on similar architectures, akin to baking identical cakes from the same recipe across the globe, regardless of local tastes or needs. This uniformity replicates biases and blind spots, embedding them into applications ranging from hiring algorithms to predictive policing, often with harmful consequences.

Consider the societal fallout from social media platforms, where algorithmic echo chambers have deepened divisions over the past decade. AI, now moving into critical sectors like healthcare and climate action, could repeat these missteps on an even larger scale if left unchecked. Some studies report that biased AI in healthcare has already contributed to disparities in diagnosis, with error rates in some cases up to 30% higher for marginalized groups.

Addressing this demands urgent action. The window to steer AI development toward inclusivity and context-awareness is narrowing as these systems become more entrenched. Ignoring this challenge risks creating a technological landscape that prioritizes efficiency over fairness, amplifying existing inequities rather than resolving them.

Reframing AI with a Human Perspective

As a better path forward, researchers have proposed the concept of “Interpretive AI” as a transformative approach. This paradigm shifts the focus from rigid, one-size-fits-all solutions to systems that embrace ambiguity and context, much as human reasoning does. Instead of delivering a single “correct” answer, Interpretive AI would offer multiple valid perspectives, reflecting the complexity of real-world issues.

The practical applications of this vision are easy to picture. In healthcare, AI could move beyond symptom checklists to capture a patient’s full story (cultural beliefs, emotional state, and personal history), leading to more tailored and trusted care. Similarly, in climate action, such systems could adapt global data to local cultural and political realities, crafting solutions that communities are more likely to adopt. These examples show how humanities-driven AI could tackle intricate, human-centric challenges.

Breaking the mold of uniform AI design also requires diverse architectures. Initiatives like “Doing AI Differently” push for alternative frameworks that prioritize interpretive depth over computational sameness. Supported by international collaborations, this movement underscores that reimagining AI is not just a technical fix but a cultural and ethical imperative, with far-reaching implications for global problem-solving.

Champions Pushing for Change in AI

Voices from across the field are sounding the alarm and driving this shift. Professor Drew Hemment, a leading expert in interpretive technologies, likens current AI to someone who has memorized a dictionary but struggles to hold a meaningful conversation. This vivid analogy captures the interpretive gap that leaves AI ill-equipped for nuanced human interactions, a flaw that must be addressed.

Safety remains a cornerstone of this transformation, as emphasized by Jan Przydatek of a prominent safety-focused foundation. Future AI systems, regardless of their innovative design, must be deployed with reliability and security at their core. This perspective reinforces that human-centered AI isn’t just about understanding—it’s about protecting those it serves.

Global collaboration amplifies these efforts, with researchers from the UK and Canada uniting through shared funding initiatives to explore new AI paradigms. This diversity of thought, spanning academia and industry, reflects a collective commitment to redefining AI. Their combined insights pave the way for systems that don’t just process data but resonate with the richness of human experience.

Crafting a Future Where AI Reflects Humanity

Building this human-centered AI future demands actionable steps, starting with interdisciplinary partnerships. Technologists and humanities scholars must collaborate from the outset, ensuring that interpretive features are baked into AI design rather than added as an afterthought. Such teamwork can spark innovations that balance raw computational power with cultural and ethical awareness.

Funding and research opportunities also play a critical role. Programs like “Doing AI Differently” demonstrate the value of investing in alternative AI architectures that prioritize context over uniformity. Allocating resources to these explorations, especially between 2025 and 2027, could yield breakthroughs that redefine how technology interacts with society.

Ultimately, the goal is to foster human-AI collaboration that amplifies creativity and understanding. By embedding humanities into every stage of development, AI can become a tool that enhances human potential rather than overshadows it. This vision offers a roadmap for tackling global challenges, ensuring technology evolves in harmony with the values and stories that define humanity.

The push to integrate the humanities into AI development marks a defining moment. It signals a shift from viewing AI solely as a technical marvel to recognizing it as a cultural force that needs to mirror human complexity. The efforts of researchers, safety advocates, and global collaborators are laying a foundation for technology that respects and reflects diverse perspectives.

The actionable steps outlined here (fostering interdisciplinary dialogue, securing targeted funding, and prioritizing interpretive design) offer a clear path forward. These measures can help ensure that AI does not just solve problems but does so with an understanding of the human lives at stake. The lesson of this movement is that technology’s true power emerges when it serves as a partner to humanity, not a replacement.