In the fast-paced realm of artificial intelligence (AI), large language models (LLMs) have emerged as vital tools, driving innovations from conversational chatbots to sentiment analysis systems. Yet their heavy computational requirements often lead to slow processing and high energy consumption, creating formidable obstacles to scaling and practical deployment across sectors. A pioneering study published in Nature Computational Science by Leroux, Manea, Sudarshan, and colleagues proposes a promising alternative: an analog in-memory computing attention mechanism designed to make LLMs faster and more energy-efficient. The work suggests a shift in AI hardware architecture that could set a new benchmark for efficiency and speed at a time when demand for real-time AI keeps growing.

The core challenge with current digital computing frameworks lies in their struggle to meet the intense demands of modern LLMs, particularly due to the complexity of attention mechanisms within transformer-based designs. These mechanisms, reliant on extensive matrix multiplications, consume significant time and power, forming critical bottlenecks that impede real-time applications in essential fields like healthcare and finance. The researchers identify these inefficiencies as a pressing barrier, underscoring the need for an innovative approach to support the expanding role of AI technologies in addressing complex, time-sensitive challenges across industries.

The Limitations of Digital Computing

Addressing Computational Bottlenecks

Traditional digital computing systems face substantial hurdles when supporting the heavy computational loads of LLMs. At the heart of these models are the attention mechanisms of transformer architectures, which rely on large matrix multiplications. For each token, attention compares a query vector against the key vectors of the tokens it can attend to, turns the resulting scores into weights, and uses those weights to combine the corresponding value vectors. These operations let a model focus on the most relevant parts of its input, but they are slow and resource-intensive: when every token attends to every other token, the work grows quadratically with sequence length. Processing delays follow, limiting how quickly AI systems can respond. This is not merely a technical annoyance but a significant limitation for industries that depend on rapid data analysis, where every second counts in decision-making.
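
For reference, the standard scaled dot-product attention from the transformer literature fits in a few lines of NumPy. This is the generic digital computation, not the analog mechanism from the study. The function names and the multiply-accumulate (MAC) counting helper are illustrative. The two `@` products are the matrix multiplications that dominate the cost.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row maximum for numerical stability before exponentiating.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v):
    """Standard transformer attention: softmax(Q K^T / sqrt(d)) V.

    q: (n_queries, d), k: (n_keys, d), v: (n_keys, d_v)
    """
    d = q.shape[-1]
    # First matrix multiplication: similarity of every query with every key.
    scores = q @ k.T / np.sqrt(d)        # shape (n_queries, n_keys)
    weights = softmax(scores, axis=-1)   # each row sums to 1
    # Second matrix multiplication: weighted sum of the value vectors.
    return weights @ v, weights          # output shape (n_queries, d_v)


def attention_macs(n_queries: int, n_keys: int, d: int, d_v: int) -> int:
    # Multiply-accumulate count of the two matrix products above.
    return n_queries * n_keys * d + n_queries * n_keys * d_v


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    seq_len, d = 8, 4
    q, k, v = (rng.standard_normal((seq_len, d)) for _ in range(3))
    out, w = scaled_dot_product_attention(q, k, v)
    print(out.shape, w.sum(axis=-1))
    # Self-attention over n tokens: each doubling of n multiplies the count by 4.
    for n in (1_000, 2_000, 4_000):
        print(n, attention_macs(n, n, 64, 64))
```

Doubling the sequence length quadruples the MAC count, because every query is scored against every key.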

Moreover, these digital operations are energy-hungry, because data must be shuttled constantly between memory and processing units. In many workloads, fetching data from memory consumes more energy than the arithmetic performed on it. During text generation, the keys and values stored for earlier tokens must also be reread for every new token. Together these costs create a dual challenge of performance and sustainability, and current architectures struggle to keep pace with the escalating needs of AI applications. The strain exposes a critical gap in today's technology and is pushing researchers toward alternatives that can meet these demands without the prohibitive costs in time and energy of current setups.
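
The data-movement cost can be made concrete with some back-of-envelope arithmetic for text generation with a key-value (KV) cache. In this common technique, the keys and values computed for earlier tokens are stored and reread at every step. The model dimensions below are hypothetical. The sketch assumes standard multi-head attention, 16-bit storage, a single sequence, and no cache compression. It counts only the attention cache and ignores the model weights, which add further traffic.

```python
from dataclasses import dataclass


@dataclass
class DecoderConfig:
    # Hypothetical model dimensions, chosen only for illustration.
    n_layers: int = 24
    d_model: int = 1024
    context_len: int = 1024    # number of cached earlier tokens
    bytes_per_value: int = 2   # 16-bit floating point


def kv_bytes_read_per_token(cfg: DecoderConfig) -> int:
    """Bytes of cached keys and values fetched to generate one new token."""
    # Factor 2: one key vector and one value vector per cached token, per layer.
    return 2 * cfg.n_layers * cfg.context_len * cfg.d_model * cfg.bytes_per_value


def attention_macs_per_token(cfg: DecoderConfig) -> int:
    """Attention multiply-accumulates for one new query token, over all layers."""
    # One dot product with every cached key, then a weighted sum of every cached value.
    return 2 * cfg.n_layers * cfg.context_len * cfg.d_model


cfg = DecoderConfig()
traffic = kv_bytes_read_per_token(cfg)
macs = attention_macs_per_token(cfg)
print(f"KV data read per token: {traffic / 2**20:.0f} MiB")  # 96 MiB
print(f"MACs per byte fetched: {macs / traffic:.2f}")         # 0.50
```

Under these assumptions, the attention step performs only about half a multiply-accumulate for each byte it fetches. Its speed and energy use are therefore dominated by memory traffic rather than arithmetic. In this kind of workload, computing inside the memory instead of moving data out of it is most attractive.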

Impact on Real-Time Use

The limitations of digital computing have profound implications for real-time AI applications, particularly in sectors where immediacy is paramount. In healthcare, for instance, delays in processing patient data can hinder timely diagnoses, potentially affecting outcomes in critical situations. Similarly, in financial markets, where algorithms must react to market fluctuations within milliseconds, sluggish processing can lead to missed opportunities or substantial losses. These delays, rooted in the inefficiencies of traditional systems, restrict the practicality of deploying LLMs in environments that demand instantaneous responses.

Beyond speed, the high energy demands of digital architectures pose additional barriers to widespread adoption. The environmental impact of powering these systems, often overlooked in the pursuit of performance, adds pressure to find solutions that minimize resource use. As AI continues to permeate various aspects of daily life, the inability of digital frameworks to support real-time needs without exorbitant energy costs underscores an urgent call for innovation, setting the stage for alternative approaches to bridge this technological divide.

Analog In-Memory Computing: A New Frontier

Redefining Processing Efficiency

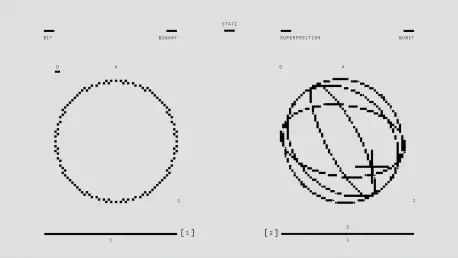

Analog in-memory computing challenges the constraints of traditional digital systems by changing where computation happens. Conventional setups constantly move data between memory and processing units. This approach instead performs calculations directly inside the memory array, which significantly reduces latency. Analog circuits operate on continuous physical quantities such as voltages, currents, or stored charge rather than binary values, so a single memory array can carry out many multiply-accumulate operations in parallel. The result is a substantial gain in processing speed and a compelling way to meet the intensive demands of LLMs with far less energy.
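
The basic physical principle can be illustrated with an idealized simulation of a generic resistive crossbar array. Weights are stored as conductances and inputs are applied as voltages. Each cell passes a current given by Ohm's law, and the currents on a shared column wire add up by Kirchhoff's current law, so one read performs a whole matrix-vector product. This is a textbook sketch, not the circuit used by Leroux et al. The conductance range `g_max`, the read voltage `v_read`, the differential-pair weight mapping, and the Gaussian noise model are all illustrative assumptions.

```python
import numpy as np


def program_crossbar(weights: np.ndarray, g_max: float = 1e-6):
    """Map a signed weight matrix onto two non-negative conductance arrays (siemens).

    Signed weights use a differential pair of cells: W is proportional to
    G_plus - G_minus. g_max is an assumed maximum device conductance.
    """
    scale = g_max / np.abs(weights).max()
    g_plus = np.clip(weights, 0, None) * scale
    g_minus = np.clip(-weights, 0, None) * scale
    return g_plus, g_minus, scale


def crossbar_mvm(g_plus, g_minus, scale, x, v_read=0.2, noise_std=0.0, rng=None):
    """Idealized analog matrix-vector product, returning an estimate of x @ W."""
    rng = np.random.default_rng() if rng is None else rng
    volts = x * v_read                         # inputs applied as row voltages
    # Ohm's law per cell (I = G * V); Kirchhoff's law sums currents down each column.
    i_col = volts @ g_plus - volts @ g_minus   # column currents in amperes
    # Crude stand-in for device and read-out noise, relative to the largest current.
    i_col = i_col + noise_std * np.abs(i_col).max() * rng.standard_normal(i_col.shape)
    return i_col / (scale * v_read)            # convert currents back to numbers


rng = np.random.default_rng(0)
W = rng.standard_normal((64, 16))   # hypothetical: 16 stored key vectors of length 64
x = rng.standard_normal(64)         # hypothetical query vector
g_plus, g_minus, scale = program_crossbar(W)
exact = x @ W
ideal = crossbar_mvm(g_plus, g_minus, scale, x)
noisy = crossbar_mvm(g_plus, g_minus, scale, x, noise_std=0.02, rng=rng)
print(np.allclose(ideal, exact))     # True: the noise-free crossbar matches up to rounding
print(np.abs(noisy - exact).max())   # nonzero: noise makes the analog result approximate
```

With `noise_std=0`, the simulated crossbar reproduces `x @ W` up to floating-point rounding. Any nonzero noise produces an approximate result, which is why accuracy under analog non-idealities needs separate attention.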

This approach also targets a fundamental weakness of digital architectures by largely avoiding the bottlenecks caused by data shuttling. Computing within memory not only accelerates operations but also cuts power usage, a dual advantage in an era where efficiency is critical. Because the technology optimizes core components of AI models, it paves the way for systems that are inherently faster and more sustainable, potentially transforming computational design for future AI advances.

Focus on Attention Mechanisms

A key aspect of this analog in-memory approach is its targeted optimization of attention mechanisms, the backbone of transformer-based LLMs. These mechanisms, which determine how models weigh different parts of their input, are computationally heavy because of the matrix multiplications they require. Performing these operations in analog form, in parallel inside the memory array, makes them far faster than on conventional digital hardware. The goal is to preserve the accuracy and functionality of LLMs while sharply reducing the time needed for critical tasks, making them better suited to dynamic applications. Because analog computation is inherently less precise than digital arithmetic, maintaining accuracy is a design challenge rather than a given.
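
Because analog arithmetic is approximate, it helps to see how errors in the two attention matrix products propagate to the output. The sketch below injects Gaussian error into both Q K^T and the product of the weights with V as a crude stand-in for analog non-idealities. The noise model, the noise levels, and the choice to keep the softmax exact are assumptions for illustration. The numbers it prints say nothing about the accuracy of the hardware in the study.

```python
import numpy as np


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))  # shift for numerical stability
    return e / e.sum(axis=axis, keepdims=True)


def noisy_matmul(a, b, rel_noise, rng):
    """Assumed analog model: exact product plus Gaussian error scaled to the
    largest output magnitude. Real devices have more complex non-idealities."""
    out = a @ b
    return out + rel_noise * np.abs(out).max() * rng.standard_normal(out.shape)


def attention(q, k, v, rel_noise=0.0, rng=None):
    """Scaled dot-product attention with both matrix products computed 'in analog'."""
    rng = np.random.default_rng() if rng is None else rng
    scores = noisy_matmul(q, k.T, rel_noise, rng) / np.sqrt(q.shape[-1])  # Q K^T
    weights = softmax(scores)                        # kept exact in this sketch
    return noisy_matmul(weights, v, rel_noise, rng)  # weights @ V


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((16, 32)) for _ in range(3))
reference = attention(q, k, v)  # rel_noise=0: the exact digital result
for rel_noise in (0.0, 0.01, 0.05, 0.2):
    approx = attention(q, k, v, rel_noise, rng)
    err = np.linalg.norm(approx - reference) / np.linalg.norm(reference)
    print(f"relative noise {rel_noise:.2f} -> relative output error {err:.3f}")
```

Sweeping `rel_noise` like this is a simple way to ask how much imprecision a model can tolerate. The sketch does not model any of the circuit-level or training-level techniques that real analog accelerators may use to recover accuracy.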

The benefits extend beyond speed, as the reduced computational load also lowers energy requirements. This efficiency is particularly valuable for applications that must run continuously, so that performance does not come at the expense of sustainability. By homing in on attention mechanisms, the technology targets the part of the model where a large share of the inefficiency lies. The result is a tailored solution that could redefine how AI models process and prioritize information in real time.

Sustainability and Societal Impact

Greening AI Technology

As AI applications proliferate, their environmental footprint has become a pressing concern, with training and running LLMs contributing significantly to carbon emissions. The energy-intensive nature of digital computing makes the problem worse. Analog in-memory computing offers a timely response by sharply cutting the power needed to run LLMs, particularly during inference, when trained models generate their outputs. This reduction aligns with global sustainability goals and positions the technology as a step toward greener AI practices that lessen the environmental burden of technological progress.

The shift to energy-efficient systems is not just a technical adjustment but a response to broader societal demands for responsible innovation. By minimizing the carbon footprint of AI operations, this approach addresses criticisms of the industry’s environmental impact, fostering technologies that support ecological balance. The emphasis on sustainability reflects a growing awareness within the field that high performance must be paired with accountability, ensuring that advancements do not compromise the planet’s well-being for future generations.

Balancing Performance and Responsibility

Achieving high functionality while minimizing environmental harm is a delicate balance that analog in-memory computing strives to maintain. The technology’s ability to increase processing speed without a corresponding spike in energy use marks a significant departure from traditional methods, offering a model in which performance and responsibility coexist. This dual benefit is particularly relevant as industries increasingly adopt AI, since they need systems that can scale without worsening the ecological challenges the world already faces.

Furthermore, this balance extends to economic considerations, as reduced energy consumption can lower operational costs for organizations deploying AI at scale. The societal impact of such savings could democratize access to advanced technologies, enabling smaller entities to leverage powerful tools previously out of reach due to resource constraints. By prioritizing both efficiency and ethical considerations, the approach underscores a holistic vision for AI development, where technological gains contribute positively to both industry capabilities and environmental stewardship.

Future Potential and Industry Applications

Transforming Real-World Solutions

The potential applications of analog in-memory computing span a wide array of industries. In healthcare, the ability to analyze patient data in real time could lead to quicker, more accurate diagnoses, improving patient care during critical moments. In finance, algorithms with faster response times could improve high-frequency trading by reacting to market shifts more quickly. Even in consumer technology, virtual assistants might respond more naturally, making everyday interactions smoother.

Beyond specific sectors, the broader impact of this technology lies in its capacity to make AI more accessible and efficient across the board. By addressing the core inefficiencies of current systems, it enables solutions that meet real-world needs with greater agility. The prospect of faster, more responsive AI tools could redefine operational standards in multiple fields, driving innovation and efficiency where traditional digital constraints once limited progress, thus opening new avenues for practical deployment.

Collaborative Innovation

The researchers behind this work advocate a collaborative approach to fully realize the potential of analog computing in AI, urging the broader community to help explore and refine it. While the current findings are significant, the technology’s trajectory could be further shaped through shared expertise and resources. The vision is to integrate analog methods into mainstream AI design, potentially making them a standard for future architectures in the coming years.

Such collaboration could accelerate the development of hybrid systems that combine the best of analog and digital approaches, fostering innovations that address diverse challenges. The invitation to industry leaders and academics alike highlights the transformative promise of this technology, suggesting that collective input could unlock applications yet unimagined. This focus on unity within the field reflects a forward-thinking mindset, aiming to reshape how AI systems are conceptualized and implemented for maximum societal benefit.

Charting the Path Ahead

Taken together, the work of Leroux, Manea, Sudarshan, and their team in integrating analog in-memory computing into LLMs marks a significant turning point in AI development. By redesigning the attention mechanism to increase processing speed and curb energy use, they obtained results that outperformed conventional digital hardware in their evaluations.

Looking forward, the next steps involve scaling these innovations through collaborative research and adapting the technology to diverse applications. Industry stakeholders and academic institutions should prioritize investment in hybrid computing models and test their efficacy in real-world scenarios before broader adoption. Establishing frameworks for energy-efficient AI design will also be crucial to sustaining progress in reducing environmental impact. This path promises to elevate AI capabilities while keeping technological progress aligned with global sustainability goals, setting a precedent for responsible innovation in the years ahead.