The very artificial intelligence designed to predict our future health could inadvertently betray our most private medical secrets, creating a high-stakes paradox at the heart of modern medicine. As large-scale foundation models trained on vast electronic health records advance, they promise to improve medical predictions and patient outcomes. However, this review explores the inherent risk of “memorization,” a critical vulnerability in which these systems store patient-specific data and can subsequently leak it. The purpose of this analysis is to explain this threat, the methods proposed to quantify it, and its implications for the safe and ethical deployment of AI in clinical settings.

Understanding Clinical Foundation Models and Data Privacy

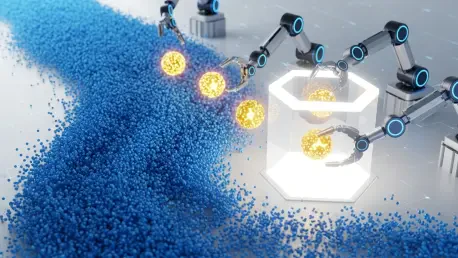

The core technology behind clinical AI involves training foundation models on immense datasets compiled from electronic health records (EHRs). In principle, these systems are designed to achieve generalization, a process where the model learns to identify broad, underlying patterns from countless patient histories. This allows it to make accurate predictions about new, unseen medical cases, effectively learning the statistical rules of disease progression and treatment response.

The fundamental tension arises when this process fails, leading to memorization. Instead of learning a general rule, the model stores specific, verbatim details from individual patient records used in its training. This failure mode transforms a powerful predictive tool into a potential vector for data leakage, directly threatening the confidentiality that forms the bedrock of patient trust. A model that can be prompted to regurgitate a unique sequence of a patient’s medical events poses a direct and serious risk to privacy.

Key Dimensions of Memorization Risk

Attacker’s Prior Knowledge as a Threat Vector

The potential for extracting sensitive information from a clinical AI model is not abstract; it is directly correlated with the amount of prior knowledge an adversary possesses. Research demonstrates that the more specific details an attacker already has about a patient, such as a unique series of lab tests or diagnoses, the higher the likelihood they can prompt the model to reveal additional, unknown information. This “query-and-response” dynamic turns partial information into a key for unlocking a more complete patient profile.

This vulnerability makes targeted attacks a practical concern. An individual with some level of access to a patient’s health information could leverage the AI model to fill in the gaps, potentially exposing highly sensitive data. This transforms the risk from a random possibility into a directed threat, where specific individuals can be targeted by those with malicious intent and a sliver of pre-existing knowledge.
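
The link between prior knowledge and extraction can be illustrated with a toy experiment. The sketch below is not the method used in the research described here. It assumes a hypothetical lookup-table "model" that has perfectly memorized a handful of invented event sequences, and it asks how often a known prefix of events pins down exactly one continuation. All records, event codes, and function names are made up for illustration.

```python
from collections import defaultdict

# Synthetic, invented event sequences (no real patient data).
RECORDS = [
    ("visit", "lab_a", "dx_common", "rx_1"),
    ("visit", "lab_a", "dx_common", "rx_2"),
    ("visit", "lab_a", "dx_rare", "rx_9"),
    ("visit", "lab_b", "dx_common", "rx_1"),
]


def build_memorizing_model(records):
    """Map every prefix to the set of next events seen after it in training."""
    table = defaultdict(set)
    for rec in records:
        for i in range(1, len(rec)):
            table[rec[:i]].add(rec[i])
    return table


def extraction_rate(records, known_events):
    """Fraction of records whose next event is uniquely determined
    once the attacker knows the first `known_events` events."""
    model = build_memorizing_model(records)
    hits = sum(1 for rec in records if len(model[rec[:known_events]]) == 1)
    return hits / len(records)


# Extraction rate for an attacker who knows 1, 2, or 3 leading events.
rates = {k: extraction_rate(RECORDS, k) for k in (1, 2, 3)}
```

In this toy setting the rate rises as the attacker knows more events, because a longer prefix is shared by fewer records. A real audit would query an actual trained model rather than a lookup table, and would have to account for sampling and approximate matches.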

The Vulnerability of Statistical Outliers

Patients with rare or unique medical conditions are disproportionately vulnerable to memorization. Within a massive training dataset, their records function as statistical outliers, deviating significantly from the common patterns the model is designed to learn. Because their data does not conform to a general trend, the model is more likely to store their specific details directly rather than abstracting a rule from them.

This phenomenon makes re-identification a far greater risk for these individuals. A memorized detail about a common condition like hypertension could apply to millions, but a detail related to a rare genetic disorder is far more likely to be traced back to a specific person. Consequently, the very patients who could benefit most from AI-driven insights into rare diseases are also the ones whose privacy is most exposed by this technological flaw.
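
A rough way to see why rarity matters is to count how many records share a given attribute. The sketch below uses an invented cohort and a hypothetical threshold `k`, in the spirit of a k-anonymity check. It is an illustrative assumption, not a procedure described in the work reviewed here.

```python
from collections import Counter

# Invented diagnosis labels for a synthetic cohort of 1,000 records.
cohort = (
    ["hypertension"] * 900
    + ["type_2_diabetes"] * 99
    + ["rare_genetic_disorder"] * 1
)


def anonymity_set_sizes(diagnoses):
    """Return, for each diagnosis, how many records share it."""
    return Counter(diagnoses)


def flag_outliers(diagnoses, k=5):
    """Diagnoses shared by fewer than k records, sorted by name."""
    sizes = anonymity_set_sizes(diagnoses)
    return sorted(dx for dx, n in sizes.items() if n < k)


sizes = anonymity_set_sizes(cohort)
at_risk = flag_outliers(cohort, k=5)
```

A leaked detail about the single rare-disorder record points to one person in this cohort, whereas a leaked hypertension detail is shared by 900. Real records carry many attributes, so the effective group size for a combination of details is usually much smaller than any single count suggests.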

Evolving a New Standard for Privacy Evaluation

The field is witnessing a critical shift away from abstract, one-size-fits-all privacy guarantees toward a more practical and context-aware framework for risk assessment. Traditional privacy metrics often fail to capture the nuances of real-world threats, providing theoretical security that may not hold up against a determined adversary. The new consensus is that a more pragmatic approach is necessary to build trust and ensure safe deployment.

A novel testing setup developed by MIT researchers exemplifies this evolution. This framework emphasizes assessing the feasibility of an attack and the potential harm of a data leak. Instead of treating all privacy breaches as equal, it creates a tiered system that evaluates how much prior information an attacker would need to successfully extract data. This allows for a more realistic understanding of risk, distinguishing between a hypothetical threat and a probable one.

Practical Applications and Real-World Context

Potential Deployment in Predictive Medicine

The intended applications for these advanced foundation models are transformative. In predictive medicine, they could be used to forecast disease progression, identify patients at high risk for developing certain conditions, or recommend personalized treatment plans based on a patient’s unique medical history and genetic makeup. These tools are envisioned as powerful aids in clinical workflows.

By integrating these models, physicians could gain data-driven support for their most complex decisions. An AI could analyze millions of similar cases to predict how a specific patient might respond to a new therapy, augmenting the clinician’s expertise and leading to more proactive and effective care. The goal is not to replace human judgment but to enhance it with unparalleled predictive insight.

The Broader Landscape of Healthcare Data Breaches

The risk of AI memorization does not exist in a vacuum; it enters a healthcare landscape already grappling with significant data security challenges. The U.S. Department of Health and Human Services has recorded hundreds of major health information data breaches affecting millions of people in just the last couple of years. These incidents demonstrate the existing vulnerabilities in protecting sensitive patient information from conventional cyberattacks.

This context underscores the critical importance of establishing robust safeguards before introducing a new and more subtle potential point of failure. Adding AI-driven data leakage to the list of existing threats could further erode patient trust and complicate an already-fraught security environment. Therefore, addressing memorization is not just a technical challenge but a necessary step in shoring up the entire healthcare data ecosystem.

Challenges in Mitigating Data Leakage

Technical and Ethical Hurdles

One of the primary challenges in this domain is the technical difficulty of preventing memorization without compromising model performance. Techniques designed to inject “noise” or otherwise obscure individual data points during training can reduce the risk of leakage, but they often come at the cost of the model’s accuracy and utility. Striking the right balance between privacy preservation and predictive power remains a central technical hurdle for AI developers.
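
The privacy-utility trade-off can be made concrete with a simplified stand-in. The sketch below does not add noise during model training. Instead it applies the Laplace mechanism from differential privacy to a single counting query, assuming a hypothetical true count of 120 and two illustrative values of the privacy parameter `epsilon`. Smaller `epsilon` means stronger privacy and larger expected error.

```python
import random
import statistics


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_count(true_count, epsilon, rng):
    """Laplace mechanism for a counting query (sensitivity 1)."""
    return true_count + laplace_noise(1.0 / epsilon, rng)


def mean_abs_error(true_count, epsilon, trials=2000, seed=0):
    """Average absolute error of the noisy count over repeated releases."""
    rng = random.Random(seed)
    errors = [
        abs(noisy_count(true_count, epsilon, rng) - true_count)
        for _ in range(trials)
    ]
    return statistics.fmean(errors)


# Hypothetical settings: strong privacy (small epsilon) vs. weak privacy.
strong_privacy_error = mean_abs_error(120, epsilon=0.1)
weak_privacy_error = mean_abs_error(120, epsilon=2.0)
```

The expected absolute error of Laplace noise equals its scale, here `1 / epsilon`, so the stronger privacy setting produces a much less accurate count. Training-time approaches face an analogous tension, although the relationship between noise and model accuracy is harder to characterize.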

Beyond the technical aspect lies a profound ethical imperative. The practice of medicine is built on a foundation of trust, a principle enshrined in traditions such as the Hippocratic Oath. A data breach originating from a clinical AI system would represent a fundamental violation of this trust, potentially damaging the patient-physician relationship and discouraging patients from sharing the complete information necessary for their care.

Differentiating Harmful Leaks from Benign Disclosures

A key part of the mitigation effort involves developing a more nuanced approach to risk management. This requires creating systems that can differentiate the severity of potential data leaks. Not all information carries the same weight, and a sophisticated privacy framework must be able to distinguish between disclosures that are benign and those that are catastrophic.

For example, a model inadvertently revealing a patient’s general age range constitutes a privacy violation, but it is fundamentally different from one that exposes a highly sensitive and stigmatized diagnosis, such as an HIV-positive status or a history of substance abuse. By creating a hierarchy of harm, developers can implement proportionate safeguards, focusing the most stringent protections on the data that poses the greatest risk to an individual’s well-being and social standing.
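
A hierarchy of harm could be encoded as a simple lookup that ranks leaked fields by severity. The tiers, field names, and the fail-safe default below are hypothetical choices made for this sketch. In practice the mapping would need to be set by clinicians, ethicists, and legal experts rather than by developers alone.

```python
from enum import IntEnum


class Harm(IntEnum):
    LOW = 1
    MODERATE = 2
    SEVERE = 3


# Hypothetical mapping from record field to harm tier.
HARM_BY_FIELD = {
    "age_range": Harm.LOW,
    "routine_lab_result": Harm.MODERATE,
    "hiv_status": Harm.SEVERE,
    "substance_use_history": Harm.SEVERE,
}


def harm_tier(field):
    """Look up a field's tier; unknown fields default to SEVERE to fail safe."""
    return HARM_BY_FIELD.get(field, Harm.SEVERE)


def triage(leaked_fields):
    """Return the leaked fields ordered from most to least harmful."""
    return sorted(leaked_fields, key=lambda f: (-harm_tier(f), f))


ordered = triage(["age_range", "hiv_status", "routine_lab_result"])
```

Ordering findings this way lets an audit report the most damaging potential disclosures first, so that the strictest safeguards are directed at them.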

The Future of Responsible Clinical AI

The path forward for clinical AI focuses on the development and implementation of robust guardrails to ensure these powerful tools can be deployed safely. Future research will concentrate on refining the practical testing frameworks that can validate a model’s privacy protections before it ever interacts with real patient data in a live clinical setting. This involves creating standardized benchmarks and audit procedures that can be universally applied.

Achieving this will require deep, interdisciplinary collaboration. The problem of AI memorization is not one that can be solved by computer scientists alone. It demands a socio-technical solution crafted by a coalition of AI developers, clinicians, hospital administrators, ethicists, and legal privacy experts. Together, this diverse group can build a comprehensive governance structure that addresses the technical, ethical, and practical dimensions of deploying AI responsibly in medicine.

Concluding Assessment

This review of clinical AI memorization risk highlighted the significant and complex challenge of balancing innovation with patient privacy. The investigation into foundation models revealed that while their potential to advance medicine was immense, the inherent risk of memorizing and leaking sensitive patient data posed a serious threat. The work done to quantify this threat vector, particularly concerning attackers’ prior knowledge and the vulnerability of statistical outliers, established the tangible nature of the danger.

Ultimately, the analysis concluded that the development of practical, tiered evaluation frameworks offered a viable path forward. By shifting the focus from abstract guarantees to real-world attack feasibility and harm assessment, the field had begun to forge the tools necessary for responsible deployment. The central task that remained was to continue this interdisciplinary effort, ensuring that the immense power of artificial intelligence could be harnessed to heal without harm, thereby upholding the foundational trust at the heart of medicine.