The rapid expansion of artificial intelligence, from generative art to complex data analysis, is also creating an energy consumption crisis that threatens the field’s own progress. As AI models grow in size and capability, their demand for electricity is soaring, straining power grids and raising serious questions about whether the current technological trajectory is sustainable. This challenge has set off a race to develop new hardware that can deliver powerful computation without the punishing energy cost.

A new development from a collaborative Japanese research team points to one possible solution. Researchers from the National Institute for Materials Science (NIMS), Tokyo University of Science, and Kobe University have engineered a device that combines the properties of graphene with brain-inspired computing principles. Their work demonstrates a path toward far more efficient AI and suggests that high performance need not come at an unsustainable environmental price. By tackling the AI energy dilemma at the hardware level, it offers a candidate alternative that could reshape the industry’s future.

Is the AI Revolution Running on an Unsustainable Energy Budget?

The digital infrastructure behind modern AI is among the most energy-intensive parts of the economy. Massive data centers, filled with racks of powerful processors, run around the clock to train and operate the large language models and deep learning algorithms that have become integral to science, business, and daily life. Powering and cooling data centers already consumes electricity on the scale of entire countries, and that demand is projected to rise steeply in the coming years.

This escalating demand represents a significant societal and environmental challenge. As the world transitions toward cleaner energy sources, the ballooning power requirements of the AI sector risk undermining these efforts. Without a fundamental shift in how AI computations are performed, the industry’s carbon footprint could become a major obstacle to global climate goals. This reality has forced researchers and engineers to look beyond simply making existing processors more efficient and instead to reimagine the very foundations of computational hardware.

The Soaring Power Demands of Modern Artificial Intelligence

The core reason for AI’s immense energy appetite lies in the architecture of deep learning. These models rely on vast networks of artificial neurons, and both training and running them require enormous numbers of arithmetic operations, mostly multiply-accumulate operations, in which two numbers are multiplied and the product is added to a running sum. A single pass through a large model can involve billions of these operations, and training repeats such passes over huge datasets. Each operation consumes only a tiny amount of energy, but at the scale of state-of-the-art models the cumulative power draw becomes enormous, limiting both the accessibility and the environmental viability of advanced AI.
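To make the scale argument concrete, the sketch below counts multiply-accumulate (MAC) operations for one forward pass through a fully connected network. It is a minimal illustration, assuming a plain dense network with biases ignored; the function name `dense_macs`, the layer widths, and the sample count are hypothetical values chosen for easy arithmetic, not figures from any real model or from the research described here.

```python
def dense_macs(layer_sizes):
    """Count multiply-accumulate (MAC) operations for one forward pass
    through a fully connected network with the given layer widths."""
    # A dense layer with n_in inputs and n_out outputs computes n_out weighted
    # sums of n_in terms each: one MAC per weight (biases ignored).
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical network and workload, for illustration only.
sizes = [1024, 4096, 4096, 1024]
per_sample = dense_macs(sizes)
samples = 1_000_000

print(per_sample)                     # 25165824 MACs per input
print(f"{per_sample * samples:.2e}")  # 2.52e+13 MACs for one pass over the data
```

Because every weight contributes one MAC per input, the count grows with both model size and the volume of data processed, which is why a small per-operation energy cost adds up so quickly.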

This computational load has been a persistent bottleneck, pushing conventional silicon-based hardware toward its physical limits. Software optimizations have delivered incremental gains, but they have not kept pace with the rapid growth in model complexity. The problem is architectural: conventional computers follow the von Neumann architecture, which keeps memory and processing in separate units, so data must be shuttled back and forth between them, and moving data often costs more energy than the arithmetic performed on it. A more sustainable solution requires a fundamentally different approach to processing information.

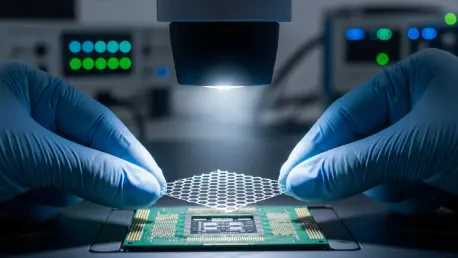

A Brain-Inspired Solution Emerges from Graphene

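Inspiration for a new path forward comes from the most efficient computing device known: the human brain. A class of AI devices known as “physical reservoirs” borrows an idea from how neural circuits process information, letting the intrinsic dynamics of a material perform much of the computation. In this approach, called reservoir computing, an input signal drives a fixed dynamical system (the reservoir), which transforms it into a rich, time-dependent internal state; only a simple readout that interprets this state is trained. Because the reservoir itself is never trained, and in a physical reservoir its dynamics are supplied by the material rather than by digital arithmetic, the approach has long promised to cut power consumption drastically by minimizing traditional, energy-intensive calculations.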

The research team’s innovation lies in its device architecture, which combines a sheet of graphene with an ion gel. Graphene, a single layer of carbon atoms, offers exceptionally high electron mobility, while the ion gel introduces complex, coupled movements of ions and electrons. This interplay lets the device process input signals over an extremely broad range of time constants, so it can retain information about both recent and more distant inputs, giving it a much richer computational capacity than previous reservoir devices.
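The idea of a reservoir with many time constants can be illustrated in software with a leaky echo state network, a common digital form of reservoir computing. The sketch below is not the researchers’ device model: it assumes a random recurrent network of tanh nodes whose time constants are log-spaced, so some nodes react within a single step while others average over about 100 steps, loosely standing in for the device’s broad range of time constants. Only the linear readout is trained, in closed form by ridge regression. All function names and parameter values (`run_reservoir`, `train_readout`, `tau_max`, and so on) are hypothetical.

```python
import numpy as np


def run_reservoir(u, n_nodes=100, tau_min=1.0, tau_max=100.0,
                  norm_scale=0.9, seed=0):
    """Drive a fixed, random leaky-tanh reservoir with the 1-D input u.

    Returns a (len(u), n_nodes) array of node states over time.
    """
    rng = np.random.default_rng(seed)
    # Input and recurrent weights are drawn once and never trained.
    w_in = rng.uniform(-1.0, 1.0, size=n_nodes)
    w = rng.normal(size=(n_nodes, n_nodes))
    # With every leak rate in (0, 1] (tau_min >= 1), scaling W to a largest
    # singular value below 1 makes the update a contraction, so states reflect
    # the input history rather than the initial state.
    w *= norm_scale / np.linalg.norm(w, 2)
    # Log-spaced time constants: fast nodes (tau near 1) track the latest
    # input, slow nodes (tau near tau_max) average over many steps.
    taus = np.logspace(np.log10(tau_min), np.log10(tau_max), n_nodes)
    leak = 1.0 / taus
    x = np.zeros(n_nodes)
    states = np.empty((len(u), n_nodes))
    for t, u_t in enumerate(u):
        x = (1.0 - leak) * x + leak * np.tanh(w @ x + w_in * u_t)
        states[t] = x
    return states


def _with_bias(states):
    # Append a constant column so the readout can learn an offset.
    return np.hstack([states, np.ones((len(states), 1))])


def train_readout(states, targets, ridge=1e-4):
    """Fit the only trained part, a linear readout, by ridge regression."""
    s = _with_bias(states)
    return np.linalg.solve(s.T @ s + ridge * np.eye(s.shape[1]), s.T @ targets)


def predict(states, w_out):
    return _with_bias(states) @ w_out


# Usage: recall the input from `delay` steps earlier, a standard memory task.
rng = np.random.default_rng(1)
u = rng.uniform(-0.5, 0.5, size=2000)
states = run_reservoir(u)
washout, split = 100, 1500       # discard start-up states; hold out the rest
for delay in (1, 10, 30):
    target = np.roll(u, delay)   # target[t] = u[t - delay] for t >= delay
    w_out = train_readout(states[washout:split], target[washout:split])
    y = predict(states[split:], w_out)
    r = np.corrcoef(y, target[split:])[0, 1]
    print(f"delay {delay:2d}: correlation {r:.2f}")
```

The readout is the only trained component, and fitting it is a single linear solve rather than the iterative backpropagation used to train deep networks. Varying `tau_min` and `tau_max` is one way to explore how the spread of time constants affects how far back the readout can recall the input.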

Research Confirms a 100-Fold Drop in Computational Load

The performance of this graphene-based hardware is a major step forward. In testing, the researchers report that the device achieved the highest computational performance yet recorded for a physical reservoir, comparable to that of sophisticated deep learning models running in software on conventional hardware. This result matters because it addresses the drawback that has historically limited the adoption of reservoir computing: a trade-off between energy efficiency and raw performance.

Just as important, this performance came with a large reduction in computational burden. According to the research, the device reduced the required computational load to approximately 1/100th of what software-based AI typically needs to perform a similar task. A two-orders-of-magnitude improvement is more than an incremental step, and it strengthens the case for physical reservoir computing as a commercially and environmentally viable alternative to the current power-hungry paradigm.
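One way to see where savings of this kind can come from is to count the digital multiply-accumulate (MAC) operations per time step for a reservoir emulated in software versus one whose dynamics are carried out by a physical device, where only the readout remains digital. This is a back-of-the-envelope sketch under stated assumptions (a fully connected software reservoir, a linear readout, and hypothetical sizes; `digital_macs_per_step` is a hypothetical helper). It is not how the researchers measured their 1/100 figure, and the resulting ratio depends entirely on the sizes chosen.

```python
def digital_macs_per_step(n_nodes, n_inputs, n_outputs, reservoir_in_hardware):
    """Digital MACs needed per time step by a reservoir computer.

    Assumes a fully connected software reservoir and a linear readout.
    In the hardware case the material's own dynamics replace the input and
    recurrent updates, so only the readout is computed digitally.
    """
    readout = n_nodes * n_outputs        # linear readout: one MAC per weight
    if reservoir_in_hardware:
        return readout
    recurrent = n_nodes * n_nodes        # W @ x
    input_proj = n_nodes * n_inputs      # W_in @ u
    return recurrent + input_proj + readout


# Hypothetical sizes, chosen for illustration only.
software = digital_macs_per_step(300, 1, 10, reservoir_in_hardware=False)
hardware = digital_macs_per_step(300, 1, 10, reservoir_in_hardware=True)
print(software, hardware)                                # 93300 3000
print(f"{software / hardware:.1f}x fewer digital MACs")  # 31.1x fewer digital MACs
```

The count ignores the energy spent driving and measuring the physical device, which a real comparison would have to include.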

Paving the Way for Sustainable, High-Performance AI Hardware

The implications of this development are profound, pointing toward a future where powerful AI is not confined to massive, energy-guzzling data centers. By integrating high performance with extreme energy efficiency, this graphene-based technology could enable sophisticated AI to run on smaller, localized devices, from smartphones and autonomous vehicles to advanced medical sensors. This move toward “edge computing” would enhance data privacy, reduce latency, and make AI more accessible and resilient.

This research marks a significant step in the quest for sustainable artificial intelligence. The demonstration of a physical reservoir that is both powerful and exceptionally efficient provides a tangible blueprint for the next generation of AI hardware. As the technology matures, it could help decouple the growth of AI from unsustainable energy consumption, allowing the benefits of this transformative technology to be realized without compromising environmental stewardship. The path toward greener, smarter computing has become noticeably clearer.