In machine learning, one concept has long shaped how models are designed and optimized: the bias-variance trade-off. The principle holds that a model must balance bias (error from assumptions too simple to capture the data) against variance (sensitivity to the particular training sample) to avoid underfitting or overfitting. Recent work led by researchers such as Professor Andrew Wilson of NYU challenges this entrenched idea. The claim that there may be no necessary trade-off at all unsettles a core piece of traditional machine learning wisdom. It also raises the question of whether the constraints implied by the concept have limited what deep learning systems can achieve. As the community weighs these arguments, the implications for building robust, adaptive models are significant, and they call for a reevaluation of long-held assumptions.

Challenging Conventional Wisdom

The traditional bias-variance trade-off has long guided machine learning practice. It holds that simple models underfit because of high bias, missing real patterns in the data, while overly complex models overfit because of high variance, fitting noise as though it were signal. Practitioners have therefore tuned model complexity carefully, aiming for the point where error on unseen data is lowest. Professor Wilson’s critique turns this notion on its head by arguing that the trade-off is not an inevitable constraint. Two empirical phenomena support the argument. In double descent, test error first follows the classical U-shaped curve, peaks around the point where the model becomes just large enough to fit the training data exactly, and then falls again as capacity keeps growing. In benign overfitting, a model that fits noisy training data perfectly still generalizes well. Together they show that increasing model complexity beyond a certain point can improve generalization rather than degrade it. This weakens the case against overparameterization and suggests that highly expressive models can perform well when their behavior is properly understood.
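To make double descent concrete, here is a minimal sketch in Python with NumPy. It is not taken from Wilson’s work; it uses a standard toy setup chosen for illustration. The target depends on all `n_features_total` Gaussian input features, each model sees only the first `p` of them, and it is fitted by minimum-norm least squares. The function name `double_descent_curve` and every parameter value are hypothetical. Under these assumptions, test error typically spikes near `p = n_train`, where the model first becomes able to interpolate the noisy training data. It then falls again as `p` grows past that threshold, even though training error stays at zero.

```python
import numpy as np

def double_descent_curve(n_train=40, n_features_total=400, noise_std=0.5,
                         n_test=2000, seed=0):
    """Train/test MSE of min-norm least squares that uses only the first p features.

    Returns a dict mapping p -> (train_mse, test_mse) for p = 1..n_features_total.
    """
    rng = np.random.default_rng(seed)
    # Assumed ground truth: a unit-norm signal spread over all features, so any
    # model that sees fewer than n_features_total features is misspecified.
    beta = rng.standard_normal(n_features_total)
    beta /= np.linalg.norm(beta)
    X_train = rng.standard_normal((n_train, n_features_total))
    X_test = rng.standard_normal((n_test, n_features_total))
    y_train = X_train @ beta + noise_std * rng.standard_normal(n_train)
    y_test = X_test @ beta + noise_std * rng.standard_normal(n_test)

    curve = {}
    for p in range(1, n_features_total + 1):
        # For p > n_train the system is underdetermined; lstsq then returns
        # the minimum-norm weights among all exact fits.
        w, *_ = np.linalg.lstsq(X_train[:, :p], y_train, rcond=None)
        train_mse = np.mean((X_train[:, :p] @ w - y_train) ** 2)
        test_mse = np.mean((X_test[:, :p] @ w - y_test) ** 2)
        curve[p] = (train_mse, test_mse)
    return curve

if __name__ == "__main__":
    curve = double_descent_curve()
    for p in (5, 20, 35, 40, 45, 80, 400):
        train_mse, test_mse = curve[p]
        print(f"p={p:3d}  train MSE={train_mse:.3g}  test MSE={test_mse:.3g}")
```

The choice of `np.linalg.lstsq` matters here. When `p > n_train`, it returns the minimum-norm solution, which is the interpolating solution under which the second descent in linear models is usually studied. The exact numbers depend on the seed.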

A closer look at overparameterized models reveals a simplicity bias. Despite their vast capacity, these models tend to settle on simple solutions that generalize well, much as Occam’s razor would recommend. This preference undermines the assumption that larger models are doomed to overfit. Even when many different solutions fit the training data equally well, the architecture and training procedure steer the model toward the ones that also work on new inputs. The design question therefore shifts from merely limiting model size to understanding how models navigate the spectrum of complexity. That shift opens the door to strategies that exploit scale without sacrificing accuracy or reliability.
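One well-understood instance of a preference for simple solutions already appears in linear models. It is offered here as an illustration, not as the mechanism Wilson describes for deep networks. With far more parameters than data points, infinitely many weight vectors fit the training data exactly, yet plain gradient descent started from zero converges to the one with the smallest Euclidean norm. The sketch below assumes squared loss and a fixed step size. The helper name `gradient_descent_least_squares` and the problem sizes are hypothetical.

```python
import numpy as np

def gradient_descent_least_squares(X, y, n_steps=5000):
    """Plain gradient descent on 0.5 * ||X w - y||^2, starting from w = 0."""
    # A step size of 1 / sigma_max(X)^2 keeps every update stable.
    lr = 1.0 / np.linalg.norm(X, ord=2) ** 2
    w = np.zeros(X.shape[1])
    for _ in range(n_steps):
        w -= lr * (X.T @ (X @ w - y))
    return w

rng = np.random.default_rng(0)
n, d = 10, 100                          # 100 parameters, only 10 data points
X = rng.standard_normal((n, d))
y = rng.standard_normal(n)

w_gd = gradient_descent_least_squares(X, y)
w_min_norm = np.linalg.pinv(X) @ y      # smallest-norm vector with X w = y

# A different exact fit: add a random component from the null space of X.
null_proj = np.eye(d) - np.linalg.pinv(X) @ X
w_other = w_min_norm + null_proj @ rng.standard_normal(d)

print("both fit the data:", np.allclose(X @ w_gd, y), np.allclose(X @ w_other, y))
print("GD matches min-norm:", np.allclose(w_gd, w_min_norm))
print("norms:", np.linalg.norm(w_gd), np.linalg.norm(w_other))
```

The reason is structural. Every gradient `X.T @ (X @ w - y)` lies in the row space of `X`, so an iterate that starts at zero never picks up a null-space component. The only exact fit with that property is the minimum-norm one.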

Redefining Model Complexity

Another critical dimension of this debate is the rejection of parameter counting as a definitive measure of model complexity. Conventional thinking equates more parameters with a greater risk of overfitting, but this view is increasingly seen as oversimplified. Proponents of the new view argue that a more accurate measure is the distribution over functions that a model induces. What matters is which solutions the model is predisposed to favor, not how many parameters it has. Two models with the same parameter count can differ sharply on this measure if one strongly prefers smooth, simple functions and the other spreads its preference over many erratic ones. Judging models by the functions they favor gives a clearer picture of how well they will generalize. It also points toward systems that combine vast expressive power with robust performance across diverse datasets.
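The gap between counting parameters and examining the induced distribution over functions shows up in a toy Gaussian example. The Python sketch below rests on assumptions chosen for illustration: a linear model with 50 cosine features and independent Gaussian priors on the weights. With these priors, the induced distribution over function values on a grid is Gaussian with covariance `Phi diag(s^2) Phi^T`. The participation ratio of that covariance serves as a rough count of how many function-space directions actually carry prior variance. Both models have exactly 50 parameters; the only difference is that one prior shrinks the higher-frequency weights. The participation ratio and the helper names are illustrative choices, not a measure proposed in the source.

```python
import numpy as np

def function_space_covariance(prior_std, n_points=200):
    """Covariance of f(x) = sum_k w_k cos(pi*k*x) on a grid, with w_k ~ N(0, prior_std[k]**2)."""
    x = (np.arange(n_points) + 0.5) / n_points        # evenly spaced grid on (0, 1)
    k = np.arange(len(prior_std))
    phi = np.cos(np.pi * np.outer(x, k))               # shape (n_points, n_params)
    return phi @ np.diag(prior_std ** 2) @ phi.T        # Cov[f] = Phi diag(s^2) Phi^T

def participation_ratio(cov):
    """(sum of eigenvalues)^2 / (sum of squared eigenvalues): a rough count of
    how many function-space directions carry most of the prior variance."""
    eig = np.clip(np.linalg.eigvalsh(cov), 0.0, None)  # clip tiny negative round-off
    return eig.sum() ** 2 / (eig ** 2).sum()

n_params = 50
flat = function_space_covariance(np.ones(n_params))                       # every weight equally free
decaying = function_space_covariance(0.5 ** (np.arange(n_params) / 2.0))  # variance halves per frequency

print("parameters in each model:", n_params)
print("participation ratio, flat prior:    ", round(participation_ratio(flat), 2))
print("participation ratio, decaying prior:", round(participation_ratio(decaying), 2))
```

On this grid the cosine features are mutually orthogonal. The flat prior therefore spreads its variance almost evenly over all 50 directions, giving a ratio of about 49. The decaying prior concentrates its variance in roughly two directions, even though the parameter count is the same.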

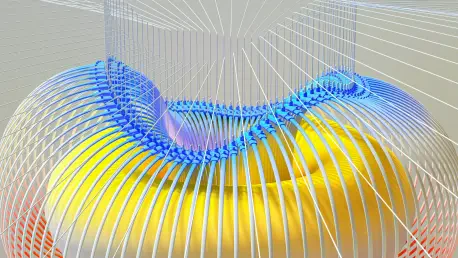

This redefined understanding suggests a philosophy of model design that mirrors the world itself, which is complex in its manifestations yet often governed by simple underlying principles. Bayesian methods are highlighted as a powerful tool for achieving this balance. Instead of committing to a single setting of the parameters, they marginalize: they integrate over every setting, weighted by its probability. For model comparison, the resulting marginal likelihood rewards models that explain the observed data well without spreading their prior probability over too many other possible datasets. It thus weighs flexibility against simplicity and automatically penalizes needlessly intricate solutions. This works across data regimes, from small samples to large datasets, without constant manual retuning. Because the prior makes explicit what the modeler believes about the world, such methods yield systems flexible enough to capture nuanced patterns yet grounded enough to avoid spurious correlations, improving both robustness and practical utility.
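The Occam effect of marginalization can be made explicit for Bayesian polynomial regression, one of the few models where the marginal likelihood has a closed form. The sketch below assumes Gaussian noise with known variance, an isotropic Gaussian prior on the weights, and synthetic quadratic data. The function names and all numbers are hypothetical. `log_evidence` integrates the weights out directly. `evidence_decomposition` rewrites the same quantity as the best-fit log-likelihood plus an Occam factor that is never positive, so extra flexibility has to pay for itself with a better fit.

```python
import numpy as np

def design_matrix(x, degree):
    """Polynomial features 1, x, ..., x^degree."""
    return np.vander(x, degree + 1, increasing=True)

def log_evidence(x, y, degree, prior_var=1.0, noise_var=0.01):
    """log p(y | degree) with weights integrated out: y ~ N(0, noise_var*I + prior_var*Phi Phi^T)."""
    phi = design_matrix(x, degree)
    cov = noise_var * np.eye(len(x)) + prior_var * phi @ phi.T
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (y @ np.linalg.solve(cov, y) + logdet + len(x) * np.log(2 * np.pi))

def evidence_decomposition(x, y, degree, prior_var=1.0, noise_var=0.01):
    """Same quantity as log_evidence, split into (best-fit log-likelihood, Occam factor)."""
    phi = design_matrix(x, degree)
    k = degree + 1
    # Gaussian posterior over the weights: covariance S, mean m.
    S = np.linalg.inv(phi.T @ phi / noise_var + np.eye(k) / prior_var)
    m = S @ phi.T @ y / noise_var
    resid = y - phi @ m
    fit = -0.5 * (resid @ resid / noise_var + len(x) * np.log(2 * np.pi * noise_var))
    log_prior_at_m = -0.5 * (m @ m / prior_var + k * np.log(2 * np.pi * prior_var))
    _, logdet_S = np.linalg.slogdet(S)
    # p(y) = p(y|m) p(m) / p(m|y), and log p(m|y) = -0.5 * log det(2*pi*S)
    occam = log_prior_at_m + 0.5 * (logdet_S + k * np.log(2 * np.pi))
    return fit, occam

rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 30)
y = 1.0 - 2.0 * x + 3.0 * x**2 + 0.1 * rng.standard_normal(x.size)  # quadratic truth plus noise

for degree in range(9):
    fit, occam = evidence_decomposition(x, y, degree)
    print(f"degree {degree}: fit {fit:9.2f}  occam {occam:7.2f}  "
          f"evidence {log_evidence(x, y, degree):9.2f}")
```

The printed table lets you compare how the fit term and the Occam factor change as the degree grows. The exact values depend on the seed and on the assumed prior and noise variances.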

Implications for Future AI Development

The broader implications of questioning the bias-variance trade-off reach across the AI research community. Resistance to overturning established doctrine is natural, yet challenging outdated assumptions is vital for progress. Misconceptions about the trade-off may have hindered advances by discouraging the exploration of highly expressive models. By embracing the benefits of scale and integrating alternative perspectives such as Bayesian frameworks, the field stands to build more capable systems. This shift in thinking is not yet universally accepted. Still, it reflects a growing diversity of thought in machine learning, where empirical evidence from deep learning research increasingly suggests that larger models, carefully designed, can help rather than hurt.

Looking ahead, the debate offers a roadmap for AI development. Treating the bias-variance trade-off as a context-dependent heuristic rather than an immutable law changes how complexity is approached. Double descent and benign overfitting show that scaling up can bring unexpected benefits. The natural next steps are to adopt complexity measures based on the functions a model favors rather than its parameter count, and to build models that pair rich expressiveness with a preference for simple explanations, much like the world they describe. Continued experimentation and dialogue along these lines should keep machine learning breaking new ground in building impactful, intelligent technologies.