I’m thrilled to sit down with Laurent Giraid, a renowned technologist whose expertise in artificial intelligence has made him a sought-after voice in the field. With a deep focus on machine learning, natural language processing, and the ethical dimensions of AI, Laurent brings a unique perspective to the latest developments shaking up the industry. Today, we’re diving into the groundbreaking release of a new AI model that’s turning heads worldwide. Our conversation explores the technical innovations behind this system, its impact on global competition, the implications of open-source strategies, and how it’s reshaping the economics and accessibility of cutting-edge AI technology for businesses and developers alike.

Can you walk us through the significance of this latest AI model release and why it’s creating such a buzz in the tech community?

Absolutely, Daniel. This new model, with its massive scale of 685 billion parameters, represents a leap forward in AI capabilities. It’s not just about size, though; it’s about how it matches or even surpasses the performance of some of the most advanced proprietary systems out there. What’s really exciting is its open-source nature, which breaks down barriers and makes high-level AI accessible to developers and researchers globally. This release is a big deal because it challenges the status quo, showing that world-class innovation doesn’t have to be locked behind paywalls.

What do you think sets this model apart from other AI systems currently on the market?

One of the standout features is its hybrid architecture. Unlike earlier attempts that struggled to balance different functions, this model seamlessly integrates chat, reasoning, and coding capabilities into a single system. It’s also incredibly efficient, handling massive context windows—think the equivalent of a 400-page book—while maintaining impressive speed. Add to that its benchmark performance, like scoring 71.6% on coding tasks, and you’ve got a system that’s not just powerful but practical for real-world applications.

How does the background of the company behind this model influence their approach to AI development, especially compared to their American counterparts?

Based in China, the company seems to prioritize accessibility and rapid adoption over strict control of intellectual property, in contrast to many American firms that guard their technology closely. Its strategy leans heavily on open-source distribution, in line with a broader philosophy of treating AI as a public good that spurs innovation. American firms, by comparison, tend to focus on monetization through restricted access and premium pricing, which reflects a fundamental divide in how technology is viewed and shared.

Let’s dive into the performance metrics. How does this model’s ability to process vast amounts of context impact its usability for developers and businesses?

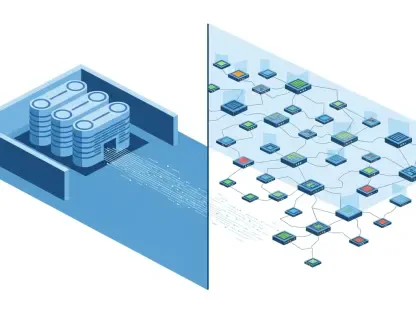

Processing up to 128,000 tokens of context is a game-changer. It means the model can handle incredibly complex tasks—like analyzing long documents or maintaining coherence over extended conversations—without losing track of details. For developers, this opens up possibilities for building applications that require deep understanding, such as advanced legal or technical analysis tools. For businesses, it translates to more reliable outputs in scenarios where context is critical, reducing errors and enhancing productivity.
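
To give a rough sense of what a 128,000-token window means in practice, the sketch below estimates whether a document fits. The ratio of 4 characters per token is a common rule of thumb for English text and an assumption here, not this model's actual tokenizer. The names `estimate_tokens` and `fits_in_context`, and the reserved reply budget, are hypothetical and chosen only for illustration.

```python
CONTEXT_WINDOW = 128_000   # context size quoted in the interview
CHARS_PER_TOKEN = 4        # assumption: rough heuristic for English text


def estimate_tokens(text: str) -> int:
    """Approximate the token count from character length (heuristic only)."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """Check whether the text plus a reserved reply budget fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW


if __name__ == "__main__":
    document = "word " * 90_000  # about 450,000 characters of placeholder text
    print(estimate_tokens(document), fits_in_context(document))
```

A real application would count tokens with the model's own tokenizer, since the true ratio varies by language and content.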

The cost efficiency of this model has been a hot topic. Can you explain how its pricing structure could reshape the economics of AI adoption for enterprises?

Certainly. At roughly $1.01 per coding task, this model undercuts competitors that charge up to 68 times more for similar workloads. For enterprises running thousands of AI interactions daily, those savings add up to millions of dollars. It lowers the financial barrier to adopting cutting-edge AI, allowing smaller companies or startups to compete with larger players. This could democratize access to powerful tools, fundamentally shifting how businesses budget for and integrate AI solutions.
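
The arithmetic behind that claim can be sketched as follows. The $1.01 figure and the 68x multiple come from the interview; the workload of 1,000 coding tasks per day is a hypothetical assumption, and the multiple is an upper bound ("up to"), so the result is a best-case estimate rather than a measured saving.

```python
COST_PER_TASK = 1.01       # USD per coding task, as quoted in the interview
COMPETITOR_MULTIPLE = 68   # "up to 68 times more": an upper bound, not an average


def annual_savings(tasks_per_day: int, days: int = 365) -> float:
    """Best-case yearly savings versus a competitor priced at the full multiple."""
    competitor_cost = COST_PER_TASK * COMPETITOR_MULTIPLE
    return tasks_per_day * days * (competitor_cost - COST_PER_TASK)


if __name__ == "__main__":
    # Hypothetical workload: 1,000 coding tasks per day.
    print(f"${annual_savings(1_000):,.0f} per year")
```

Under these assumptions the difference comes to roughly $24.7 million a year, which is consistent with the "millions of dollars" figure for workloads in the thousands of tasks per day.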

There’s been talk about innovative features like special tokens for web search and internal reasoning. How do these enhancements improve the model’s functionality?

These special tokens are a brilliant touch. The web search capability allows the model to pull in real-time information, making its responses more current and relevant, which is crucial for dynamic tasks. The internal reasoning tokens, on the other hand, enable the model to think through problems step-by-step before answering, improving accuracy on complex queries. Together, they make the system more versatile and reliable, addressing longstanding challenges in hybrid AI models.
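
As an illustration of how an application might handle internal reasoning tokens, the sketch below separates a reasoning span from the final answer in raw model output. The interview does not name the actual tokens, so the `<think>` and `</think>` delimiters and the `split_reasoning` helper are hypothetical stand-ins, not the model's documented format.

```python
import re

# Hypothetical delimiters: the interview does not name the real tokens.
THINK_OPEN = "<think>"
THINK_CLOSE = "</think>"

_PATTERN = re.compile(
    re.escape(THINK_OPEN) + r"(.*?)" + re.escape(THINK_CLOSE),
    re.DOTALL,  # let the reasoning span cover multiple lines
)


def split_reasoning(raw: str) -> tuple[str, str]:
    """Return (reasoning, answer) extracted from raw model output."""
    reasoning = "\n".join(part.strip() for part in _PATTERN.findall(raw))
    answer = _PATTERN.sub("", raw).strip()
    return reasoning, answer


if __name__ == "__main__":
    raw_output = "<think>2 + 2 equals 4.</think>The answer is 4."
    print(split_reasoning(raw_output))
```

Keeping the reasoning separate lets an application log or hide the step-by-step work while showing users only the final answer.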

How do you see the open-source strategy behind this release influencing the broader AI industry, especially in terms of competition?

Open-source distribution flips the traditional AI business model on its head. By making frontier-level capabilities freely available, it accelerates global adoption and puts pressure on proprietary systems to justify their high costs. It fosters a more collaborative environment where innovation isn’t siloed within a few big players. However, it also challenges competitors to innovate faster or risk losing market share, potentially leading to a race where accessibility, not just power, becomes the key differentiator.

What’s your forecast for the future of AI competition, especially with open-source models gaining such traction?

I believe we’re heading toward a landscape where the focus shifts from building the most powerful AI to making that power widely accessible. Open-source models like this one are leveling the playing field, enabling smaller teams and even individual developers to contribute to and benefit from cutting-edge tech. This democratization will likely drive rapid advancements, but it also raises questions about sustainable business models for AI companies. In the next few years, I expect to see a blend of open and closed systems coexisting, with competition centering on user experience, customization, and ethical considerations as much as raw performance.