The digital detritus of modern work, scattered across countless folders and file formats, has long presented a challenge beyond the reach of simple organizational tools, but a new class of artificial intelligence is now poised to step out of the chat window and directly into this chaos. For years, AI assistants have been confined to conversational interfaces, capable of generating text, answering questions, and even writing code, but always at an arm’s length from the user’s actual workspace. That separation is now dissolving, raising a fundamental question about the future of productivity: what happens when an AI is granted the keys to the kingdom, with direct access to read, edit, and create files on a personal computer? This transition from a passive conversationalist to an active participant marks a significant evolutionary leap, promising to automate tedious tasks while introducing a new dimension of trust and risk into the human-computer relationship. The industry is on the cusp of this new reality, and its implications are just beginning to unfold.

From Conversational AI to a Hands-On Digital Assistant

The central proposition of this emerging technology is to give an AI assistant access not just to a chat window but to the file system itself. While current AI chatbots can analyze text or data that is manually fed into them, their inability to interact directly with a local environment has been a significant limitation. The prospect of an AI that can autonomously navigate a folder structure, identify relevant documents, and execute multi-step tasks represents a paradigm shift from a tool that responds to a partner that acts. This evolution requires users to rethink how they interact with AI. The model moves from command-and-response to delegation and supervision, in which the AI operates with a degree of autonomy within a defined workspace.

Anthropic’s new agent, known as Cowork, serves as a prime example of this next-generation capability. It is specifically designed to perform a wide range of non-technical tasks by operating directly within a user’s local folders. Unlike its predecessors, which were limited to processing information provided by the user, Cowork can be instructed to sort a cluttered downloads folder, synthesize information from multiple documents, or even create new files based on its analysis. This capability effectively transforms the AI from a knowledgeable consultant into a hands-on digital coworker, capable of managing the administrative and organizational burdens that consume a significant portion of the modern workday. The introduction of such agents signals a deliberate move toward more integrated and practical AI applications.

A Strategic Shift Toward Practical, Task-Oriented AI

The emergence of AI agents like Cowork signals a critical market inflection point, heralding a move beyond AI products engineered primarily for text generation. While the ability to draft an email or write a poem captured the public’s imagination, the long-term strategic focus for AI developers is shifting toward systems that can execute real-world tasks. The true value, particularly in an enterprise context, lies not just in generating content but in automating the complex, often tedious workflows that underpin business operations. This pivot from conversational prowess to practical execution is reshaping the competitive landscape, pushing companies to develop AI that can do more than just talk.

This new competitive arena places Anthropic’s agentic AI in direct competition with established technology giants like Microsoft and Google. Microsoft has been steadily integrating its Copilot assistant across the Windows operating system, aiming to create a ubiquitous AI layer that assists with a variety of tasks. Similarly, Google continues to embed AI functionality within its productivity suite. Anthropic’s strategy, however, appears more focused and granular. Rather than a complete operating-system overhaul, it offers a specialized agent that operates within a controlled environment. This approach may appeal to users seeking powerful automation without ceding full control to an OS-level AI.

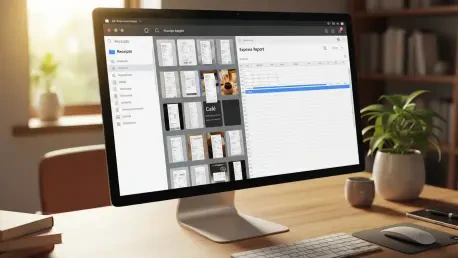

The core argument for this strategic direction is that AI able to manage entire workflows autonomously, from start to finish, will unlock profound business value. A chatbot that can offer advice on creating an expense report is useful. An AI agent that can independently open a folder containing dozens of receipt screenshots, extract the relevant data from each image, and compile it into a well-structured spreadsheet is transformative. Companies are betting on this level of end-to-end automation because it promises not just incremental efficiency gains but a fundamental reimagining of how administrative and organizational work is performed.

Inside the Sandbox: How an AI Agent Interacts with Your Machine

The architecture of Cowork is built upon a foundation of user trust and controlled access. Instead of granting the AI unrestricted permission to roam a computer’s file system, users designate a specific, sandboxed folder for it to operate within. This folder-based system acts as a digital container, allowing the AI agent to read existing files, modify them as instructed, or create entirely new ones, all while being confined to a clearly defined boundary. This design choice is a crucial security and comfort measure, providing users with the confidence that the AI’s actions will not spill over into sensitive or unrelated parts of their local machine, thereby striking a balance between powerful functionality and user control.
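To make the idea of a folder boundary concrete, here is a minimal Python sketch of how a tool *might* confine file operations to one designated directory. It is an illustrative assumption, not a description of Cowork's actual implementation. The names `resolve_in_sandbox` and `SandboxViolation` are hypothetical. The core check resolves every requested path and refuses anything that lands outside the chosen folder.

```python
from __future__ import annotations

from pathlib import Path


class SandboxViolation(Exception):
    """Raised when a requested path would fall outside the designated folder."""


def resolve_in_sandbox(root: str | Path, requested: str | Path) -> Path:
    """Hypothetical helper: map a requested path to an absolute path inside `root`.

    Both paths are resolved first, which collapses '..' segments and follows
    any symlinks that already exist, so a request like 'notes/../../secret.txt'
    or an absolute path elsewhere on disk is rejected.
    """
    root_path = Path(root).resolve()
    candidate = (root_path / requested).resolve()
    if not candidate.is_relative_to(root_path):  # Path.is_relative_to needs Python 3.9+
        raise SandboxViolation(f"{str(requested)!r} is outside {root_path}")
    return candidate


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as workspace:
        print(resolve_in_sandbox(workspace, "receipts/march.png"))  # allowed
        try:
            resolve_in_sandbox(workspace, "../Documents/taxes.pdf")
        except SandboxViolation as err:
            print("blocked:", err)
```

Funnelling every read, write, and create through a single check like this keeps the boundary in one place. Individual operations do not each have to remember to enforce it.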

At the heart of its operation is a process known as the “agentic loop,” which distinguishes it from simpler AI models. When assigned a task, the AI does not merely generate a single response; it formulates a comprehensive plan, executes the necessary steps, and, critically, checks its own work for accuracy and completeness. If it encounters ambiguity or reaches a point where it requires further direction, it proactively asks the user for clarification before proceeding. This iterative cycle of planning, acting, and verifying allows the system to handle more complex, multi-stage tasks with a higher degree of reliability, making the interaction feel less like a rigid command-line interface and more like a collaborative exchange with a capable assistant.
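The plan, act, verify, and ask cycle described above can be sketched as a small control loop. This is a toy under stated assumptions. The `Step` class, `run_agentic_loop`, and the `ask_user` callback are hypothetical names. The plan, actions, and checks are plain Python functions standing in for what a model would generate, and nothing here reflects how Anthropic actually implements Cowork.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Step:
    """One step of a plan. In a real agent, a model would produce these."""
    description: str
    act: Callable[[], Any]         # performs the step and returns a result
    verify: Callable[[Any], bool]  # checks whether the result is acceptable


def run_agentic_loop(
    plan: list[Step],
    ask_user: Callable[[str], bool],
    max_attempts: int = 2,
) -> list[str]:
    """Act on each step, verify the result, retry, and escalate to the user.

    Returns a log of what happened; stops early if the user says not to continue.
    """
    log: list[str] = []
    for step in plan:
        for attempt in range(1, max_attempts + 1):
            result = step.act()
            if step.verify(result):
                log.append(f"ok: {step.description}")
                break
            log.append(f"failed attempt {attempt}: {step.description}")
        else:
            # Verification never passed: ask for direction instead of guessing.
            if not ask_user(f"Could not verify '{step.description}'. Skip it and continue?"):
                log.append(f"stopped at: {step.description}")
                return log
            log.append(f"skipped with approval: {step.description}")
    return log


if __name__ == "__main__":
    files = ["b.txt", "a.txt"]
    plan = [
        Step("sort the file list", lambda: sorted(files),
             lambda r: r == ["a.txt", "b.txt"]),
        Step("find a PDF", lambda: [f for f in files if f.endswith(".pdf")],
             lambda r: len(r) > 0),
    ]
    for entry in run_agentic_loop(plan, ask_user=lambda question: False):
        print(entry)
```

In this toy the actions are deterministic, so a retry simply repeats the same failure. In an agent backed by a model, a retry would typically revise the approach before escalating to the user.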

The practical applications of this technology are both diverse and immediately impactful. For instance, a user could direct the agent to organize a chaotic downloads folder, where it would intelligently sort files by type, date, or content and apply a consistent naming convention. In another scenario, it could be given a folder of receipt images and tasked with generating a detailed expense report in a spreadsheet format. It can also sift through scattered notes across multiple text documents to draft a coherent, unified report. Furthermore, its capabilities are not limited to the local folder. Through integration with data connectors for services like Notion and Asana, and by leveraging browser automation, Cowork can interact with external websites and platforms, extending its utility beyond the desktop and into the broader digital ecosystem.
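For the downloads-folder example, a hand-written Python approximation of "sort by type and apply a consistent naming convention" might look like the following. The extension-to-category map, the `YYYY-MM-DD_lowercase-words.ext` naming scheme, and the `organize` function are all hypothetical choices made for illustration. An AI agent could also classify files by content, which this sketch does not attempt.

```python
from __future__ import annotations

import shutil
from datetime import datetime
from pathlib import Path

# Hypothetical extension-to-folder map; a real agent might also inspect contents.
CATEGORIES = {
    ".pdf": "documents", ".docx": "documents", ".txt": "documents",
    ".png": "images", ".jpg": "images", ".jpeg": "images",
    ".csv": "spreadsheets", ".xlsx": "spreadsheets",
    ".zip": "archives", ".dmg": "installers",
}


def normalized_name(path: Path) -> str:
    """Hypothetical convention: 'YYYY-MM-DD_lowercase-words.ext' using the modified date."""
    modified = datetime.fromtimestamp(path.stat().st_mtime).strftime("%Y-%m-%d")
    stem = "-".join(path.stem.lower().split())
    return f"{modified}_{stem}{path.suffix.lower()}"


def organize(folder: Path, dry_run: bool = True) -> list[tuple[Path, Path]]:
    """Plan moves of top-level files into category subfolders; move them only if dry_run is False."""
    moves: list[tuple[Path, Path]] = []
    planned: set[Path] = set()
    for item in sorted(folder.iterdir()):
        if not item.is_file() or item.name.startswith("."):
            continue  # leave subfolders and hidden files alone
        category = CATEGORIES.get(item.suffix.lower(), "other")
        name = normalized_name(item)
        target = folder / category / name
        n = 1
        while target.exists() or target in planned:  # never overwrite an existing file
            target = folder / category / f"{Path(name).stem}-{n}{Path(name).suffix}"
            n += 1
        planned.add(target)
        moves.append((item, target))
    if not dry_run:
        for source, target in moves:
            target.parent.mkdir(exist_ok=True)
            shutil.move(str(source), str(target))
    return moves
```

With the default `dry_run=True`, calling `organize(Path.home() / "Downloads")` only returns the planned `(source, target)` pairs. A user could review that list before rerunning with `dry_run=False`. Separating the plan from its execution is one simple way to keep a human in the supervisory role.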

The Remarkable Origin of an AI That Helped Build Itself

The development of Cowork was not the result of a top-down corporate mandate but rather an unexpected discovery rooted in user behavior. Anthropic had previously released Claude Code, a terminal-based tool designed to help software engineers automate programming tasks. While the tool proved highly successful with its target audience, the company began to notice a peculiar and growing trend: developers were creatively repurposing the coding assistant for a wide array of non-coding activities. They were using a tool built for software development to research vacations, clean out their email inboxes, and even manage personal projects, stretching it well beyond its intended purpose to serve broader productivity needs.

This emergent user behavior was a clear signal to the company that there was an unmet demand for agentic AI among a non-technical audience. Boris Cherny, an engineer at Anthropic, commented on the “diverse and surprising” use cases that were observed, which ranged from building slide decks to recovering wedding photos from a corrupted hard drive. The company realized that the underlying AI agent was far more capable than its developer-focused interface suggested. This insight prompted the creation of Cowork, which essentially packages the powerful agentic engine of Claude Code into a more accessible, user-friendly interface designed for anyone, not just those comfortable with a command line.

Perhaps the most compelling aspect of Cowork’s origin story is the report that the Anthropic team built the entire feature in approximately a week and a half, largely by using Claude Code itself. This created a recursive feedback loop in which an AI tool was instrumental in building its own consumer-facing successor. An AI system that meaningfully speeds up its own development and expansion has significant implications. If the tools themselves become active participants in their own creation, the pace of AI innovation could accelerate dramatically. That dynamic may also give a substantial competitive advantage to organizations that can effectively harness their own AI to build the next generation of products.

A Guide to Opportunities, Risks, and Availability

The shift from a chatbot that suggests changes to an agent that directly makes them introduces a new category of risks, and Anthropic has been unusually transparent about them. In its launch materials, the company explicitly warns that Cowork has the potential to take “destructive actions (such as deleting local files)” if it misinterprets a user’s instructions. This candid admission highlights the inherent dangers of granting an AI direct file system access. Because the technology is not infallible and can misunderstand context or nuance, users are strongly urged to provide clear, unambiguous guidance, especially when instructing the agent to perform sensitive operations like file deletion or modification.
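One common way to soften the risk of accidental deletion is to make destructive operations both explicitly confirmed and recoverable. The following Python sketch shows that pattern as an assumption, not as a description of how Cowork behaves. The `guarded_delete` function, its `confirm` callback, and the trash-folder approach are hypothetical. Instead of deleting, the function moves files into a trash folder, and only after the full list has been approved.

```python
from __future__ import annotations

import shutil
from pathlib import Path
from typing import Callable


def guarded_delete(
    files: list[Path],
    trash_dir: Path,
    confirm: Callable[[str], bool],
) -> list[Path]:
    """Hypothetical safeguard: "delete" by moving files into a recoverable trash folder.

    Nothing is touched unless `confirm` approves the full list up front.
    Returns the new locations of the moved files, or [] if nothing was moved.
    """
    targets = [f for f in files if f.is_file()]
    if not targets:
        return []
    listing = "\n".join(f"  {f}" for f in targets)
    if not confirm(f"About to remove {len(targets)} file(s):\n{listing}\nProceed?"):
        return []
    trash_dir.mkdir(parents=True, exist_ok=True)
    moved: list[Path] = []
    for f in targets:
        dest = trash_dir / f.name
        n = 1
        while dest.exists():  # keep both copies if a same-named file was trashed before
            dest = trash_dir / f"{f.stem}-{n}{f.suffix}"
            n += 1
        shutil.move(str(f), str(dest))
        moved.append(dest)
    return moved
```

In an interactive tool, `confirm` could be wired to a dialog that shows the listed files. Passing `lambda message: False` leaves every file untouched. Because files land in a trash folder rather than being unlinked, a misread instruction can still be undone.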

Beyond accidental damage, the rise of agentic AI presents more complex security challenges, chief among them the threat of malicious prompt injection attacks. This technique involves embedding hidden, harmful instructions within content that the AI might process from an external source, such as a website or a document. A successful attack could trick the agent into bypassing its safety protocols and performing unauthorized actions on the user’s behalf. While Anthropic states that it has built sophisticated defenses against such attacks, it also acknowledges that agent safety remains an active and evolving area of research for the entire industry. These risks are not unique to Cowork, but its capabilities make it one of the first mainstream tools to bring these theoretical dangers into the user’s immediate workspace.

Currently, access to Cowork is limited to a research preview available exclusively to Claude Max subscribers who use the macOS desktop application. Users on other subscription tiers can join a waitlist for future access. Anthropic has outlined a clear roadmap for expansion, with plans to incorporate cross-device synchronization and develop a version for Windows users, pending feedback from the initial rollout. This phased approach allows the company to gather real-world data on how the agent is used and refine its safety features before a wider release. Ultimately, the successful adoption of this technology will depend on more than just the intelligence of the AI model. The decisive factors will be its seamless integration into existing workflows and, most importantly, the company’s ability to earn and maintain the trust of users who are being asked to delegate tasks to their new AI coworker.

The transition from purely conversational AI to action-oriented agents marks a pivotal moment in the evolution of human-computer interaction. The development of tools like Cowork illustrates a fundamental shift in design philosophy, away from systems that simply provide information and toward those that actively participate in completing tasks. This change requires a new social contract between users and their technology, one built on a delicate balance of delegated autonomy and vigilant oversight.

The astonishing speed at which Cowork was reportedly developed, with an AI tool aiding in its own creation, offers a powerful glimpse of a future of compounding technological advancement. This recursive dynamic suggests that the capabilities of these systems could grow at a rate that continually challenges the capacity of organizations and society to adapt. The era of the AI coworker has begun, and it brings not only the promise of unprecedented productivity but also a new and profound set of responsibilities for its human supervisors. The central challenge has shifted from teaching an AI to understand our world to learning how to manage its growing presence within it effectively and safely.