As the landscape of enterprise software continues to evolve with artificial intelligence at its core, few are better positioned to unpack these changes than Laurent Giraid, a renowned technologist with deep expertise in AI, machine learning, and natural language processing. With a keen focus on the ethical implications of AI, Laurent offers a unique perspective on how organizations can harness this technology while navigating the complex challenges of data management, governance, and security. In this engaging conversation with Daniel Mairly, Laurent dives into the critical issues surrounding enterprise AI adoption, the staggering failure rates of AI projects, and the innovative tools and strategies that aim to build trust and deliver real business value. From tackling fragmented data systems to ensuring ethical AI deployment, this interview explores the intersection of technology and responsibility in today’s corporate world.

Can you start by shedding light on what a ‘trust layer’ means in the context of enterprise AI, and why it’s becoming a cornerstone for successful deployment?

Absolutely, Daniel. A trust layer in enterprise AI refers to a foundational framework that ensures AI systems operate with accuracy, security, and compliance. It’s about embedding safeguards into every step of AI deployment—think of it as a safety net that addresses data integrity, protects sensitive information, and aligns with regulatory standards. Right now, it’s critical because so many AI projects are failing—over 80% don’t deliver value, often due to fragmented data or weak governance. Without trust, companies can’t scale AI confidently; they risk errors, breaches, or even legal issues. This layer is what allows businesses to move from cautious experimentation to transformative, reliable use of AI.

What do you see as the primary reasons behind the high failure rate of AI initiatives in large organizations?

The failure rate is indeed alarming, and it boils down to a few core issues. First, many organizations have siloed or poor-quality data, which is a disaster for AI since these systems are only as good as the information they’re trained on. Then there’s the lack of robust governance—without clear policies, AI can produce biased or unreliable outputs. Additionally, fragmented systems make integration a nightmare; AI can’t function seamlessly if it’s not connected across platforms. Beyond tech, there’s often a gap in skills or executive support. If teams aren’t trained or leadership isn’t aligned on AI’s value, projects stall. It’s a mix of technical and cultural hurdles that companies must overcome.

Let’s dive into some of the innovative solutions being developed. How does context indexing for unstructured data work, and what kind of impact can it have on business operations?

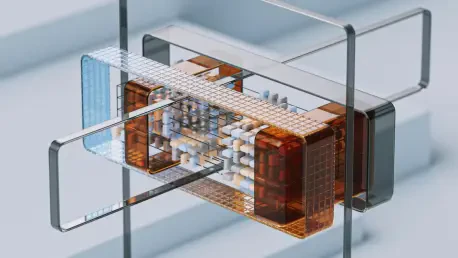

Context indexing is a game-changer for handling unstructured data like contracts, diagrams, or reports. Essentially, it’s a method to help AI interpret these complex documents by understanding the specific business context they exist in. It’s not just about reading the text—it’s about grasping the meaning behind terms, relationships, or visuals in a way that’s relevant to the organization. For operations, this means faster, more accurate decision-making. Imagine a field engineer uploading a technical schematic and getting AI-guided troubleshooting instantly. It saves time, reduces errors, and empowers employees with insights they might not have accessed otherwise.

Another interesting development is the concept of data clean rooms. Can you explain how they function to safeguard sensitive information while enabling collaboration?

Data clean rooms are secure environments where organizations can share and analyze data with partners without exposing sensitive details. They use technologies like zero-copy, which means data isn’t duplicated or moved; it’s accessed in a controlled way, minimizing risk. This speeds up collaboration significantly while maintaining privacy. For instance, in industries like banking, clean rooms can help detect fraud by allowing institutions to analyze patterns with partners in hours instead of weeks. Clean rooms are often associated with advertising, but sectors like healthcare or retail could use them to improve patient outcomes or personalize customer experiences, all while keeping data secure.
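
To make the idea concrete, here is a minimal Python sketch of one pattern commonly used in clean rooms: partners can ask only aggregate questions, and results for very small groups are suppressed. The function name, the row fields, and the threshold of 3 are illustrative assumptions rather than anything described in the interview, and the sketch does not model zero-copy access itself.

```python
from collections import Counter

# Hypothetical privacy threshold; real deployments choose their own value.
MIN_GROUP_SIZE = 3


def flagged_account_counts(party_a_rows, party_b_rows, min_group_size=MIN_GROUP_SIZE):
    """Count accounts flagged by both parties, per region.

    Each row is a dict with 'account_hash', 'region', and 'flagged' keys
    (an assumed schema). Only aggregate counts are returned; row-level
    records never leave this function.
    """
    flagged_by_b = {r["account_hash"] for r in party_b_rows if r["flagged"]}
    counts = Counter(
        r["region"]
        for r in party_a_rows
        if r["flagged"] and r["account_hash"] in flagged_by_b
    )
    # Suppress groups too small to report without risking re-identification.
    return {region: n for region, n in counts.items() if n >= min_group_size}
```

In this sketch, each party sees only how many overlapping flagged accounts exist per region, never the other side's individual records.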

Standardizing business terms seems to be a persistent challenge. How can AI-driven semantic layers help ensure consistency across an enterprise?

Semantic layers powered by AI tackle the chaos of inconsistent business terminology by translating raw data into standardized definitions that everyone in the organization understands. Terms like ‘annual contract value’ or ‘churn’ can mean different things across departments, and if AI misinterprets them, you get flawed insights. A semantic layer ensures AI speaks the company’s language, aligning data across systems. This is huge for collaboration—sales, marketing, and finance can all work from the same playbook, reducing confusion and driving better decisions. It’s about creating a single source of truth for the entire enterprise.
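
As a rough illustration of the mapping described above, the following Python sketch resolves department-specific terms to one governed definition. The alias table, the metric names, and the wording of the definitions are hypothetical placeholders; a real semantic layer would hold definitions agreed on by the business and would typically bind them to queries as well.

```python
# Hypothetical governed definitions; the wording is a placeholder.
CANONICAL_METRICS = {
    "annual_contract_value": "Contract value normalized to a one-year period",
    "churn_rate": "Customers lost in a period divided by customers at its start",
}

# Hypothetical aliases used by different departments.
ALIASES = {
    "acv": "annual_contract_value",
    "annual contract value": "annual_contract_value",
    "churn": "churn_rate",
    "attrition": "churn_rate",
}


def resolve_term(term):
    """Map a free-form business term to its canonical name and definition."""
    key = term.strip().lower()
    # Fall back to a snake_case version of the term if no alias matches.
    canonical = ALIASES.get(key, key.replace(" ", "_"))
    if canonical not in CANONICAL_METRICS:
        raise KeyError(f"No governed definition for {term!r}")
    return canonical, CANONICAL_METRICS[canonical]
```

Under these assumptions, `resolve_term("ACV")` and `resolve_term("annual contract value")` return the same canonical entry, while a term with no governed definition raises an error instead of being guessed at.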

I’ve heard the term ‘agent sprawl’ in relation to AI. What does this mean, and why is it a growing concern for large companies?

Agent sprawl refers to the uncontrolled proliferation of AI agents—think virtual assistants or automated tools—across different platforms and vendors within an organization. It’s a concern because, without centralized oversight, you end up with a mess of disconnected agents that don’t communicate or align with company goals. This can lead to inefficiencies, security gaps, and governance issues. For large companies, managing this sprawl is critical to avoid duplicated efforts or rogue AI actions. Centralized solutions that orchestrate these agents help ensure they work together, adhere to policies, and deliver cohesive value rather than chaos.
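
One way to picture centralized oversight is a single registry that every agent must pass through before it is deployed. The Python sketch below is a simplified assumption of how such a registry might look; the class name, the action names, and the policy model are all hypothetical and do not describe any particular vendor's orchestration product.

```python
from dataclasses import dataclass, field


@dataclass
class AgentRegistry:
    """Hypothetical central registry that applies one policy to all agents."""

    allowed_actions: set
    agents: dict = field(default_factory=dict)

    def register(self, name, vendor, actions):
        """Admit an agent only if every action it requests is allowed."""
        disallowed = set(actions) - self.allowed_actions
        if disallowed:
            raise ValueError(f"{name} requests disallowed actions: {sorted(disallowed)}")
        if name in self.agents:
            raise ValueError(f"{name} is already registered")
        self.agents[name] = {"vendor": vendor, "actions": set(actions)}

    def overlapping(self):
        """Return pairs of agents that share an action (possible duplicated effort)."""
        names = sorted(self.agents)
        return [
            (a, b)
            for i, a in enumerate(names)
            for b in names[i + 1:]
            if self.agents[a]["actions"] & self.agents[b]["actions"]
        ]
```

A registry like this gives one place to see which agents exist, which vendor they come from, and where their responsibilities overlap.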

Looking at the competitive landscape, how do you think integrated AI platforms stack up against standalone solutions for enterprise needs?

Integrated AI platforms have a clear edge over standalone solutions, especially for enterprises. When AI is built into a broader platform, it’s natively connected to existing workflows, data sources, and security protocols. This seamless integration reduces the need for custom development, which standalone solutions often require; think of the time and cost of hiring developer teams to stitch everything together. Integrated platforms offer a plug-and-play experience with built-in governance and scalability. Standalone tools can be more specialized, but for most large organizations, the holistic approach of an integrated system better addresses the complexity of enterprise AI challenges.

As we wrap up, what is your forecast for the future of enterprise AI adoption over the next few years?

I’m cautiously optimistic about the future of enterprise AI. Over the next few years, I expect we’ll see a shift from experimentation to more mature, production-scale deployments, but it won’t happen overnight. The focus on trust, governance, and data quality will intensify as companies learn from past failures. We’ll likely see tighter regulations pushing for ethical AI use, which could slow some initiatives but ultimately build confidence. Adoption will vary—some industries like tech and finance will race ahead, while others may lag due to resource constraints. The key will be platforms that simplify complexity and prove real ROI. If done right, AI could fundamentally transform how businesses operate, but it’s going to take patience and persistent effort to get there.