As artificial intelligence becomes woven into the fabric of critical business operations, the silent threat of a model outage has transformed from a technical inconvenience into a potential catastrophe for revenue and reputation. In response to this growing vulnerability, enterprise AI infrastructure company TrueFoundry has unveiled TrueFailover, a sophisticated resilience layer engineered to safeguard businesses against the increasingly common and costly disruptions from AI model and provider failures. The launch marks a pivotal moment in the evolution of AI, signaling its transition from an experimental technology confined to research labs into an indispensable utility that, like electricity or cloud computing, demands unwavering reliability. This new solution addresses the urgent need for robust uptime guarantees that currently outpace the native capabilities of most major AI model providers, offering a structured defense against the inherent fragility of today’s AI infrastructure.

The Rising Stakes of AI Downtime

From Experiment to Essential Infrastructure

The enterprise AI landscape is undergoing a profound and rapid transformation, moving decisively beyond internal pilot programs and proofs-of-concept toward the deployment of AI in customer-facing, revenue-generating, and operationally critical applications. This shift has fundamentally altered the risk calculus associated with AI integration. When an AI system that handles customer support, manages logistics, or powers a core product feature fails, the consequences are no longer abstract or confined to a development team. Instead, they manifest as immediate and tangible impacts on the bottom line, from lost sales and operational paralysis to diminished customer satisfaction and lasting damage to brand reputation. Reliability, once a secondary consideration to model performance, has now become a paramount concern for any organization staking its business processes on AI. The conversation has shifted from “How smart is the model?” to “How dependable is the service?”

This heightened need for reliability is compounded by the inherent fragility of the current AI infrastructure. A consensus is emerging that large language model (LLM) providers such as OpenAI, Anthropic, and Google, despite their immense scale and resources, do not yet offer the same level of reliability as traditional cloud infrastructure providers like Amazon Web Services (AWS) or Microsoft Azure. The systems that power these advanced models are extraordinarily complex, resource-intensive, and susceptible to periodic outages, latency spikes, and performance degradation. According to TrueFoundry’s CEO, Nikunj Bajaj, such incidents occur every few weeks or months. This creates a recurring risk for dependent businesses, making it clear that relying on a single provider for a mission-critical function is no longer a tenable strategy.

Redefining Failure in the AI Era

The challenge of AI downtime extends well beyond complete, binary outages where a service is simply unavailable. A more significant and insidious problem is the rise of partial failures, often referred to as “brownouts.” These are scenarios where an AI model remains technically online and responsive but experiences a severe degradation in performance, such as significant slowdowns in response time or a noticeable drop in the quality and accuracy of its outputs. These “slow but technically up” situations can be more damaging than a complete crash because they often evade traditional monitoring systems that are designed to detect simple on/off states. While the service appears functional, it silently erodes the user experience, violates service-level agreements (SLAs), and can lead to flawed business decisions based on compromised AI-generated insights. This nuanced form of failure requires a more sophisticated approach to detection and mitigation.

Furthermore, the process of rerouting AI traffic during a failure is far more complex than the failover procedures for traditional digital infrastructure. Simply switching from one model provider to another is not a viable solution without addressing several critical challenges. A key consideration is maintaining the quality and consistency of the AI’s output. A prompt meticulously engineered to produce optimal results on a model like GPT-4 may yield entirely different, and potentially unacceptable, results on a backup model such as Google’s Gemini or Anthropic’s Claude. This disparity necessitates a more advanced, automated approach that can seamlessly manage these transitions by not only redirecting traffic but also adapting prompts and configurations on the fly. Without this intelligence, a failover event could simply trade one type of failure for another, replacing an outage with a stream of irrelevant or incorrect outputs.

Introducing TrueFailover: A Multi-Layered Defense Strategy

Core Architecture and Capabilities

TrueFoundry’s TrueFailover is positioned not as a single-point fix but as a comprehensive “safety net” built upon layers of redundancy to create a robust and resilient AI ecosystem. The core philosophy of the platform is that true reliability in the AI domain is achieved by combining multiple defensive strategies, including multi-model, multi-region, and multi-cloud configurations. This approach of diversifying dependencies across different providers, geographical locations, and cloud platforms significantly reduces the probability of a single point of failure affecting end-users. By architecting a system that can withstand disruptions at various levels, TrueFailover provides enterprises with the confidence to deploy AI in their most critical applications without being held captive by the uptime limitations of any single vendor or service.

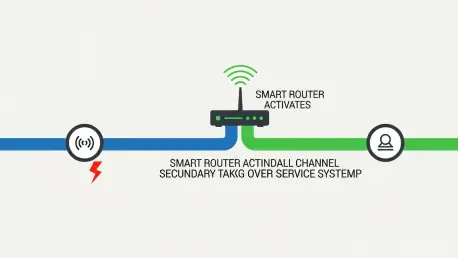

The system operates as an add-on module to TrueFoundry’s established AI Gateway, a platform that already demonstrates its scalability by processing over 10 billion monthly requests for major corporations. This existing infrastructure provides a proven foundation for TrueFailover’s automated resilience strategy. The architecture is designed to provide a unified and intelligent response to disruptions without requiring any manual intervention or code changes from application teams during a crisis. At its foundation, the system enables multi-model failover, which allows an enterprise to define a hierarchy of primary and backup models. These backups can be sourced from different providers, such as switching from OpenAI to Anthropic, or can even include self-hosted alternatives running on an enterprise’s own infrastructure. When the primary model becomes unavailable or unresponsive, traffic is automatically and transparently rerouted to the designated backup, ensuring service continuity. This protection also extends across geographical and infrastructural boundaries through multi-region and multi-cloud resilience, enabling health-based routing that can intelligently divert traffic away from a problematic region or cloud provider to a healthy alternative.
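The primary-and-backup hierarchy described above can be illustrated with a minimal sketch. This is not TrueFoundry's API: the `Backend` type, the `ModelUnavailable` exception, and `complete_with_failover` are hypothetical names chosen for illustration, and each backend is assumed to be a plain callable that takes a prompt and returns a completion.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


class ModelUnavailable(Exception):
    """Raised by a backend when it cannot serve the request (hypothetical)."""


@dataclass(frozen=True)
class Backend:
    name: str                   # illustrative label, e.g. "primary"
    call: Callable[[str], str]  # takes a prompt, returns a completion


def complete_with_failover(prompt: str, chain: Sequence[Backend]) -> tuple[str, str]:
    """Try each backend in priority order; return (backend_name, completion)."""
    errors: list[str] = []
    for backend in chain:
        try:
            return backend.name, backend.call(prompt)
        except ModelUnavailable as exc:
            # Record the failure and fall through to the next backend.
            errors.append(f"{backend.name}: {exc}")
    raise ModelUnavailable("all backends failed: " + "; ".join(errors))
```

In this sketch the caller never names a specific provider; it only passes the ordered chain, which is one way to keep application code unchanged when the active backend switches.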

Proactive and Intelligent Failure Prevention

Arguably the most sophisticated feature of the TrueFailover system is its degradation-aware routing. This capability moves far beyond simple uptime monitoring by continuously analyzing a complex combination of performance signals, including latency, error rates, and other subtle indicators of output quality. LLMs often operate as shared resources, meaning a sudden demand spike from one customer can negatively impact performance for all others using that same model instance. The system is designed to watch for these early warning signs—such as incrementally rising response times or an increase in unusual error patterns—that suggest a model is becoming unstable. An AI-driven decision engine then uses these aggregated signals to proactively reroute traffic before users experience a noticeable drop in service quality. This pre-emptive action effectively prevents a “brownout” from ever materializing, shifting the paradigm from reactive failure recovery to proactive failure avoidance.
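One simple way to picture degradation-aware routing is a rolling window of recent latencies and errors per endpoint, with traffic moved once either signal exceeds a budget. The sketch below is an assumption-laden illustration, not the product's decision engine: the `HealthWindow` class, the thresholds, and the nearest-rank percentile are all hypothetical choices, and it ignores output-quality signals entirely.

```python
import math
from collections import deque
from dataclasses import dataclass, field


@dataclass
class HealthWindow:
    """Rolling window of recent request outcomes for one model endpoint."""
    max_samples: int = 100          # assumed window size
    max_p95_latency_s: float = 2.0  # assumed latency budget
    max_error_rate: float = 0.05    # assumed error budget
    samples: deque = field(default_factory=deque)

    def record(self, latency_s: float, ok: bool) -> None:
        self.samples.append((latency_s, ok))
        if len(self.samples) > self.max_samples:
            self.samples.popleft()  # drop the oldest observation

    def p95_latency(self) -> float:
        latencies = sorted(lat for lat, _ in self.samples)
        if not latencies:
            return 0.0
        # Nearest-rank 95th percentile.
        rank = math.ceil(0.95 * len(latencies))
        return latencies[rank - 1]

    def error_rate(self) -> float:
        if not self.samples:
            return 0.0
        failures = sum(1 for _, ok in self.samples if not ok)
        return failures / len(self.samples)

    def is_degraded(self) -> bool:
        return (self.p95_latency() > self.max_p95_latency_s
                or self.error_rate() > self.max_error_rate)


def pick_endpoint(windows: dict[str, HealthWindow], preference: list[str]) -> str:
    """Return the first preferred endpoint that is not degraded."""
    for name in preference:
        if not windows[name].is_degraded():
            return name
    return preference[0]  # nothing looks healthy: stay on the primary
```

Because the check looks at tail latency and error rate rather than a binary up/down probe, an endpoint that still answers every request can be routed around once it becomes slow.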

To address the critical challenge of maintaining output quality across different models, TrueFailover automates the complex task of managing provider-specific prompts and configurations. Enterprises can define and pre-test unique prompts tailored for each potential backup model. When a failover event occurs, the system does not just switch the API endpoint; it also dynamically applies the corresponding, pre-validated prompt. This “planned, not reactive” approach ensures that the quality and relevance of the AI’s output remain within acceptable parameters, a crucial factor for maintaining a consistent and reliable user experience. To further enhance stability, the platform incorporates strategic caching. This feature acts as a buffer, shielding the underlying AI models from sudden, overwhelming traffic spikes and helping to prevent rate-limit-related failures during periods of high demand. By absorbing these demand surges, the system prevents them from causing service throttling or brownouts, adding another layer of resilience to the entire AI stack.
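The two ideas in this paragraph, per-model prompts and caching, can be sketched together. Everything here is hypothetical: the model names, the template text, and the `CachedClient` class are placeholders for illustration, and the cache is a plain in-memory dictionary with no expiry, which a real deployment would likely need.

```python
from typing import Callable

# Hypothetical, pre-validated templates, one per approved model.
PROMPTS: dict[str, str] = {
    "primary-model": "Summarize in three bullet points:\n{text}",
    "backup-model": "You are a concise assistant. Give exactly three bullets.\n\nText:\n{text}",
}


def render_prompt(model: str, text: str) -> str:
    """Return the template validated for `model`, filled with the input."""
    try:
        template = PROMPTS[model]
    except KeyError:
        # Refuse to send an untested prompt to a model nobody validated.
        raise ValueError(f"no validated prompt for model {model!r}") from None
    return template.format(text=text)


class CachedClient:
    """Serve repeated requests from memory so they never reach the provider."""

    def __init__(self, call_model: Callable[[str, str], str]) -> None:
        self._call_model = call_model  # (model, prompt) -> completion
        self._cache: dict[tuple[str, str], str] = {}
        self.upstream_calls = 0        # how many requests reached a provider

    def complete(self, model: str, text: str) -> str:
        key = (model, text)
        if key not in self._cache:
            self.upstream_calls += 1
            prompt = render_prompt(model, text)
            self._cache[key] = self._call_model(model, prompt)
        return self._cache[key]
```

Keying the cache on the model as well as the input keeps a backup model's answer from being served as if it came from the primary; whether that separation is desirable depends on how strictly outputs must match.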

Designed for the Enterprise

Upholding Compliance and Governance

Recognizing the unique constraints faced by large enterprises, especially those in highly regulated industries like healthcare and financial services, TrueFoundry explicitly designed TrueFailover to operate strictly within enterprise-defined guardrails. A core principle of the system is that data is never routed to a model, provider, or geographical region that has not been explicitly pre-approved by the enterprise’s administrators. This gives compliance and security teams granular control to define which models are acceptable for failover, which vendors are permitted to receive traffic, and which data residency requirements must be upheld at all times. This ensures that the system’s automated responses to outages do not inadvertently compromise security protocols, data privacy mandates like GDPR or HIPAA, or other stringent regulatory obligations. The platform’s ability to maintain these controls during a crisis makes it a viable solution for even the most risk-averse organizations.
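A guardrail of this kind amounts to filtering failover candidates against an explicit allowlist before any routing decision is made. The following is a minimal sketch under assumed names: `Target`, `Policy`, and `approved_chain` are hypothetical and do not reflect how TrueFailover represents its policies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    provider: str
    model: str
    region: str


@dataclass(frozen=True)
class Policy:
    """Administrator-defined allowlists; anything not listed is denied."""
    allowed_providers: frozenset[str]
    allowed_models: frozenset[str]
    allowed_regions: frozenset[str]

    def permits(self, target: Target) -> bool:
        return (target.provider in self.allowed_providers
                and target.model in self.allowed_models
                and target.region in self.allowed_regions)


def approved_chain(candidates: list[Target], policy: Policy) -> list[Target]:
    """Keep the failover candidates, in order, that the policy approves."""
    return [t for t in candidates if policy.permits(t)]
```

The design choice worth noting is deny-by-default: a target must match all three allowlists, so adding a new region or vendor to the candidate list has no effect until an administrator approves it.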

While the system is powerful, the company is transparent about what it cannot solve on its own. The effectiveness of TrueFailover is ultimately contingent on proper and thoughtful configuration by the enterprise. For instance, if an organization sets up a failover path from a large, powerful model like GPT-4 to a much smaller, less capable model without adjusting prompts and performance expectations accordingly, the system cannot guarantee equivalent output quality. The logic of the failover path must align with business requirements. Furthermore, TrueFailover cannot protect against a single point of failure within an enterprise’s own infrastructure; if all designated self-hosted models run on a single GPU cluster that experiences a hardware failure, there is no alternative to which traffic can be routed. The system is a tool for building resilience, not a replacement for sound infrastructure design.

A Critical Market Inflection Point

The timing of TrueFailover’s launch coincides with a critical inflection point in the market. As noted by TrueFoundry’s leadership, the era of purely internal-facing AI experiments is rapidly drawing to a close. With AI now powering public-facing applications and integral business functions, the financial and reputational stakes of an outage are higher than ever before. The traditional service-level agreement (SLA) models common in cloud computing are not yet widely applicable to the AI world due to the shared-resource nature and immense operational costs of running large language models. This creates a significant market gap for third-party resilience solutions that can bridge the divide between business requirements and the current state of AI infrastructure reliability.

Enterprises are quickly coming to understand that reliability cannot be simply outsourced to a single model provider; it must be deliberately engineered through a multi-layered strategy. TrueFoundry’s credibility in this space is bolstered by its existing footprint within large-scale enterprise AI deployments. Founded in 2021 by former Meta engineers, the San Francisco-based company has secured $21 million in funding and supports a notable roster of clients, including Nvidia, Adopt AI, and Games 24×7. With its platform already managing over 1,000 machine learning clusters and processing immense traffic volumes, TrueFoundry has a real-world foundation for its reliability-focused ambitions. TrueFailover is not just a theoretical solution but a product born from deep experience in managing the operational challenges of AI at scale.

A Foundational Step Towards Industrialized AI

The introduction of TrueFoundry’s TrueFailover is a significant development in the broader industrialization of enterprise AI, reflecting a shift in the priorities of technology leaders, who have moved beyond a singular focus on model performance to embrace the importance of operational resilience. The platform directly addresses the business risk posed by a dependency on single, often fallible, AI providers. By delivering a solution that automates multi-model and multi-region failover, actively monitors for performance degradation, and manages the complexities of prompt adaptation, TrueFoundry provides an infrastructure layer that allows businesses to de-risk their strategic AI investments. The system’s architecture, which incorporates strict, user-defined compliance guardrails, positions it as a viable option for even the most highly regulated industries. Ultimately, TrueFailover offers an answer to the urgent question that has come to define the enterprise AI landscape: what happens when the AI that supports your business suddenly disappears? With this solution, businesses gain the tools to ensure that, for their customers and internal users, that moment of disappearance never has to arrive.