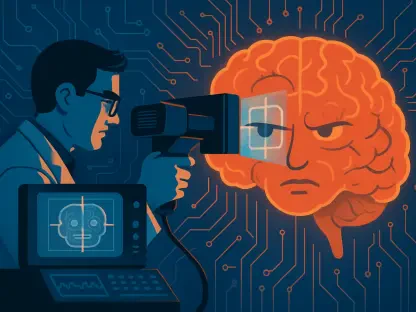

The nuanced and often subconscious language of human gestures has long represented a formidable barrier for technology, a silent dialect that machines could perceive but rarely comprehend. While systems have been able to recognize a predefined set of rigid movements for years, this interaction has often felt less like a conversation and more like a series of commands, lacking the fluidity and context of genuine human communication. This gap between simple recognition and true understanding has limited the potential of human-computer interaction. However, a significant shift is underway, driven by recent research that moves beyond one-dimensional analysis. A novel framework detailed in a study by Q. Lu introduces a more holistic approach, suggesting a future where our devices don’t just see our motions but begin to grasp the intent behind them, heralding a new era of intuitive and seamless technological integration. This evolution is built upon a sophisticated architecture that mirrors human perception by integrating multiple layers of information and dynamic analysis.

The Building Blocks of a Smarter System

A foundational innovation in this advanced framework is the deliberate move away from simplistic, single-modality analysis toward comprehensive multimodal data integration. Traditional gesture recognition systems, which often relied solely on a single visual feed from a camera, proved fragile and unreliable in real-world conditions. Factors such as variable lighting, partially obstructed views, or visually complex backgrounds could easily confuse these unimodal systems, leading to frustrating inaccuracies. To overcome these limitations, the new approach advocates for the simultaneous integration of multiple data streams. This method creates a richer and more complete data landscape by combining visual information with input from motion tracking sensors and even auditory cues. By analyzing this broader spectrum of information, the system constructs a more resilient and accurate interpretation of a user’s actions. Consequently, a temporary weakness in one modality, such as a camera being momentarily obscured, can be effectively compensated for by the strengths of another, like continuous data from motion sensors.
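One way to picture this compensation is confidence-weighted late fusion, sketched below. This is only an illustration and not the method from Lu's study. The function name `fuse_scores`, the modality labels, and the confidence values are all hypothetical, and the sketch assumes each modality already produces per-gesture scores along with a reliability estimate.

```python
from typing import Dict, List


def fuse_scores(scores: Dict[str, List[float]],
                confidence: Dict[str, float]) -> List[float]:
    """Confidence-weighted average of per-modality gesture scores.

    Hypothetical helper: `scores` maps a modality name to one score per
    gesture class, `confidence` maps the same names to a reliability
    estimate (0.0 = unusable, 1.0 = fully trusted).
    """
    total = sum(confidence[m] for m in scores)
    if total <= 0:
        raise ValueError("at least one modality needs non-zero confidence")
    n_classes = len(next(iter(scores.values())))
    fused = [0.0] * n_classes
    for modality, class_scores in scores.items():
        weight = confidence[modality] / total  # normalized reliability
        for i, score in enumerate(class_scores):
            fused[i] += weight * score
    return fused


# Assumed scenario: the camera is briefly occluded, so its scores are
# uninformative and its confidence drops to zero; the motion sensor
# still clearly favors the second gesture.
scores = {
    "camera": [0.5, 0.5],
    "motion": [0.1, 0.9],
}
confidence = {"camera": 0.0, "motion": 1.0}
fused = fuse_scores(scores, confidence)
```

In this toy scenario the fused result follows the motion sensor alone, which mirrors the idea that a weak modality can be covered by a stronger one.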

Further enhancing the system’s capabilities is its analysis of motion over time, coupled with a mechanism inspired by human focus. The research draws a critical distinction between recognizing static poses and interpreting dynamic gestures, which unfold over a sequence of moments rather than in a single instant. To capture this, the method implements inter-frame motion analysis, continuously tracking how a movement evolves across a sequence of frames. This temporal analysis is crucial for capturing the defining characteristics of a gesture, such as its velocity, trajectory, and acceleration, all of which are lost in a single snapshot. Complementing this is a shared attention mechanism, which allows the system to dynamically prioritize the most relevant information. For instance, when interpreting a gesture involving intricate finger movements, the system allocates more computational “attention” to high-resolution visual data of the hand. Conversely, for a large, sweeping arm motion, it may prioritize data from motion tracking sensors. This dynamic, context-aware focus helps the system distinguish between gestures that might otherwise appear similar, leading to a more robust and intuitive interaction.
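The two ideas in this paragraph can be sketched in a few lines. What follows is an illustration under stated assumptions, not the architecture from the study: velocity and acceleration are approximated with simple finite differences over tracked 2D positions, and a plain softmax over relevance scores stands in for the shared attention mechanism. The names `motion_features` and `attention_weights`, the sample track, and the relevance values are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def motion_features(track: Sequence[Point],
                    dt: float) -> Tuple[List[Point], List[Point]]:
    """Finite-difference velocity and acceleration of a tracked 2D point.

    `track` holds one (x, y) position per frame and `dt` is the time
    between frames. Both are assumptions made for this sketch.
    """
    velocity = [((x1 - x0) / dt, (y1 - y0) / dt)
                for (x0, y0), (x1, y1) in zip(track, track[1:])]
    acceleration = [((vx1 - vx0) / dt, (vy1 - vy0) / dt)
                    for (vx0, vy0), (vx1, vy1) in zip(velocity, velocity[1:])]
    return velocity, acceleration


def attention_weights(relevance: Dict[str, float]) -> Dict[str, float]:
    """Softmax over per-modality relevance scores (a stand-in for attention)."""
    names = list(relevance)
    peak = max(relevance.values())  # subtract the max for numerical stability
    exps = [math.exp(relevance[name] - peak) for name in names]
    total = sum(exps)
    return {name: e / total for name, e in zip(names, exps)}


# A hand moving right and speeding up across four frames.
track = [(0.0, 0.0), (1.0, 0.0), (3.0, 0.0), (6.0, 0.0)]
velocity, acceleration = motion_features(track, dt=1.0)

# Assumed relevance scores for a fine finger gesture: vision matters more.
weights = attention_weights({"vision": 2.0, "motion": 0.0})
```

A single frame from `track` carries no hint that the hand is accelerating, whereas the differences between frames do. The weights sum to one and shift toward whichever modality is scored as more relevant for the gesture at hand.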

From Theory to Real-World Impact

The combination of these techniques yields a system that goes beyond simple command execution toward a more nuanced and accurate understanding of user intent. The primary finding from Lu’s research is that by integrating multimodal inputs, temporal motion analysis, and an adaptive attention mechanism, technology can support interactions that are not just reactive but proactive and even anticipatory. This reflects a significant trend in the broader field of human-computer interaction: the development of more human-centric and context-aware systems. According to the study, the framework improves accuracy, responsiveness, and adaptability compared with conventional methods. This allows for interfaces that feel less like rigid tools and more like collaborative partners, capable of understanding the subtleties of human expression and responding in a more natural and intuitive manner. The implications of this shift could redefine how we interact with the digital world across numerous domains.

The potential applications for this highly refined gesture recognition framework are extensive and transformative. In the realm of accessibility and assistive technologies, its ability to recognize subtle, unconventional, or variable gestures can profoundly empower individuals with limited mobility, allowing them to interact more effectively with computers, smart home devices, and communication aids. This fosters greater independence and promotes a more inclusive digital society. For immersive environments like virtual and augmented reality, where intuitive control is paramount, this technology enables more natural manipulation of digital objects and seamless navigation of virtual spaces. In smart environments, from homes to autonomous vehicles, the framework can lead to more fluid integration of user commands, allowing a simple gesture to control lighting or manage an infotainment system. Furthermore, in an increasingly remote professional world, this technology can enhance virtual meetings by accurately interpreting non-verbal cues, helping to bridge the physical gap and foster clearer, more emotionally resonant communication.

Charting the Path Forward

Ultimately, Q. Lu’s gesture recognition method represents a significant step forward in the field of human-computer interaction, demonstrating the power of an integrated, dynamic, and cognitively inspired approach. The research not only offers an elegant solution to longstanding challenges but also lays the groundwork for future innovations. As research continues to build upon these principles, the integration of more advanced artificial intelligence techniques, such as deep learning and natural language processing, is expected to create systems that are more personalized, emotionally aware, and contextually responsive. Lu’s study points toward a future where the boundary between human and machine becomes increasingly blurred, shaped by technologies that are designed to understand and respond to us on our own terms, through the silent and powerful language of our gestures.