The silent integration of artificial intelligence into the machinery of criminal justice is rapidly reshaping how decisions affecting life and liberty are made, creating a high-stakes environment where the promise of efficiency directly collides with the peril of systemic injustice. As algorithms increasingly influence everything from policing strategies to bail hearings and sentencing recommendations, the conversation has decisively shifted from whether these technologies should be used to how they must be governed. The core dilemma lies in harnessing the immense potential of AI to reduce human error and optimize resources without simultaneously automating and amplifying long-standing societal biases. Given the complexity and opacity of many AI models, errors can become deeply embedded and nearly impossible to detect, leading to devastating and lasting consequences for individuals. This reality has created an urgent need for a clear, robust framework to ensure that the deployment of these powerful tools aligns with foundational democratic values and upholds, rather than undermines, the principles of a fair and equitable justice system.

The Double-Edged Sword of Algorithmic Justice

Navigating Potential Benefits and Inherent Risks

Proponents of integrating artificial intelligence into the justice system highlight its potential to bring a new level of objectivity and efficiency to historically fallible human processes. The theoretical promise is that well-designed algorithms could mitigate the impact of individual human biases, leading to more consistent and equitable outcomes in areas like bail setting and parole decisions. By analyzing vast datasets, these systems could optimize the allocation of limited resources, enabling law enforcement agencies to deploy personnel more effectively or helping courts manage overwhelming caseloads to reduce procedural delays. Furthermore, data-driven insights derived from AI analysis could inform the development of more effective and evidence-based criminal justice policies, moving the system away from intuition-based practices. In an ideal scenario, AI would function as a powerful tool for enhancing precision, reducing subjective error, and ultimately fostering a justice system that is not only more streamlined but also fundamentally fairer for all participants.

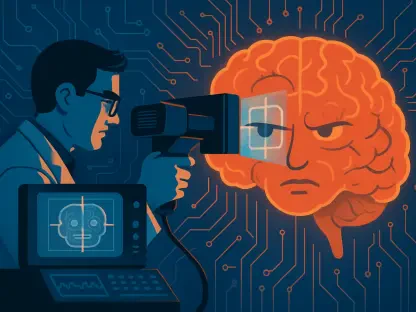

However, the significant risks associated with unregulated AI deployment cast a long shadow over its potential benefits. A primary and persistent danger is that algorithms trained on historical justice system data will inevitably learn and perpetuate the biases embedded within that data, leading to the calcification of discriminatory practices against marginalized communities. This can create a feedback loop where biased predictions lead to biased enforcement, which in turn generates more biased data. Moreover, the “black box” nature of many sophisticated AI models poses a direct threat to the constitutional principle of due process; if an individual cannot understand or challenge the logic behind an algorithmic decision that negatively affects their freedom, their right to a fair hearing is compromised. This lack of transparency erodes accountability, making it exceedingly difficult to assign responsibility when an algorithm makes a catastrophic error. Because these systems operate at a massive scale, a single flawed model could inflict systemic, unjust harm on thousands of people before the problem is even discovered.

The Imperative for Proactive Governance

The rapid pace of technological innovation in artificial intelligence is far outstripping the deliberate speed of legislative and regulatory bodies, creating a critical need for proactive governance. A reactive approach, where rules are created only after significant harm has occurred, is dangerously inadequate in this context. By the time the negative consequences of a poorly implemented AI system become apparent, lives may have already been irrevocably damaged, and trust in the justice system further eroded. The imperative is to establish clear and enforceable “guardrails” before these technologies become deeply entrenched in critical decision-making processes. This requires a broad societal consensus on the ethical and legal boundaries that must not be crossed, ensuring that the integration of AI is a deliberate and thoughtful process. The goal is to steer technological development in a direction that is fundamentally aligned with democratic values and the public good, rather than allowing it to be dictated solely by the pursuit of efficiency or commercial interests.

At the heart of this governance challenge is the non-negotiable principle that foundational rights must always take precedence over operational convenience. While the prospect of a more efficient and cost-effective justice system is appealing, no gain in speed or resource optimization can justify the sacrifice of due process, human dignity, and equal protection under the law. These are the cornerstones of a just society, and their preservation must be the primary consideration in the adoption of any new technology. A guiding framework is therefore not merely a technical checklist for developers but a moral and ethical compass for policymakers. It is a tool designed to facilitate deliberate, transparent decisions that weigh the potential benefits of AI against its potential to infringe upon fundamental rights. This ensures a system where technology is subordinate to constitutional principles, preserving the primacy of human values in an increasingly automated world and reinforcing public confidence that justice remains the ultimate objective.

A Framework for Responsible AI Implementation

Core Principles for Safeguarding Justice

In response to the urgent need for oversight, a diverse task force of experts has proposed a comprehensive framework built upon five core principles designed to guide the responsible use of AI. The first of these principles is that any system must be safe and reliable. This requires that all AI tools undergo thorough and continuous testing, monitoring, and active management to prevent and correct errors that could jeopardize public safety or an individual’s liberty. The second foundational principle is that systems must be confidential and secure. The criminal justice system handles vast amounts of highly sensitive personal data, from biometric information to detailed criminal histories. Consequently, any AI tool must be engineered with robust, state-of-the-art security protocols to protect this data, preserve individual privacy, and operate with a high degree of transparency regarding how information is collected, used, and stored. These two principles establish a baseline of technical competence and data integrity that is essential for any trustworthy application of AI in this high-stakes domain.

The framework further mandates that AI tools be effective and helpful, ensuring that their adoption is justified only when they can demonstrably improve justice system outcomes or operational efficiencies without introducing unacceptable collateral risks. Technology should not be adopted for its own sake but must serve a clear and beneficial purpose. Building on this, the fourth principle demands that systems be fair and just. This goes beyond simply avoiding harm and requires that AI tools be actively and continuously audited to identify, expose, and mitigate inherent biases that could lead to discriminatory results. The ultimate goal is to use technology to promote fairness, not just to avoid exacerbating existing inequities. Finally, and perhaps most importantly, the framework insists on the principle of democratic and accountable control. This ensures that ultimate decision-making authority is never abdicated to an algorithm. Meaningful human oversight must be maintained at all critical junctures, guaranteeing that technology serves as an aid to human judgment rather than a replacement for it.
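To make the idea of a continuous bias audit more concrete, the following is a minimal sketch in Python rather than anything prescribed by the framework itself. It assumes one particular fairness check, comparing false positive rates across groups, and the record layout, the group labels, the sample data, and the `REVIEW_THRESHOLD` value are all hypothetical choices made purely for illustration. A real audit would involve many more metrics and far more data.

```python
from collections import defaultdict

# Hypothetical cutoff: a disparity above this value triggers human review.
# This number is an illustrative assumption, not a legal or statistical standard.
REVIEW_THRESHOLD = 1.25


def false_positive_rates(records):
    """Return the false positive rate for each group.

    Each record is a (group, predicted_high_risk, reoffended) tuple.
    A false positive is a person flagged as high risk who did not reoffend.
    """
    flagged = defaultdict(int)    # non-reoffenders who were flagged high risk
    negatives = defaultdict(int)  # all non-reoffenders
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {group: flagged[group] / negatives[group] for group in negatives}


def disparity_ratio(rates):
    """Ratio of the highest to the lowest group rate (1.0 means parity)."""
    lowest, highest = min(rates.values()), max(rates.values())
    return float("inf") if lowest == 0 else highest / lowest


# Made-up records for illustration only; this is not real case data.
records = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", False, True),
]

rates = false_positive_rates(records)
ratio = disparity_ratio(rates)
needs_review = ratio > REVIEW_THRESHOLD  # escalate to a human reviewer, never auto-correct

print(rates, ratio, needs_review)
```

In this sketch the check only raises a flag. Consistent with the principle of democratic and accountable control, the decision about what to do with a flagged disparity stays with a human reviewer.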

Upholding Democratic Values Above All

This proposed set of principles should be understood less as a rigid technical manual and more as a foundational charter for ethical governance in the age of automation. Its overarching purpose is to ensure that the integration of AI into the justice system is a conscious, transparent, and value-driven endeavor. The framework compels policymakers, technologists, and legal professionals to engage in a critical dialogue about the trade-offs between technological progress and the preservation of fundamental human rights. It firmly establishes that technology must always be the servant of justice, not its master. By centering principles such as human dignity, accountability, and equal protection, this guide aims to steer the deployment of AI in a direction that reinforces the constitutional and ethical bedrock of the legal system. It is a vital, proactive strategy designed to prevent a future in which the relentless pursuit of efficiency leads to the erosion of equity and the substitution of convenience for constitutionally guaranteed rights.

The urgent call for a clear oversight structure marks a pivotal moment for policymakers. Without definitive guardrails, the risks of systemic injustice and the gradual erosion of constitutional rights will grow in lockstep with technological advancement. The central challenge is to ensure that democratic values consistently take precedence over the powerful allure of convenience and operational efficiency. Adopting this guiding framework would represent a society’s deliberate choice to steer its most powerful new tools toward the reinforcement of justice, not its distortion. Such a choice would help ensure that the future of the legal system is built not on the unquestioned and opaque authority of algorithms, but on the enduring and transparent principles of human dignity and equal protection under the law.