The generative AI landscape has just been significantly reshaped by the arrival of FLUX.2 [klein], a new suite of open-source models from the German startup Black Forest Labs that prioritizes practical speed and accessibility over the relentless pursuit of maximum theoretical image quality. This

Beneath the surface of the generative AI revolution lies a staggering inefficiency, a silent tax paid in wasted GPU cycles for tasks far below a model's pay grade. As enterprises transition from a period of experimental enthusiasm to the pragmatic realities of large-scale deployment, this hidden

From Financial Anxiety to Automated Assistance: A New Generation's Dilemma

Young adults today are navigating a landscape of unprecedented financial pressure, caught between the aspiration for stability and the reality of economic uncertainty that widens the gap between their savings goals and their

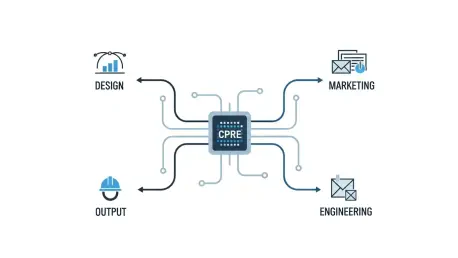

The whirlwind of artificial intelligence adoption that defined 2025 has given way to a period of strategic recalibration, marking a definitive end to the technology's honeymoon phase. After a year characterized by a frenetic and often fragmented implementation of AI tools, where businesses raced to

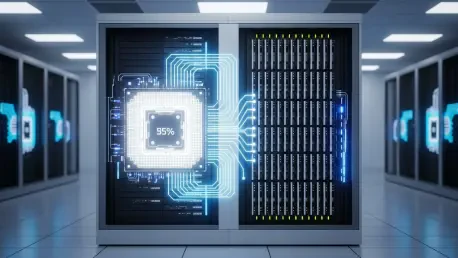

A monumental leap in artificial intelligence hardware has been achieved through a novel training method that reduces energy consumption by nearly six orders of magnitude compared to traditional GPUs while simultaneously boosting model accuracy. This groundbreaking technique, developed by a team of

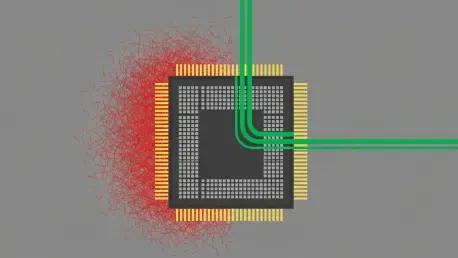

The tantalizing promise of quantum computing, with its potential to solve problems far beyond the reach of the most powerful classical supercomputers, has long been tempered by a fundamental and persistent obstacle: the inherent fragility of quantum information. For years, the global race to build